Invented by Tufail Ahmed Khan, Pranjal Goswami, Di Hu, and Changyong Wei; Truist Bank

Modern businesses are flooded with data, but making sense of all that information can be very hard. Data can get messy, duplicated, or even wrong, making it tough to work with. A new patent application introduces a smart computing system that uses machine learning and artificial intelligence to keep databases clean, organized, and trustworthy. Let’s break down how this technology works, why it matters, and what makes it so special.

Background and Market Context

Almost every company today collects more data than ever before. This data comes from sales, customers, websites, and even from other businesses. Storing all this information is expensive, especially if the same data gets saved in more than one place. When teams work with messy or duplicated data, it leads to wasted time, higher storage bills, and mistakes in reports. Bad data can even cause costly decisions or trouble with privacy regulations.

As more companies move their databases to the cloud, it becomes easier for teams to share and access information. But this also means that duplicates and errors can quickly spread. If workers don’t know which dataset is correct, or if the same data is stored in many places, it causes confusion and can make important analytics unreliable. In industries like finance, healthcare, and retail, these problems can translate into lost revenue and increased risk.

To solve these challenges, businesses need ways to regularly check, clean, and organize their data. Manual cleanup is slow and often misses hidden issues. Traditional data management tools can help, but they usually require experts to set up rules and check every step. As data grows, these old systems can’t keep up. That’s why there is a growing demand for automated systems that can scan huge amounts of data, spot problems, and fix them with little or no human help.

The patent application we’re exploring addresses these needs by combining artificial intelligence, machine learning, and data quality rules into a single, automated platform. This system promises to reduce storage costs, improve data accuracy, and help businesses meet strict data regulations — all while freeing up employees to focus on more important work.

Scientific Rationale and Prior Art

Before diving into the new invention, it’s important to understand what’s already out there. In the past, database cleaning was mostly done manually or with simple, rule-based software. These older tools would scan for things like missing values (nulls), duplicates, or wrong data types. Human experts had to set up the rules for what “good” data looked like and had to check the results. This approach was slow, error-prone, and hard to scale.

Over time, some improvements were made. More advanced tools started using statistical methods to find outliers or spot columns with unusual values. Some systems let users set up routines that would run on a schedule, but they still needed lots of input from data experts. Tools like ETL (Extract, Transform, Load) pipelines helped move and clean data, but didn’t really “think” about the meaning or context of the data.

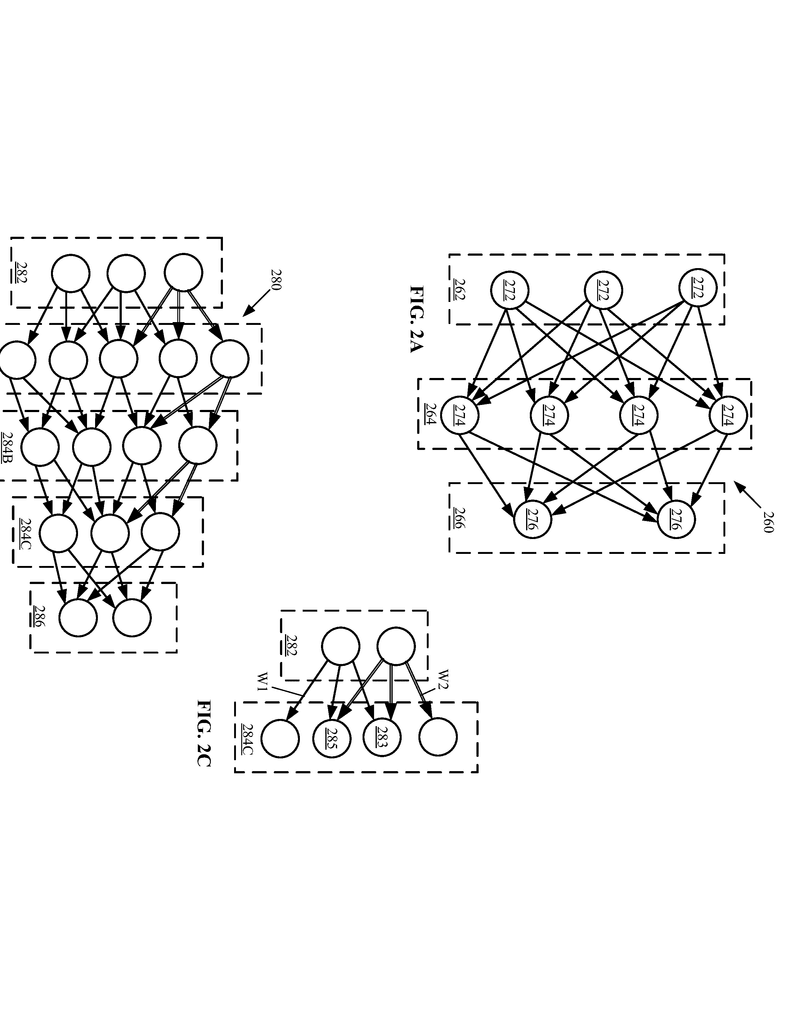

Recent years saw the rise of machine learning in data management. Systems began to use models trained on past data to spot patterns and problems that rules might miss. For example, a machine learning model could notice if a set of email addresses doesn’t match the usual pattern, or if a column labeled “phone number” has text instead of numbers. Some tools could even group similar records together or guess what kind of data a column held by looking at sample values.

However, even these newer systems had limits. Many would only suggest fixes, not apply them automatically. They might not adapt to new types of data without retraining or reprogramming. Most didn’t track usage patterns over time or learn from historical cleanup decisions. Very few could recommend or enforce data quality rules based on the actual meaning (semantics) of the data — such as knowing that a social security number should never be empty or duplicated.

The patent application in question builds on these advances but takes them further. It introduces a system that not only analyzes and cleans data, but also uses a feedback loop to optimize its own rules, learns from past decisions, and connects the dots between the meaning of the data and the right way to keep it clean. It brings together ideas from database management, artificial intelligence, and user experience to make data cleaning both smarter and simpler.

Invention Description and Key Innovations

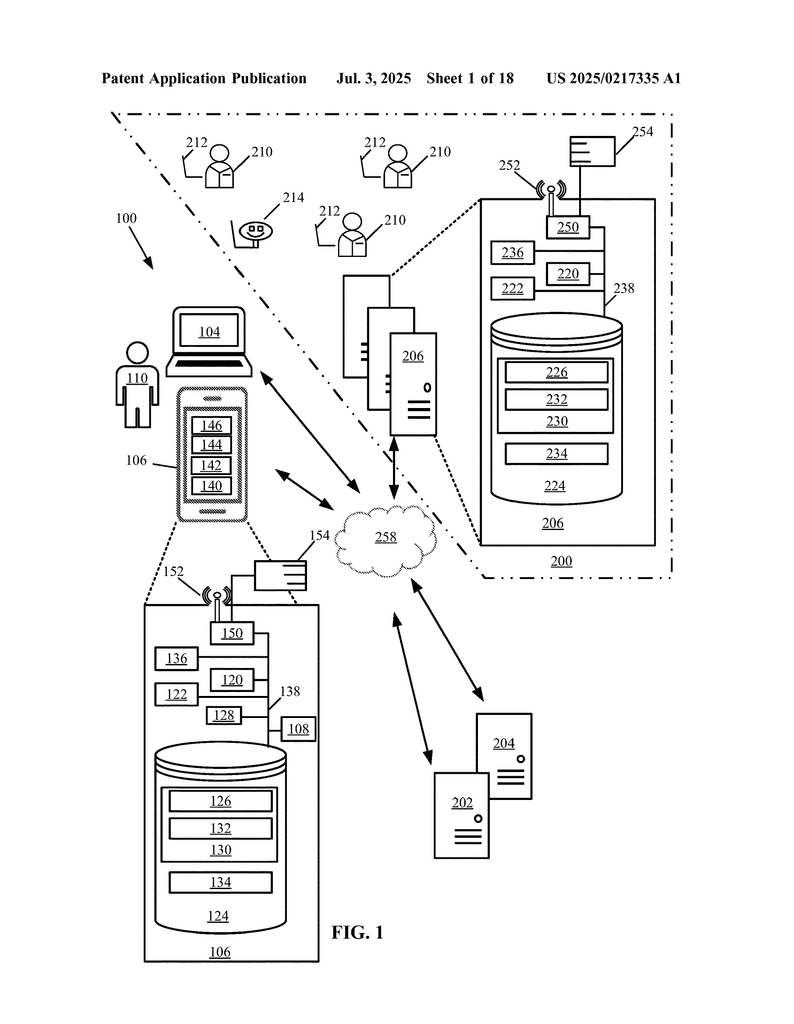

At the heart of this invention is a computing system designed to manage, clean, and classify data automatically. This system uses a mix of traditional programming, machine learning models, and artificial intelligence to do its job. Here’s how it works, step by step:

Accessing and Profiling Data

The system can connect to one or more storage locations and access datasets. For each dataset, it computes a digital fingerprint (checksum) to quickly spot duplicates or track changes over time. It profiles the data, checking the structure, types of values, and any patterns it finds. For example, it will notice if a column has a lot of empty values, if values are repeated, or if some entries don’t match what you’d expect (like a phone number column filled with words).
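The fingerprinting and profiling step can be illustrated with a minimal sketch. This is not the patent's implementation: the function names `dataset_checksum` and `profile_column` are hypothetical, SHA-256 is an assumed choice of checksum, and making the checksum independent of row order is a design assumption made here so that reshuffled copies still count as duplicates.

```python
import hashlib
from collections import Counter

def dataset_checksum(rows):
    """Return a SHA-256 hex digest over the rows, independent of row order."""
    digest = hashlib.sha256()
    # Sorting the serialized rows makes reordered copies hash identically.
    for line in sorted(repr(tuple(row)) for row in rows):
        digest.update(line.encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()

def profile_column(values):
    """Summarize one column: row count, empty values, distinct values, repeats."""
    non_empty = [v for v in values if v not in (None, "")]
    counts = Counter(non_empty)
    return {
        "rows": len(values),
        "empty": len(values) - len(non_empty),
        "distinct": len(counts),
        "repeated": sum(1 for c in counts.values() if c > 1),
    }

rows_a = [("alice", "555-0100"), ("bob", ""), ("alice", "555-0100")]
rows_b = list(reversed(rows_a))

print(dataset_checksum(rows_a) == dataset_checksum(rows_b))
print(profile_column([phone for _, phone in rows_a]))
```

Two datasets with equal checksums are candidates for exact-duplicate cleanup, while the profile feeds the later rule recommendations.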

Analyzing Patterns and Recommending Rules

Using both statistics and machine learning, the system looks for common issues: missing values, duplicates, wrong data types, and values that don’t fit expected formats. It examines both the column names and the actual data, using regular expressions for text patterns and machine learning models for more complex cases. For example, a column might be identified as “email” because the system sees both the name and the data match email patterns.
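As a rough illustration of combining column names with value patterns, the sketch below classifies a column with regular expressions only; the machine learning part is left out. The patterns, the name hints, the 0.9/0.1 weighting, and the `classify_column` function are all assumptions made for this example, not details from the application.

```python
import re

# Hypothetical patterns, listed from most to least specific so that ties
# resolve in favor of the stricter pattern.
PATTERNS = {
    "email": re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$"),
    "ssn": re.compile(r"^\d{3}-\d{2}-\d{4}$"),
    "phone": re.compile(r"^\+?[\d\s().-]{7,20}$"),
}
NAME_HINTS = {
    "email": ("email", "e_mail"),
    "ssn": ("ssn", "social"),
    "phone": ("phone", "tel"),
}

def classify_column(name, values, min_match=0.8):
    """Return (label, score), or (None, 0.0) if no pattern fits well enough."""
    samples = [v for v in values if v]
    if not samples:
        return None, 0.0
    best_label, best_score = None, 0.0
    for label, pattern in PATTERNS.items():
        match_rate = sum(1 for v in samples if pattern.match(v)) / len(samples)
        name_hit = any(hint in name.lower() for hint in NAME_HINTS[label])
        # Assumed weighting: values count for 90%, a matching name for 10%.
        score = 0.9 * match_rate + (0.1 if name_hit else 0.0)
        if match_rate >= min_match and score > best_score:
            best_label, best_score = label, score
    return best_label, best_score

print(classify_column("customer_email", ["a@x.com", "b@y.org", ""]))
print(classify_column("phone", ["hello", "world"]))
```

A column called "phone" that is filled with words gets no label here, which is exactly the kind of mismatch the profiling step is meant to surface.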

The system then suggests data quality rules based on what it finds. These rules might say things like “this column must not be empty,” “this field must be unique,” or “only numbers are allowed here.” Each rule is given a confidence score, showing how sure the system is that it applies.
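One simple way to attach a confidence score to a suggested rule is to measure how much of the existing data already complies with it. The sketch below does that for three rules; the rule names, the `recommend_rules` function, and the 0.9 threshold are illustrative assumptions, and the application may score rules quite differently.

```python
def recommend_rules(values, min_confidence=0.9):
    """Suggest rules for one column as (rule, confidence) pairs.

    Confidence is the share of values that already satisfy the rule.
    """
    total = len(values)
    if total == 0:
        return []
    non_empty = [v for v in values if v not in (None, "")]
    filled = len(non_empty)
    candidates = {
        "not_null": filled / total,
        "unique": len(set(non_empty)) / filled if filled else 0.0,
        "numeric_only": (
            sum(1 for v in non_empty if str(v).isdigit()) / filled if filled else 0.0
        ),
    }
    suggestions = [
        (rule, round(score, 2))
        for rule, score in candidates.items()
        if score >= min_confidence
    ]
    return sorted(suggestions, key=lambda item: item[1], reverse=True)

print(recommend_rules(["1001", "1002", "1003", "1004"]))
print(recommend_rules(["a", "a", "", "b"]))
```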

Optimizing Rules through Semantics and History

A key innovation is how the system uses data classification and historical information to get better over time. It classifies data columns by their semantic meaning, for example recognizing that a column holds social security numbers, phone numbers, or email addresses. It also learns from past cleanup actions, seeing which rules worked well and which didn’t. If every “social security number” column has been set to “not null” in the past, it will strongly recommend that rule in the future.

This feedback loop lets the system adapt to changing data and user needs, getting smarter as it goes. If a new type of data is added or if cleanup rules change, the system can learn these patterns and update its recommendations.
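The feedback loop can be pictured as a tally of past decisions per semantic class and rule, blended into the confidence of new recommendations. The sketch below is one possible reading, not the patented method: the history format, the function names, and the equal weighting between current evidence and history are assumptions.

```python
from collections import defaultdict

def acceptance_rates(history):
    """Compute how often each (semantic_class, rule) pair was accepted.

    history: iterable of (semantic_class, rule, accepted) tuples.
    """
    tallies = defaultdict(lambda: [0, 0])  # (class, rule) -> [accepted, total]
    for semantic_class, rule, accepted in history:
        tally = tallies[(semantic_class, rule)]
        if accepted:
            tally[0] += 1
        tally[1] += 1
    return {key: acc / total for key, (acc, total) in tallies.items()}

def adjusted_confidence(base, semantic_class, rule, rates, weight=0.5):
    """Blend the data-based confidence with the historical acceptance rate."""
    if (semantic_class, rule) not in rates:
        return base  # No history yet: fall back to the data-based score.
    return (1 - weight) * base + weight * rates[(semantic_class, rule)]

history = [
    ("ssn", "not_null", True),
    ("ssn", "not_null", True),
    ("ssn", "not_null", True),
    ("ssn", "unique", True),
    ("ssn", "unique", False),
]
rates = acceptance_rates(history)
print(adjusted_confidence(0.8, "ssn", "not_null", rates))
print(adjusted_confidence(0.8, "email", "not_null", rates))
```

Because "not null" was accepted for every past social security number column, its score rises above the data-based value, while a class with no history keeps its original score.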

Automated Cleanup and User Interaction

Once the rules are picked, either automatically or with user input, the system applies them to the data. It filters the dataset, keeping only the records that meet the quality standards and removing or isolating those that don’t. If a record fails a rule (for example, a row has a blank value where none are allowed), the system can delete it, move it to a separate dataset for review, or flag it for further action.
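The filtering step might look something like the following sketch, which splits rows into a clean set and a quarantine set and records why each quarantined row failed. The `apply_rules` function, the rule names, and the choice to quarantine rather than delete are assumptions made for illustration.

```python
def apply_rules(rows, rules):
    """Split rows into (clean, quarantined) according to per-column rules.

    rows: list of dicts; rules: {column: set of rule names}.
    Supported rule names in this sketch: "not_null" and "unique".
    """
    clean, quarantined = [], []
    # Track values already accepted for columns that must be unique.
    seen = {col: set() for col, names in rules.items() if "unique" in names}
    for row in rows:
        failures = []
        for column, names in rules.items():
            value = row.get(column)
            empty = value in (None, "")
            if "not_null" in names and empty:
                failures.append(f"{column}: not_null")
            if "unique" in names and not empty and value in seen[column]:
                failures.append(f"{column}: unique")
        if failures:
            quarantined.append({"row": row, "failures": failures})
        else:
            for column in seen:
                if row.get(column) not in (None, ""):
                    seen[column].add(row[column])
            clean.append(row)
    return clean, quarantined

rows = [
    {"id": "1", "email": "a@x.com"},
    {"id": "1", "email": "b@x.com"},
    {"id": "2", "email": ""},
]
rules = {"id": {"not_null", "unique"}, "email": {"not_null"}}
clean, quarantined = apply_rules(rows, rules)
print(len(clean), [q["failures"] for q in quarantined])
```

Keeping the failure reasons alongside each quarantined row gives a reviewer enough context to fix the record or confirm its removal.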

Users can interact with the system through a clear interface. The system can display prompts when it finds new issues or recommends new rules. Users can approve, reject, or tweak the recommended rules, giving them control while still saving time. The system keeps track of these decisions, using them to improve future recommendations.

Handling New Data and Real-Time Quality Checks

The invention doesn’t just work on existing data; it also checks new data as it’s added. When new records come in, the system applies the same set of quality rules, only allowing data that meets the standards to enter the main dataset. This helps keep the database clean over time and prevents old problems from coming back.
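Checking new records on arrival can be sketched as a small gate in front of the main dataset. The `QualityGate` class below is hypothetical, and its rules are plain Python predicates chosen for the example rather than anything specified in the application.

```python
class QualityGate:
    """Admit a record to the main dataset only if every rule passes."""

    def __init__(self, rules):
        self.rules = rules      # {column: predicate taking the column value}
        self.accepted = []      # Stands in for the main dataset.
        self.rejected = []      # (record, failed columns) kept for review.

    def ingest(self, record):
        failed = [
            column
            for column, check in self.rules.items()
            if not check(record.get(column))
        ]
        if failed:
            self.rejected.append((record, failed))
            return False
        self.accepted.append(record)
        return True

gate = QualityGate({
    "email": lambda v: bool(v) and "@" in v,
    "age": lambda v: isinstance(v, int) and v >= 0,
})
print(gate.ingest({"email": "a@x.com", "age": 30}))
print(gate.ingest({"email": "", "age": 30}))
```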

Advanced Features: Data Similarity and Security

Another major feature is the ability to detect similar datasets, not just exact duplicates. By comparing structure, data types, and even the meaning of columns, the system can spot when two datasets are almost the same or when one is a subset of another. It can suggest merging, deleting, or archiving redundant data, which saves storage space and cuts costs.
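A minimal sketch of near-duplicate detection, assuming schemas are available as column-to-type mappings: Jaccard similarity over (column, type) pairs stands in for the structural comparison, and a set comparison checks whether one dataset's rows are contained in another's. Both functions are illustrative; the application also compares the meaning of columns, which is not modeled here.

```python
def schema_similarity(schema_a, schema_b):
    """Jaccard similarity of two schemas given as {column: type} dicts."""
    a, b = set(schema_a.items()), set(schema_b.items())
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)

def is_row_subset(rows_a, rows_b):
    """Return True if every row of rows_a also appears in rows_b."""
    return set(map(tuple, rows_a)) <= set(map(tuple, rows_b))

schema_a = {"id": "int", "email": "str", "phone": "str"}
schema_b = {"id": "int", "email": "str", "zip": "str"}
print(schema_similarity(schema_a, schema_b))

small = [(1, "a")]
large = [(1, "a"), (2, "b")]
print(is_row_subset(small, large), is_row_subset(large, small))
```

A high similarity score or a subset relationship would only flag candidates; whether to merge, delete, or archive remains a separate decision.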

Security is also built in. The system can recognize sensitive information (like social security numbers or credit card data) and check whether the right protections are in place, such as data masking or tokenization. If it finds sensitive data without enough security, it can alert the right people or recommend fixes.
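To make the sensitive-data check concrete, the sketch below scans a column for values that look like unmasked social security numbers and raises an alert flag if any are found. The patterns, the masking format `***-**-1234`, and the function names are assumptions for this example only.

```python
import re

SSN = re.compile(r"^\d{3}-\d{2}-\d{4}$")
MASKED_SSN = re.compile(r"^[*Xx]{3}-[*Xx]{2}-\d{4}$")

def mask_ssn(value):
    """Mask all but the last four digits of an SSN-formatted string."""
    return "***-**-" + value[-4:] if SSN.match(value) else value

def audit_ssn_column(values):
    """Count exposed and masked SSN-like values and decide whether to alert."""
    strings = [v for v in values if isinstance(v, str)]
    exposed = sum(1 for v in strings if SSN.match(v))
    masked = sum(1 for v in strings if MASKED_SSN.match(v))
    return {"exposed": exposed, "masked": masked, "alert": exposed > 0}

column = ["123-45-6789", "***-**-4321"]
print(audit_ssn_column(column))
print(audit_ssn_column([mask_ssn(v) for v in column]))
```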

Why This System Stands Out

What makes this invention unique is the way it brings together several cutting-edge ideas:

- It automates the full cycle of data cleanup, from finding issues to fixing them, with very little human help needed.

- It uses both the structure and meaning of data to recommend rules, not just surface-level checks.

- It learns from past actions and user input, getting better at its job over time.

- It handles both old data and new data as it comes in, keeping the database clean at all times.

- It helps enforce security and privacy by spotting sensitive data and checking for proper handling.

The result is a system that saves money, reduces risk, and helps companies trust their data — all while making life easier for technical and business users alike.

Conclusion

Managing large amounts of data is one of the hardest jobs for any modern business, but it doesn’t have to be a headache. This patent application describes a smart, automated system that cleans up databases, recommends the right data quality rules, and learns from every decision to get even better over time. By combining advanced machine learning with easy-to-use controls, the invention gives companies a powerful way to keep their data clean, safe, and reliable. As data continues to grow, tools like this will be essential for staying ahead, cutting costs, and making smarter, faster decisions.

To read the full application, go to https://ppubs.uspto.gov/pubwebapp/ and search for publication number 20250217335.