Invented by Tufail Ahmed Khan, Pranjal Goswami, Di Hu, and Changyong Wei; Truist Bank

In today’s world, data is everywhere. But not all data is clean, accurate, or useful. This post explains a new patent application for a system that analyzes data and automatically generates rules to keep it clean and organized. We’ll cover what’s happening in the market, the science and older ideas behind the invention, and finally what makes this new system special and how it works.

Background and Market Context

Businesses today rely on data to make decisions, serve their customers, and grow. Data helps them see patterns, predict trends, and solve problems. But as companies collect more and more data, they face some big challenges. One of the biggest is making sure their data is good – meaning it’s correct, up-to-date, unique, and complete. Without good data, companies can’t trust their reports or analysis. Even worse, bad data can cost them money, waste time, and lead to wrong decisions.

Storing data isn’t free. Companies pay for space on servers, whether in their own buildings or in the cloud. As teams and departments grow, many people end up creating copies of the same data. These duplicates waste storage space and make it harder to know which version is correct. If someone analyzes two versions of the same data, they could get wrong results. And if different data sets have slight differences, it becomes even harder to trust the results.

Another problem is that data comes from many sources and in many forms. Some data is structured, like tables with rows and columns. Other data is unstructured, like emails or text messages. Each type needs different ways to check if it’s good. As data grows, it becomes impractical for people to keep checking everything by hand. This is where computers and smart programs become important. They can look for patterns, find errors, and suggest rules to keep data clean. But traditional tools often need a lot of setup or can’t adapt quickly as data changes.

Companies also face strict rules about how they store and use data, especially private information like social security numbers or credit card details. If they don’t protect this data, they can face fines or lose customer trust. So, there’s a need for systems that can automatically spot sensitive data and make sure it’s handled the right way.

In short, the market needs a smarter, faster, and more automatic way to check data quality, remove duplicates, and make sure only the best data is kept. This patent application addresses these needs by using advanced software to automatically create and manage rules for data quality, which saves time and money and reduces mistakes.

Scientific Rationale and Prior Art

To understand what’s new in this invention, it helps to know how things have been done before. Traditionally, companies used simple rules to check data: for example, making sure a date field isn’t empty, or an email address looks like an email. These rules were usually set up by people who knew the data well. As companies grew, they used software tools to help, but these tools often needed lots of setup and manual work.

Older systems checked for problems like missing values, duplicate rows, or data in the wrong format. Some even used basic statistics, like checking if a number is much bigger or smaller than normal. If a problem was found, the system might alert a person to fix it. But this approach doesn’t scale well if you have hundreds or thousands of tables. It also can’t easily adapt if the data changes or comes from new sources.

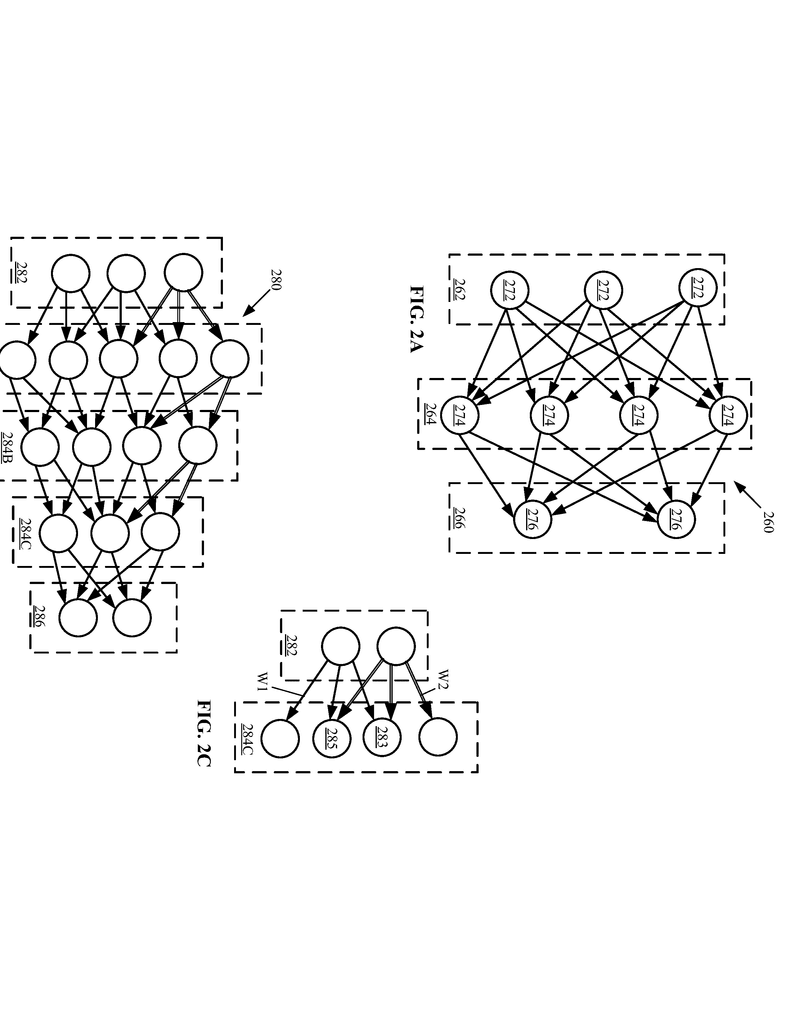

As data management evolved, companies started using more advanced tools like machine learning and artificial intelligence. These tools can learn patterns from data and make predictions. For example, a program can learn what a social security number looks like and spot mistakes even if the format changes a little. Some systems use natural language processing to understand column names and guess what kind of data is inside.

Some companies have also used clustering and similarity analysis to find duplicates or related data sets. This means the system groups similar data together and can suggest merging or removing copies. However, many of these tools still depend on people to check the results and decide what to do.
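To make the idea of similarity analysis concrete, here is a minimal sketch that compares two tables by the overlap of their rows. It uses Jaccard similarity, which is one common choice and not necessarily what any particular product uses; the function name and sample rows are made up for illustration.

```python
def jaccard_similarity(rows_a, rows_b):
    """Share of distinct rows that two tables have in common (0.0 to 1.0)."""
    set_a = {tuple(row) for row in rows_a}
    set_b = {tuple(row) for row in rows_b}
    if not set_a and not set_b:
        return 1.0  # two empty tables are treated as identical
    return len(set_a & set_b) / len(set_a | set_b)

# Hypothetical sample data: two copies of a customer table that have drifted apart.
table_a = [(1, "ann"), (2, "bob"), (3, "cy")]
table_b = [(1, "ann"), (2, "bob"), (4, "di")]
similarity = jaccard_similarity(table_a, table_b)
```

A score near 1.0 would suggest the two tables are copies of each other and candidates for merging, while a person would still decide what to do with the result.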

Previous inventions and products focused on:

- Manual or semi-automatic rule creation

- Fixed rule sets that don’t adapt easily

- Simple checks for data types, missing values, and duplicates

- Basic statistical checks for outliers

- Alerts for people to review, rather than full automation

What was missing was a way for the system to learn from the data itself, create rules automatically, score how confident it is in those rules, and apply them without needing people to step in every time. There was also a need for better ways to handle new data as it’s added, and to keep the rules up-to-date as patterns change.

This is where the new invention stands out. It builds on ideas from machine learning, neural networks, and data profiling, but adds a smart layer that can recommend, score, and apply data quality rules on its own, based on what it learns from real data and past usage patterns.

Invention Description and Key Innovations

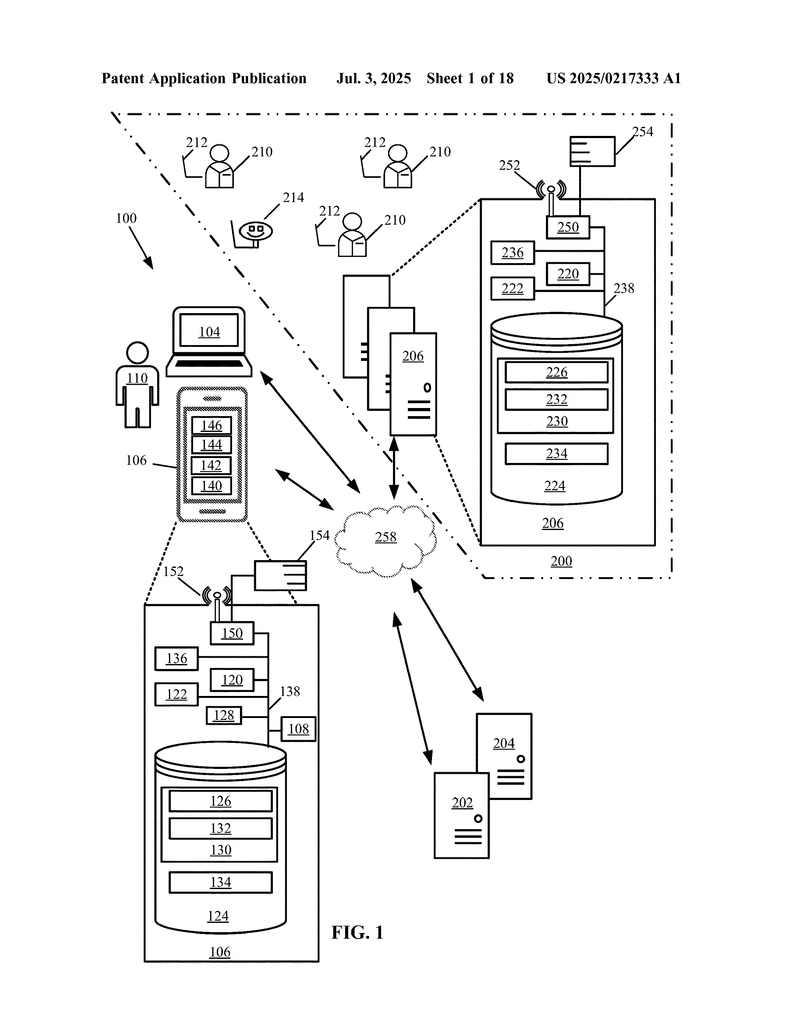

This patent application describes a computing system designed to automate data quality management. The system is made up of a processor (the brain), memory (where it stores programs and rules), and a communication component (so it can talk to other computers and devices). What makes this system special is the way it looks at data, learns patterns, creates rules, and then uses those rules to keep the data clean, all with little or no human help.

Here’s how the invention works in simple terms:

1. Learning from Data:

The system starts by looking at a dataset – a table full of data, with rows and columns. It checks for important qualities like:

- Timeliness (is the data fresh?)

- Accuracy (is it correct?)

- Uniqueness (are there duplicates?)

- Completeness (are any pieces missing?)

- Validity (does it follow the right format?)

- Consistency (does it match other data?)

It does this by running data analysis and profiling. For each column, it checks if values are missing (null), if the data type matches what’s expected (like numbers in a number field), and if there are patterns (like email addresses or phone numbers). It also reviews if some columns are supposed to be unique or if duplicates are allowed.
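As a rough illustration of column profiling, the sketch below computes three of the checks just described for a single column: how many values are missing, which data type dominates, and how unique the values are. The function name and the returned fields are assumptions made for this example, not details taken from the application.

```python
from collections import Counter

def profile_column(values):
    """Summarize one column: null rate, dominant type, and uniqueness."""
    total = len(values)
    # Treat None and empty strings as missing values.
    non_null = [v for v in values if v is not None and v != ""]
    # Count the Python type of each non-null value to find the most common one.
    type_counts = Counter(type(v).__name__ for v in non_null)
    dominant_type = type_counts.most_common(1)[0][0] if type_counts else None
    return {
        "null_rate": (total - len(non_null)) / total if total else 0.0,
        "dominant_type": dominant_type,
        "unique_ratio": len(set(non_null)) / len(non_null) if non_null else 0.0,
    }

# Hypothetical column of account numbers with one missing value.
profile = profile_column([101, 102, None, 104])
```

Here `profile` reports a null rate of 0.25, a dominant type of `int`, and a unique ratio of 1.0, which are the kinds of facts a rule generator could build on.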

The system uses both regular expressions (smart patterns that match things like emails or phone numbers) and machine learning models (which can learn from past data to spot errors or new types). It keeps a library of known data types, but can also learn new ones as it goes.
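The regular-expression half of this can be sketched in a few lines. The small type library below and its simplified patterns are hypothetical stand-ins; a real library would be larger and stricter, and the machine learning side is not shown.

```python
import re

# Hypothetical library of known data types; the patterns are deliberately simple.
KNOWN_TYPES = {
    "email": re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+"),
    "us_phone": re.compile(r"\(?\d{3}\)?[-. ]?\d{3}[-. ]?\d{4}"),
    "ssn": re.compile(r"\d{3}-\d{2}-\d{4}"),
}

def classify_column(values, min_match=0.9):
    """Return (type_name, match_ratio); type_name is None if no type matches well enough."""
    non_null = [str(v) for v in values if v not in (None, "")]
    if not non_null:
        return None, 0.0
    best_name, best_ratio = None, 0.0
    for name, pattern in KNOWN_TYPES.items():
        # Fraction of values that match this pattern from start to end.
        ratio = sum(1 for v in non_null if pattern.fullmatch(v)) / len(non_null)
        if ratio > best_ratio:
            best_name, best_ratio = name, ratio
    if best_ratio >= min_match:
        return best_name, best_ratio
    return None, best_ratio

emails = classify_column(["a@example.com", "b@example.org"])
mixed = classify_column(["123-45-6789", "hello"])
```

The `min_match` cutoff is an assumed parameter: it lets a column count as a known type even when a few values are malformed, which is exactly when a validity rule becomes useful.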

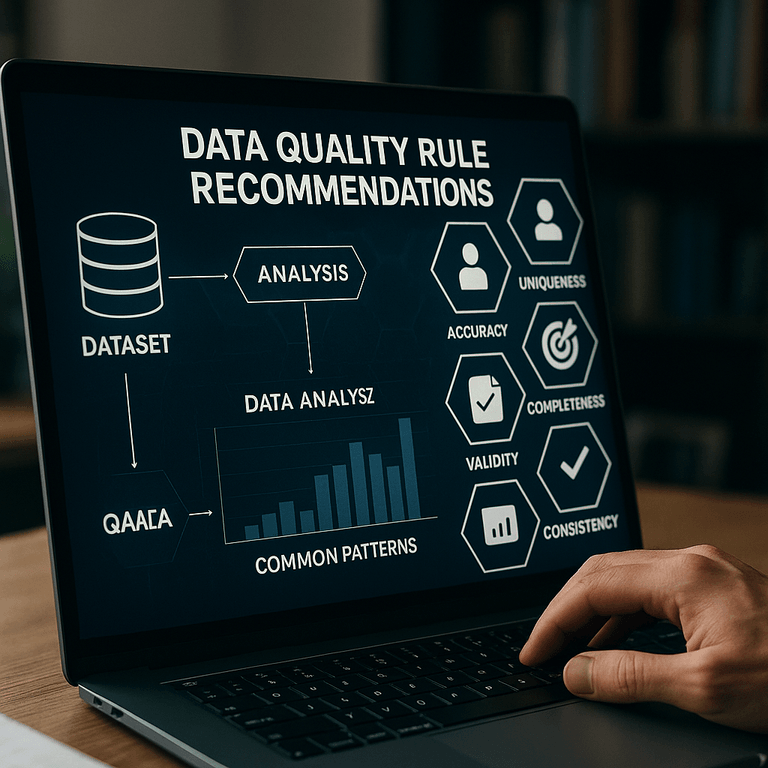

2. Creating and Scoring Rules:

Once it finds common patterns or issues, the system suggests rules to fix or prevent them. For example, if it sees that a column should never have missing values, it creates a rule to block any new data that has a blank in that column. If it notices that a certain field is almost always unique, it can create a rule to stop duplicates.

Here’s the clever part: the system gives each rule a confidence score. This score shows how sure it is that the rule makes sense for the data. If the score is high (above a set threshold, like 75%), the system will go ahead and use the rule. If the score is low, it might skip the rule or ask a person for input. This scoring is key because it means the system can avoid making rules that don’t fit, reducing mistakes and manual work.
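A minimal sketch of rule scoring might look like the following. It assumes, purely for illustration, that a rule's confidence is the fraction of existing values that already satisfy it; the application does not spell out the scoring formula at this level, and the function names are made up. The 0.75 threshold mirrors the 75% example above.

```python
def propose_rules(column, values):
    """Propose candidate rules for one column, each with a confidence in [0, 1]."""
    total = len(values)
    if total == 0:
        return []
    non_null = [v for v in values if v not in (None, "")]
    # Assumed scoring: confidence = share of existing data that already obeys the rule.
    rules = [{"column": column, "rule": "not_null", "confidence": len(non_null) / total}]
    if non_null:
        rules.append({
            "column": column,
            "rule": "unique",
            "confidence": len(set(non_null)) / len(non_null),
        })
    return rules

def select_rules(rules, threshold=0.75):
    """Split rules into those applied automatically and those left for review."""
    auto = [r for r in rules if r["confidence"] > threshold]
    review = [r for r in rules if r["confidence"] <= threshold]
    return auto, review

# Hypothetical column: never empty, but with several repeated values.
rules = propose_rules("branch_code", [1, 1, 1, 2, 2, 3, 4, 5, 6, 7])
auto, review = select_rules(rules)
```

In this example the "not_null" rule scores 1.0 and is applied automatically, while the "unique" rule scores 0.7 and is held back for a person to review.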

3. Applying Rules to New Data:

When new data comes in, the system applies the rules it’s learned. It checks each piece of new data against the rules. If the data fits (for example, it’s a valid email, or it doesn’t duplicate something already there), the system adds it to the main dataset. If not, it rejects the data or puts it aside for review. This keeps the dataset clean and trustworthy as it grows.
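Here is one way that filtering step could look, as a sketch under simple assumptions: three hard-coded rules (an `id` must be present, an `id` must be unique, an `email` must look like an email) stand in for whatever rules the system has learned. The field names and the email pattern are hypothetical.

```python
import re

# Simplified email pattern, used only for this illustration.
EMAIL = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")

def filter_new_rows(existing, incoming):
    """Append rows that pass every rule to `existing`; return rejected rows with reasons."""
    seen_ids = {row["id"] for row in existing}
    rejected = []
    for row in incoming:
        email = row.get("email") or ""
        if row.get("id") is None:
            rejected.append((row, "id is missing"))
        elif row["id"] in seen_ids:
            rejected.append((row, "duplicate id"))
        elif not EMAIL.fullmatch(email):
            rejected.append((row, "invalid email"))
        else:
            existing.append(row)
            seen_ids.add(row["id"])
    return rejected

dataset = [{"id": 1, "email": "ann@example.com"}]
rejected = filter_new_rows(dataset, [
    {"id": 2, "email": "bob@example.com"},  # passes all three rules
    {"id": 1, "email": "dup@example.com"},  # duplicates an existing id
    {"id": 3, "email": "not-an-email"},     # fails the email pattern
])
```

Only the first incoming row joins the dataset; the other two come back in `rejected` along with the reason, ready to be dropped or set aside for review.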

4. Handling Special Data Types and Relationships:

The invention isn’t just about single columns. It can also look for relationships between columns – like if “city” and “state” always go together, or if a “user ID” should always match a “user name.” It can check these relationships for consistency and suggest rules if it finds problems.
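A relationship check like the city and state example can be sketched as a search for values in one column that map to more than one value in another. The function below is an illustrative assumption, not the application's method, and it glosses over real-world cases where the same city name legitimately exists in several states.

```python
from collections import defaultdict

def find_dependency_violations(rows, key, dependent):
    """Return each `key` value that appears with more than one `dependent` value."""
    seen = defaultdict(set)
    for row in rows:
        seen[row[key]].add(row[dependent])
    return {k: sorted(v) for k, v in seen.items() if len(v) > 1}

# Hypothetical rows: one city is paired with two different states.
rows = [
    {"city": "Charlotte", "state": "NC"},
    {"city": "Charlotte", "state": "NC"},
    {"city": "Raleigh", "state": "NC"},
    {"city": "Raleigh", "state": "SC"},
]
violations = find_dependency_violations(rows, "city", "state")
```

An empty result would support a rule such as "city determines state", while each entry in `violations` points at rows worth flagging.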

It also uses statistical checks like mean and standard deviation to find outliers or invalid values. For example, if a “salary” field has a number that’s way higher or lower than normal, it can flag it.
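The mean and standard deviation check can be sketched as a z-score test, where a value is flagged if it sits too many standard deviations from the mean. The threshold and the sample salaries below are assumptions chosen for the example.

```python
from statistics import mean, stdev

def find_outliers(values, z_threshold=3.0):
    """Return values whose distance from the mean exceeds z_threshold standard deviations."""
    if len(values) < 2:
        return []  # a standard deviation needs at least two values
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        return []  # all values are identical, so nothing stands out
    return [v for v in values if abs(v - mu) / sigma > z_threshold]

# Hypothetical salary column with one entry that looks like a typo.
salaries = [50000, 52000, 51000, 49000, 53000, 50500, 51500, 48000, 52500, 900000]
# With only ten values no z-score can reach 3, so this example uses a lower threshold.
outliers = find_outliers(salaries, z_threshold=2.5)
```

One caveat with this approach is that a single extreme value inflates the standard deviation it is measured against, so small samples need a lower threshold or a more robust statistic such as the median.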

For certain types of data, like social security numbers or credit card details, the system can recognize the patterns and suggest extra rules for security, like masking or restricting who can see the data.
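Masking is easy to picture with a short example. The sketch below assumes social security numbers appear in the common `123-45-6789` format and keeps only the last four digits visible; the function name and that display choice are illustrative, not taken from the application.

```python
import re

# Matches the NNN-NN-NNNN format and captures the last four digits.
SSN = re.compile(r"\b\d{3}-\d{2}-(\d{4})\b")

def mask_ssn(text):
    """Replace SSN-formatted values, leaving only the last four digits readable."""
    return SSN.sub(r"***-**-\1", text)

masked = mask_ssn("Customer SSN: 123-45-6789")
```

After this runs, `masked` is `"Customer SSN: ***-**-6789"`. Restricting who can see the unmasked value would be handled separately, by access controls rather than by pattern matching.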

5. Interactive and Automated Workflows:

The system can work in a few ways. In some setups, it can show a user a list of recommended rules and let the user pick which ones to apply. In other setups, it can do everything on its own, applying rules and cleaning up data without waiting for a person. This flexibility means companies can choose how much control they want to keep.

There’s also an interactive portal where users can manage rules, see what’s been applied, check on new data, and review any issues. The system keeps track of what rules are used most often and learns over time, getting better as it sees more data and more feedback.

6. Continuous Improvement:

The system isn’t static. It keeps learning from new data and from how its rules perform. If it sees that certain rules are always accepted and work well, it gets more confident in applying similar rules in the future. If a rule causes problems or is often rejected, it can lower its confidence or stop suggesting it. This feedback loop makes the system smarter and more reliable over time.
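One simple way to picture this feedback loop is an exponential moving average: each time a rule is accepted its confidence moves a little toward 1.0, and each time it is rejected it moves a little toward 0.0. This update formula and the `learning_rate` parameter are assumptions for illustration; the application does not commit to a specific formula here.

```python
def update_confidence(confidence, accepted, learning_rate=0.1):
    """Nudge a rule's confidence toward 1.0 on acceptance and toward 0.0 on rejection."""
    target = 1.0 if accepted else 0.0
    return confidence + learning_rate * (target - confidence)

# Hypothetical rule that starts just below a 0.75 threshold and is accepted three times.
confidence = 0.70
for accepted in [True, True, True]:
    confidence = update_confidence(confidence, accepted)
```

After three acceptances the confidence rises from 0.70 to about 0.78, enough to cross a 0.75 threshold, and a run of rejections would pull it back down in the same gradual way.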

Key Innovations:

- Automatic Rule Generation: The system doesn’t need someone to write every rule by hand. It finds patterns, creates rules, and keeps them up-to-date as data changes.

- Confidence Scoring: By scoring each rule, the system avoids over-applying rules that might not fit. This reduces errors and the need for constant human checking.

- Semantic Classification: The system uses both the column names and the actual data to guess the type of data, even if names are misspelled or unclear, making it more robust.

- Flexible Workflows: Users can choose between full automation or interactive rule selection, fitting different business needs.

- Real-Time Filtering: As new data arrives, it’s checked instantly, keeping the main dataset clean and up-to-date.

- Relationship and Statistical Checks: The system can handle not just single values, but also relationships between fields and advanced statistical checks.

- Feedback and Learning: The system adapts over time, getting better at making and applying rules as it sees more data and gets feedback.
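To show how the semantic classification item above could tolerate misspelled column names, here is a small sketch using fuzzy string matching from Python's standard library. It stands in for the natural language processing the application describes; the name list, the `cutoff` value, and the function name are all hypothetical.

```python
from difflib import get_close_matches

# Hypothetical mapping from well-known column names to semantic types.
CANONICAL_NAMES = {
    "email": "email",
    "email_address": "email",
    "phone_number": "phone",
    "social_security_number": "ssn",
    "ssn": "ssn",
}

def guess_type_from_name(column_name, cutoff=0.8):
    """Guess a semantic type from a column name, tolerating small misspellings."""
    normalized = column_name.strip().lower().replace(" ", "_")
    # Find the single closest known name, if any is similar enough.
    matches = get_close_matches(normalized, list(CANONICAL_NAMES), n=1, cutoff=cutoff)
    return CANONICAL_NAMES[matches[0]] if matches else None

misspelled = guess_type_from_name("Emial Address")
unknown = guess_type_from_name("order_total")
```

The misspelled "Emial Address" still resolves to the email type, while "order_total" matches nothing and returns `None`. A guess from the name alone is weak evidence, which is why it is combined with a look at the actual values.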

This new system means companies can trust their data more, spend less time fixing errors, and save money on storage and manual work. It also helps them keep up with regulations and protect sensitive information automatically.

Conclusion

Managing data quality is a constant challenge for modern businesses. The patent application we explored today shows a big step forward: a system that can look at data, learn what’s important, make rules to keep data clean, and apply them automatically. By mixing machine learning, smart pattern matching, scoring, and flexible workflows, this invention makes data quality management easier, faster, and more reliable. For companies facing growing data and tighter rules, this kind of automated solution isn’t just nice to have – it’s becoming necessary. With systems like this, businesses can trust their data, make better decisions, and focus on what matters most.

To read the full application, go to https://ppubs.uspto.gov/pubwebapp/ and search for 20250217333.