Invented by Katelyn Rose Nye, Gireesha Rao, General Electric Co

Point-of-care alerts are notifications that are sent to healthcare providers when a radiological finding is detected. These alerts can be sent via email, text message, or through an electronic health record (EHR) system. The purpose of these alerts is to provide healthcare providers with real-time information about a patient’s condition, allowing them to make informed decisions about treatment options.

One of the key drivers of the market for point-of-care alerts is the increasing prevalence of chronic diseases such as cancer, heart disease, and diabetes. These diseases require frequent monitoring and follow-up care, which can be time-consuming and costly. Point-of-care alerts can help to streamline the process by providing healthcare providers with timely information about a patient’s condition, allowing them to make more informed decisions about treatment options.

Another driver of the market for point-of-care alerts is the increasing adoption of EHR systems. EHR systems are electronic records of patient health information that are used by healthcare providers to manage patient care. EHR systems can be integrated with point-of-care alert systems, allowing healthcare providers to receive real-time notifications about a patient’s condition.

The market for point-of-care alerts is also being driven by advances in technology. New imaging technologies such as magnetic resonance imaging (MRI) and computed tomography (CT) scans are becoming more widely available, allowing healthcare providers to detect and diagnose diseases earlier. Point-of-care alerts can help to ensure that healthcare providers are aware of these findings and can take appropriate action.

In conclusion, the market for systems and methods for delivering point-of-care alerts for radiological findings is growing rapidly. With the increasing demand for faster and more accurate diagnoses, healthcare providers are turning to technology to improve patient outcomes. As the prevalence of chronic diseases continues to rise and new imaging technologies become more widely available, the demand for point-of-care alerts is likely to continue to grow.

The General Electric Co invention works as follows

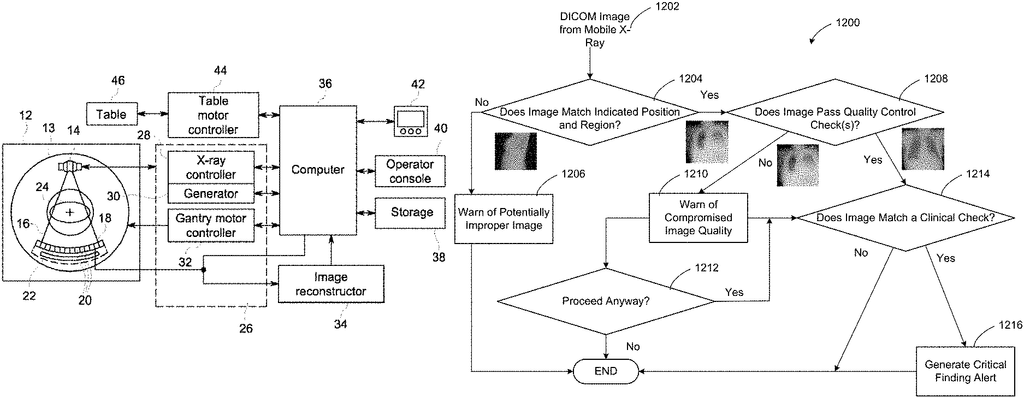

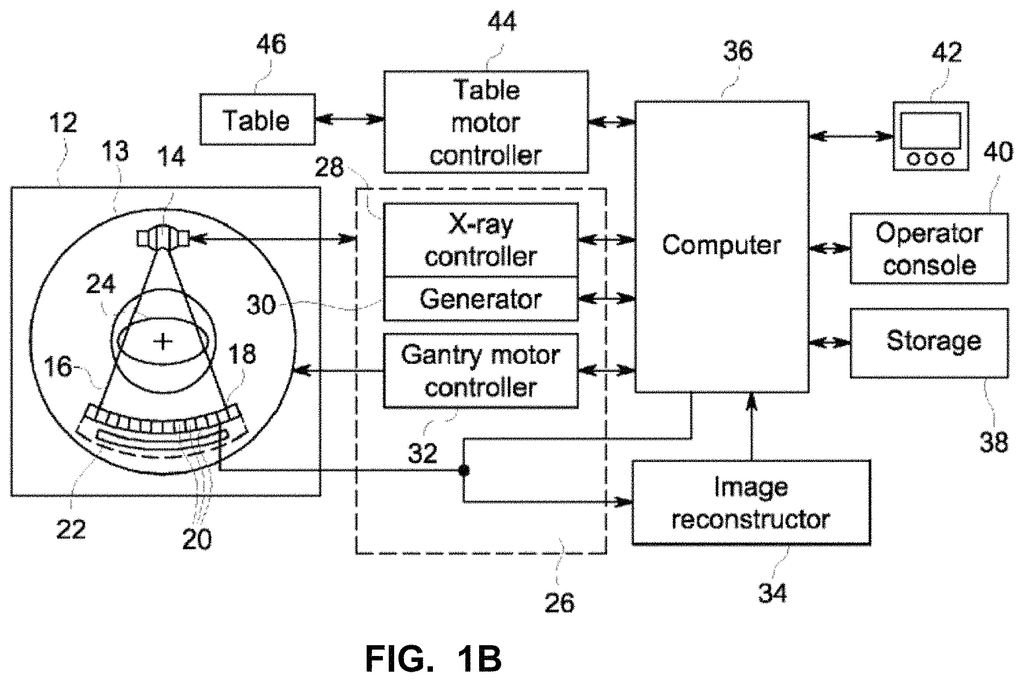

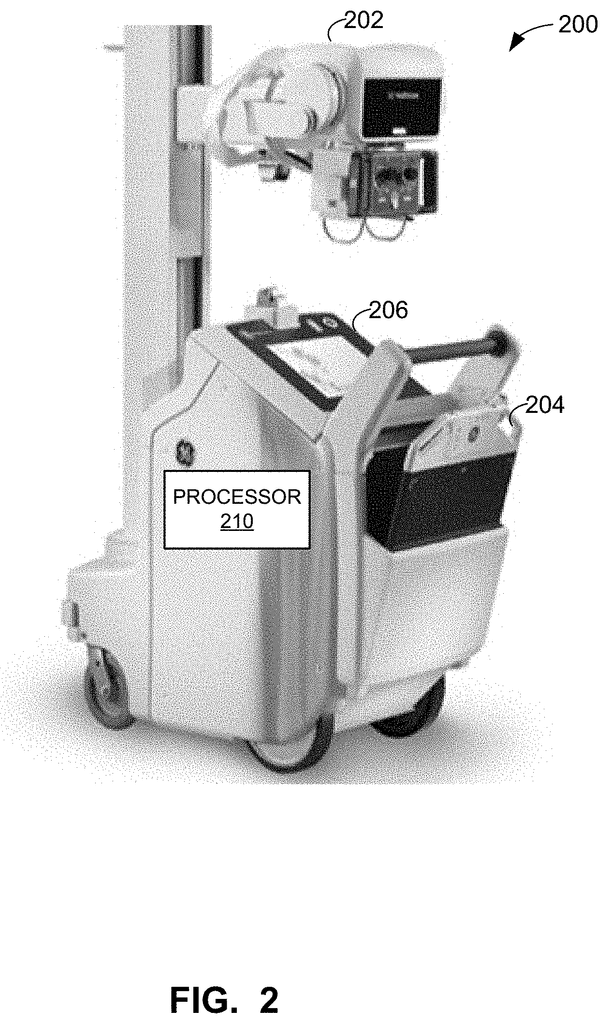

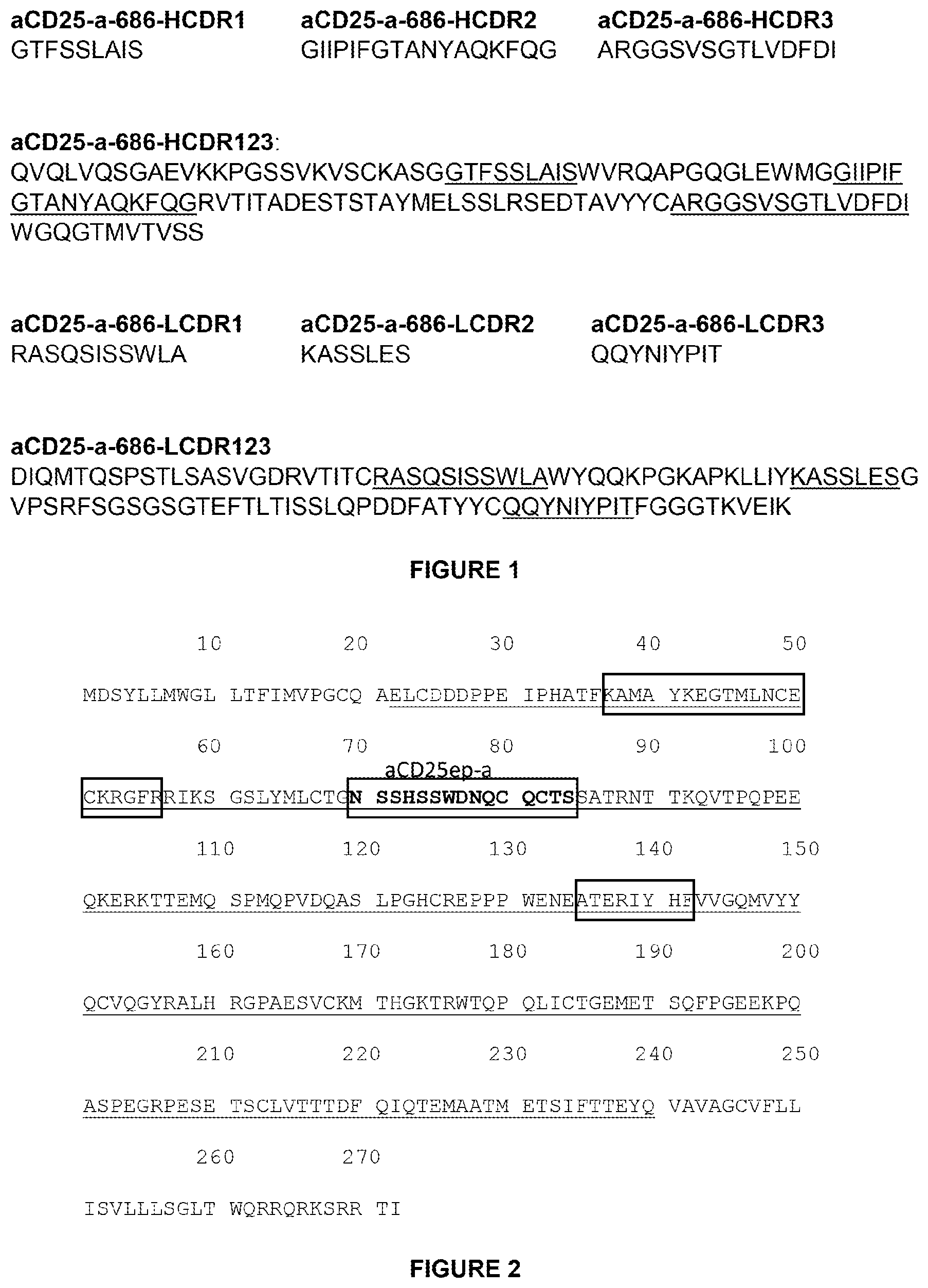

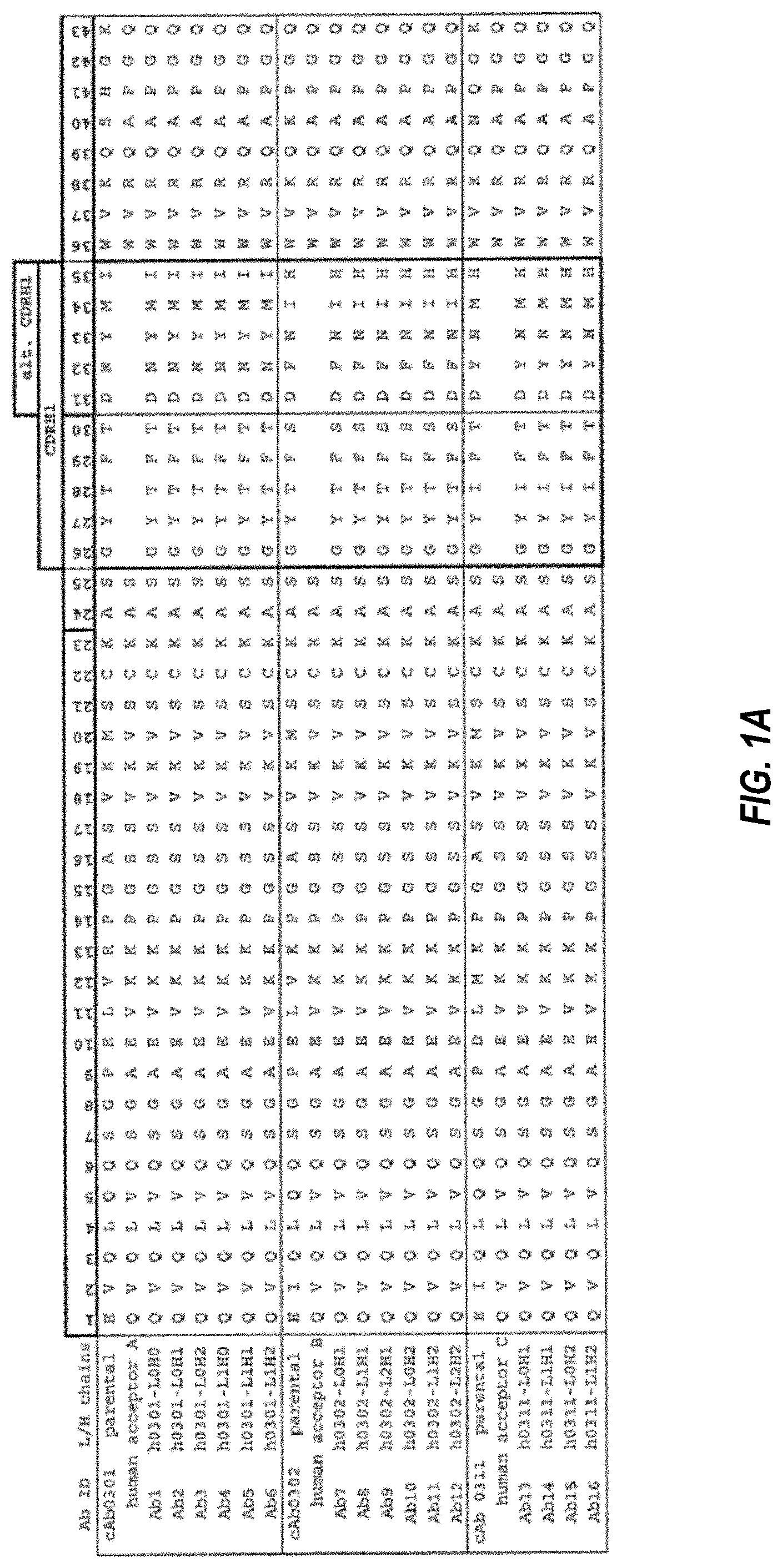

Apparatus, systems, methods, and techniques are disclosed for improving imaging quality control, image processing, identification of findings, and notification at or near the point of care. A processor in an example imaging apparatus is configured to: process first image data using a trained learning network to generate a first analysis of the first image data; identify a clinical finding in the first image data based on the first analysis; compare the first analysis with a second analysis generated from second image data acquired in a second image acquisition; and generate a notification at the imaging apparatus about the clinical finding to trigger a response.

Background for Systems and methods for delivering point-of-care alerts for radiological findings

Healthcare facilities such as hospitals, clinics, and doctors’ offices face many economic, technological, and administrative challenges in providing high-quality care for patients. Emerging accreditation requirements to control and standardize radiation exposure dose usage within a healthcare organization, together with shortages of skilled staff and equipment, can hinder the effective management of the imaging and information systems used for the examination, diagnosis, and treatment of patients.

Healthcare provider consolidations create geographically dispersed hospital networks in which physical contact with systems is too expensive. Referring physicians also want better collaboration and more direct access to data. Physicians have more patients and less time, are often overwhelmed with data, and are eager for assistance.

Healthcare provider (e.g., x-ray technologist, doctor, nurse, etc.) tasks such as image processing and analysis and quality control/quality assurance are difficult, time-consuming tasks that are impractical for humans to accomplish alone.

Certain examples provide apparatus, systems, and methods to improve imaging quality control, image processing, and identification of findings in image data, and to generate notifications at or near the point of care for a patient.

The example processor is to execute instructions to at least: evaluate first image data with respect to an image quality measure; when the first image data satisfies the image quality measure, process the first image data using a trained learning network to generate a first analysis of the first image data; identify a clinical finding in the first image data based on the first analysis; compare the first analysis with a second analysis generated from second image data acquired in a second image acquisition; and trigger a notification to alert a healthcare professional about the clinical finding.

Certain examples provide a computer-readable medium that includes instructions that, when executed, cause a processor to: evaluate first image data with respect to an image quality measure; process the first image data using a trained learning network to generate a first analysis of the first image data; identify a clinical finding in the first image data based on the first analysis; compare the first analysis with a second analysis generated from second image data acquired in a second image acquisition; and trigger a notification to alert a healthcare professional regarding the clinical finding.

Certain examples provide a computer-implemented method that includes evaluating, by executing an instruction with at least one processor, first image data with respect to an image quality measure. When the first image data satisfies the image quality measure, the example method includes processing, by executing an instruction with the at least one processor, the first image data using a trained learning network to generate a first analysis. The example method includes identifying, by executing an instruction with the at least one processor, a clinical finding based on the first analysis of the first image data. The example method includes comparing, by executing an instruction with the at least one processor, the first analysis with a second analysis, where the second analysis is generated from second image data acquired in a second image acquisition. When the comparison identifies a difference between the first and second analyses, the example method includes triggering, by executing an instruction with the at least one processor, a notification at the imaging apparatus to alert a healthcare professional regarding the clinical finding and to prompt a response with respect to the patient associated with the image data.
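The steps of the example method can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the patented implementation: the names `Analysis`, `point_of_care_check`, `quality_ok`, `network`, and `change_threshold` are hypothetical, the quality check and trained network are passed in as plain callables, and the rule for deciding that two analyses "differ" (a different finding or a score change above a threshold) is an assumption. The source also does not say what happens when no earlier analysis exists; the sketch assumes an alert is raised in that case.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass
class Analysis:
    """Hypothetical container for the output of the trained network."""
    finding: Optional[str]  # e.g. "pneumothorax", or None when nothing is detected
    score: float            # assumed model confidence in [0, 1]


def point_of_care_check(
    image: Sequence[float],
    quality_ok: Callable[[Sequence[float]], bool],
    network: Callable[[Sequence[float]], Analysis],
    prior: Optional[Analysis],
    change_threshold: float = 0.1,
) -> Optional[str]:
    """Return a notification message, or None when no alert is raised."""
    # Step 1: evaluate the image against the image quality measure.
    if not quality_ok(image):
        return None  # assumption: no analysis is run on a failed image

    # Step 2: process the image with the trained network.
    current = network(image)

    # Step 3: identify a clinical finding from the analysis.
    if current.finding is None:
        return None

    # Step 4: compare with the analysis from the other acquisition.
    unchanged = (
        prior is not None
        and prior.finding == current.finding
        and abs(current.score - prior.score) < change_threshold
    )
    if unchanged:
        return None

    # Step 5: trigger the notification for the healthcare professional.
    return f"ALERT: {current.finding} (score {current.score:.2f})"
```

Passing the quality check and the network as callables keeps the sketch independent of any particular imaging library or model format; in a real device these would be the image quality checker and the deployed learning network.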

The following detailed description refers to the accompanying drawings, which form a part of this disclosure and in which are shown specific examples that can be practiced. These examples are described in enough detail for one skilled in the art to practice the subject matter. It is also to be understood that other examples can be used and that logical, mechanical, electrical, and other changes may be made without departing from the scope of this disclosure. The detailed description is intended to illustrate an example implementation and does not limit the scope of this disclosure. Features from different parts of this description can be combined to form new aspects of the subject matter.

When introducing elements of various embodiments, the articles “a,” “an,” “the,” and “said” are intended to mean that there are one or more of the elements. The terms “comprising” and “including” are intended to be inclusive and mean that there may be additional elements other than those listed.

While certain examples are described in the context of medical or healthcare systems, other examples can be implemented outside the medical setting. For example, certain examples can be applied to non-medical imaging such as non-destructive testing and explosive detection.

I. Overview

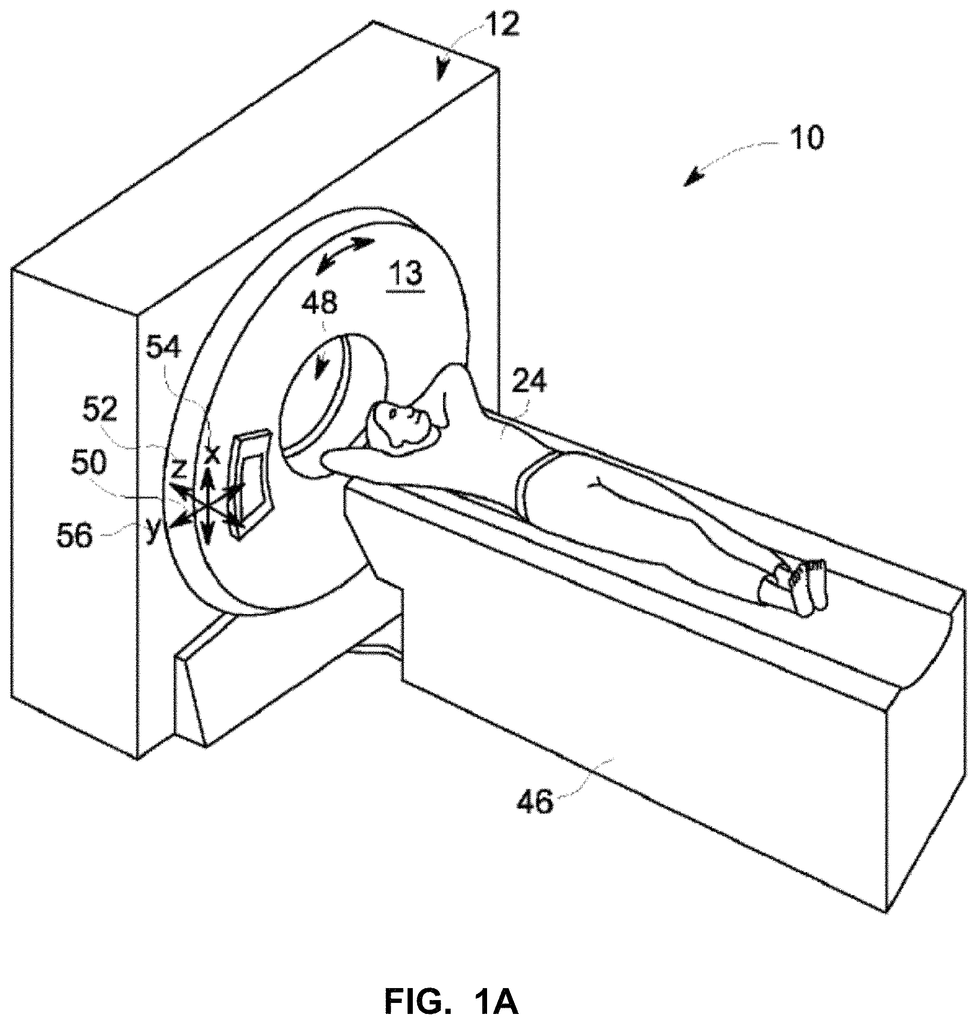

Imaging devices (e.g., gamma camera, positron emission tomography (PET) scanner, computed tomography (CT) scanner, X-ray machine, fluoroscopy machine, magnetic resonance (MR) imaging machine, ultrasound scanner, etc.) generate medical images (e.g., native Digital Imaging and Communications in Medicine (DICOM) images) that represent parts of the body (e.g., organs, tissues, etc.) to diagnose and/or treat diseases. Medical images may include volumetric data, including voxels associated with the part of the body captured in the image. A clinician can use medical image visualization software to annotate, measure, and/or report on functional or anatomical characteristics at various locations in a medical image. For example, a clinician might use the software to identify regions of interest in a medical image.

Acquisition and processing of medical image data play an important role in diagnosing and treating patients in a healthcare setting. A medical imaging workflow and the devices involved in it can be configured, monitored, and updated throughout their operation. Deep learning and other machine learning technology can be used to help configure, monitor, and update medical imaging workflows and devices.

Certain examples provide and/or facilitate improved imaging devices that improve diagnostic accuracy and/or coverage. Certain examples facilitate improved image reconstruction and further processing to provide improved diagnostic accuracy.

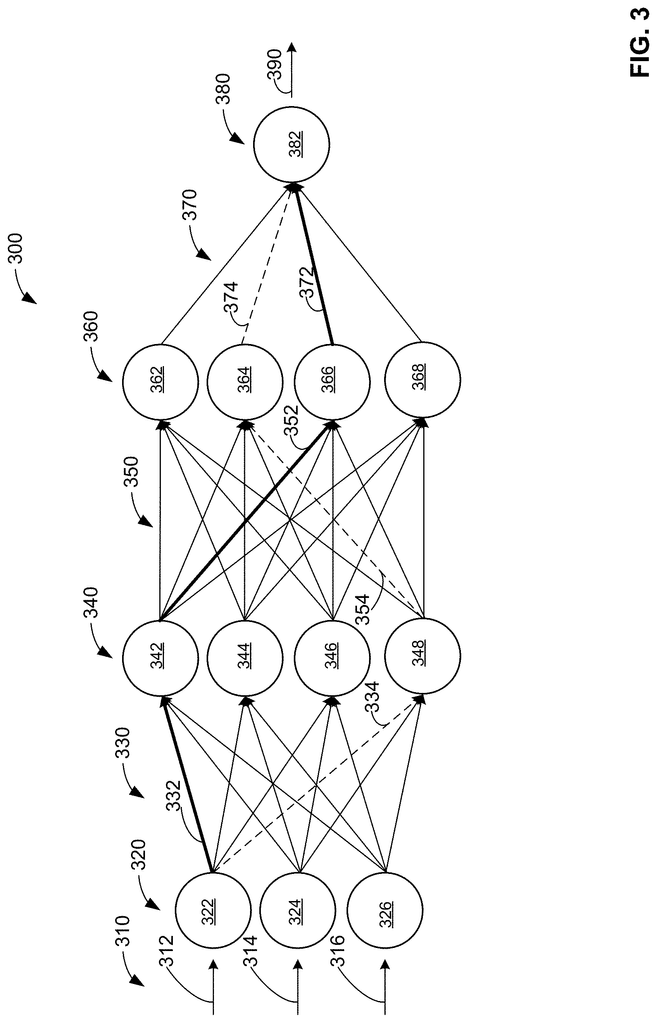

Machine learning techniques, whether deep learning networks or other experiential/observational learning systems, can be used to locate an object in an image, understand speech and convert speech into text, and improve the relevance of search engine results, for example. Deep learning is a subset of machine learning that uses multiple layers of processing, including linear and non-linear transformations, to model high-level abstractions in data. Many machine learning systems are seeded with initial features and/or network weights that are then modified through learning and updating of the network. A deep learning network, however, trains itself to identify “good” features for analysis. Machines that use deep learning techniques can process raw data better than machines that use conventional machine learning techniques. Different layers of evaluation or abstraction make it easier to examine data for groups of highly correlated values or distinctive themes.

Throughout the specification and claims, the following terms take the meanings given here unless the context clearly dictates otherwise. The term “deep learning” refers to a machine learning technique that uses multiple data processing layers to recognize various structures in data sets and classify the data sets with high accuracy. A deep learning network can be a training network (e.g., a training network model or device) that learns patterns from a plurality of inputs and outputs. A deep learning network can also be a deployed network (e.g., a deployed network model or device) that is generated from the training network and provides an output in response to an input.

The term “supervised learning” refers to a deep learning training method in which the machine is given already classified data from human sources. The term “unsupervised learning” refers to a deep learning training method in which the machine is not given already classified data, which makes the machine useful for abnormality detection. The term “semi-supervised learning” refers to a deep learning training method in which the machine is given a small amount of classified data from human sources compared with a larger amount of unclassified data available to the machine.

The term “representation learning” refers to a set of methods for transforming raw data into a representation, or feature, that can be used in machine learning tasks. In supervised learning, features are learned from labeled input.

The term “convolutional neural networks” or “CNNs” refers to biologically inspired networks of interconnected data used in deep learning to detect, segment, and recognize pertinent objects and regions in datasets. CNNs evaluate raw data in the form of multiple arrays, breaking the data down into a series of stages and examining it for learned features.
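As a rough illustration of one such stage, the sketch below slides a small kernel over a two-dimensional array and then applies a rectifier. It is a teaching example only, assuming plain nested lists and a hand-written kernel; the function names `conv2d_valid` and `relu` are hypothetical, and in a trained CNN the kernel values would be learned rather than fixed by hand.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    As in most deep learning libraries, the kernel is not flipped, so this is
    technically a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map):
    """Keep positive responses and zero out the rest."""
    return [[max(0.0, value) for value in row] for row in feature_map]


# A 3x3 image with a bright right-hand column, and a kernel that
# responds to dark-to-bright transitions from left to right.
image = [
    [0, 0, 1],
    [0, 0, 1],
    [0, 0, 1],
]
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
features = relu(conv2d_valid(image, vertical_edge))
```

The resulting feature map is large only where the edge sits under the kernel, which is the sense in which each stage examines the data for a feature.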

The term “transfer learning” refers to a process in which a machine stores the information used in solving one problem, correctly or incorrectly, in order to solve another problem of the same or a similar nature. Transfer learning is also known as “inductive learning.” Transfer learning can make use of data from previous tasks, for example.

The term “active learning” refers to a process of machine learning in which the machine selects the examples for which it receives training data, rather than passively receiving examples chosen by an external entity. For example, as a machine learns, it can be allowed to select the examples it determines will be most useful for learning, rather than relying only on an external human expert or external system to identify and provide examples.
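One common way for a machine to choose its own examples is uncertainty sampling, sketched below. The source does not name a selection strategy, so this choice is an assumption, as are the function name `select_most_uncertain` and the idea that the model reports a probability for a binary finding.

```python
def select_most_uncertain(probabilities, k):
    """Pick the k unlabeled examples whose predictions are closest to 0.5.

    `probabilities` maps an example id to the model's predicted probability
    that a (binary) finding is present. Predictions near 0.5 are the ones the
    model is least sure about, so labels for them are assumed most useful.
    """
    ranked = sorted(probabilities, key=lambda ex: abs(probabilities[ex] - 0.5))
    return ranked[:k]


# Hypothetical predictions for four unlabeled images.
predictions = {"img_a": 0.95, "img_b": 0.52, "img_c": 0.10, "img_d": 0.40}
to_label = select_most_uncertain(predictions, k=2)
```

The selected examples would then be sent to a human expert for labeling and added to the training data.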
