Invented by Anna Petrovskaya, Peter Varvak, Intel Corp

AR preparation involves creating digital content that can be overlaid onto the real world. This includes 3D models, animations, and other visual elements. There are a variety of software tools available for AR preparation, ranging from simple drag-and-drop interfaces to complex programming environments. Some popular AR preparation tools include Unity, Vuforia, and ARKit.

AR processing involves the real-time tracking and rendering of digital content onto the real world. This requires sophisticated algorithms and hardware, including cameras, sensors, and processors. A number of companies work on AR processing hardware and software, such as Magic Leap, Meta, and Microsoft (maker of the HoloLens).

AR application involves integrating AR technology into specific use cases, such as gaming, education, or healthcare. This requires a deep understanding of the target audience and the specific needs of the application. There are a growing number of companies that specialize in AR application development, such as Niantic, Snap, and Google.

The market for systems and methods for AR preparation, processing, and application is expected to grow significantly in the coming years. According to a report by MarketsandMarkets, the global AR market is expected to reach $72.7 billion by 2024, with a compound annual growth rate of 46.6% from 2019 to 2024.
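As a quick sanity check on the two figures quoted above, the compound-growth formula can be inverted to see what 2019 market size they imply. The sketch below is plain arithmetic on the quoted numbers; the function name `implied_base` is illustrative, and the result is derived here rather than taken from the report.

```python
def implied_base(final_value: float, cagr: float, years: int) -> float:
    """Invert final = base * (1 + cagr) ** years to recover the base value."""
    return final_value / (1.0 + cagr) ** years


# Figures quoted in the text: $72.7 billion by 2024, 46.6% CAGR over 2019-2024.
base_2019 = implied_base(72.7, 0.466, 5)
print(f"Implied 2019 market size: ${base_2019:.1f} billion")
```

Taken at face value, the quoted figures imply a 2019 base of roughly $10.7 billion.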

One of the key drivers of this growth is the increasing adoption of AR in industries such as retail, healthcare, and manufacturing. For example, AR can be used to create virtual showrooms for retail products, allowing customers to see how products would look in their homes before making a purchase. In healthcare, AR can be used to create virtual simulations for medical training and surgery planning. In manufacturing, AR can be used to create virtual assembly instructions and training materials.

Another driver of growth is the increasing availability of AR hardware, such as smart glasses and head-mounted displays. These devices allow for a more immersive and interactive AR experience, and are becoming more affordable and accessible to consumers and businesses alike.

Overall, the market for systems and methods for AR preparation, processing, and application is poised for significant growth in the coming years. As AR technology continues to evolve and become more sophisticated, we can expect to see a wide range of new and innovative applications emerge across a variety of industries.

The Intel Corp invention works as follows

Various disclosed embodiments provide methods and systems for acquiring depth information about an environment, for example for use in augmented reality applications. A user may passively scan a device (e.g., a tablet or mobile phone) around the environment, acquiring depth data for various regions. The system may integrate these scans into an internal 3-D model. The model can then be combined with subsequent data acquisitions to determine the device’s position and orientation in the environment with high fidelity. In some embodiments, these determinations can be made in real time or near real time. Using this high-fidelity position and orientation determination, various augmented reality applications become possible, either on the same device that acquired the depth data or on a different device.
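The summary does not say how depth scans are turned into 3-D data, so the following is only a minimal sketch of one common first step: back-projecting each depth pixel into a 3-D point using a pinhole camera model. The intrinsics `fx`, `fy`, `cx`, `cy`, the function name `back_project`, and the tiny sample frame are all assumptions made for illustration and are not taken from the patent.

```python
from typing import List, Optional, Tuple

Point3D = Tuple[float, float, float]


def back_project(depth: List[List[Optional[float]]],
                 fx: float, fy: float, cx: float, cy: float) -> List[Point3D]:
    """Convert a depth frame (metres per pixel) into camera-frame 3D points.

    Assumes a pinhole camera: fx, fy are focal lengths in pixels and
    (cx, cy) is the principal point. Pixels with no reading are None.
    """
    points: List[Point3D] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z is None or z <= 0:
                continue  # skip invalid depth readings
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Tiny hypothetical 2x2 depth frame with one missing reading.
frame = [[2.0, 2.0],
         [None, 4.0]]
cloud = back_project(frame, fx=1.0, fy=1.0, cx=0.5, cy=0.5)
print(cloud)
```

Points produced this way are expressed in the camera frame; merging several scans into one model additionally requires knowing the device pose for each scan.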

Background for Systems and Methods for Augmented Reality Preparation, Processing, and Application

Despite many exciting new digital technologies being realized, much of the digital universe’s power remains largely disconnected from physical reality. The Internet, for example, provides easy access to huge amounts of information. However, this information is often analyzed and evaluated without any direct reference to real-world objects and people. Real-world environments are reflected only indirectly in digital data, and digital data is not readily suited to interacting with real-world environments. A user who wants to bridge the digital and real worlds will often need to convert real-world data into digital form and vice versa. Measurements are taken by hand before furniture is bought. Chat interfaces are awkward and force participants to use artificial greetings and protocols that are not used in real life. Instead of technology adapting to end users, end users adapt to technology, developing a facility with keyboards, mice, joysticks, touchscreens, and other unnatural interfaces.

End users feel the digital-reality gap, but developers feel it as well. Video game developers have no ready means of determining a user’s real-world environment, so they design games to be played only within the artificial confines of the user’s device. Even sophisticated movie studios often resort to placing actors in uncomfortable suits to capture real-world performances for later manipulation at a digital console.

Although some efforts have been made to provide an “augmented reality” experience, they typically require that the real-world environment again be adapted to the technology’s needs (rather than vice versa). These applications might require that a beacon, pattern, texture, or physical marker be placed on a surface so that an imaging device can recognize it and project synthetic objects onto it. Like the approaches described above, this places additional demands on the real world rather than having the technology adapt to it.

Ideally, users and developers would not have to adapt to their technology, but could instead use it passively, or only semi-actively, in their real-world activities. There is therefore a need for systems and methods that make it easier to apply digital resources in real-world situations.

Various disclosed embodiments provide methods and systems for acquiring depth information about an environment, for example for use in augmented reality applications. A user may passively scan a device (e.g., a tablet or mobile phone) around the environment, acquiring depth data for various regions. The system may integrate these scans into an internal 3-D model. The model can then be combined with subsequent data acquisitions to determine the device’s position and orientation in the environment with high fidelity. In some embodiments, these determinations can be made in real time or near real time. Using this high-fidelity position and orientation determination, various augmented reality applications become possible, either on the same device that acquired the depth data or on a different one.

Using various of the disclosed embodiments, virtual objects can appear to persist in space and time just like real objects. For example, a user rotating 360 degrees in a room may find a real-world sofa and a virtual character at the same place on each rotation. To display these objects faithfully, different embodiments determine: (a) how the camera (e.g., a depth-capturing camera such as an RGBD sensor) is positioned relative to the model or some static reference coordinate system (“world coordinates”); and (b) the 3D shape and dimensions of the surrounding environment, e.g., so that shadows and occlusions (of virtual objects by real objects, or vice versa) can be rendered. Problem (b) is more difficult than problem (a) and generally assumes that (a) has already been solved. In the past, the movie industry has “solved” both (a) and (b) by having graphics artists embed virtual content in a previously captured video stream. The artists must manually modify the movie frame by frame, inserting images of virtual objects at the right positions. They must also draw shadows and anticipate the occluded areas of virtual objects, which can delay the development process by months. Some of the disclosed embodiments, by contrast, can achieve the same or similar effects in real time on a video stream as it is received.
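To make the occlusion part of problem (b) concrete, here is a minimal per-pixel depth test, assuming that a depth value is available for both the real scene and the rendered virtual object at every pixel. This is a generic compositing sketch, not the method claimed in the patent; the function name `composite` and the use of string labels in place of colour values are assumptions made for readability.

```python
from typing import List, Optional

Pixel = str  # stand-in for a colour value, to keep the example readable


def composite(real_rgb: List[List[Pixel]],
              real_depth: List[List[Optional[float]]],
              virt_rgb: List[List[Pixel]],
              virt_depth: List[List[Optional[float]]]) -> List[List[Pixel]]:
    """Overlay a virtual render on a real image, respecting occlusion.

    A virtual pixel is shown only where the virtual object is present
    (depth is not None) and closer to the camera than the real surface.
    """
    out: List[List[Pixel]] = []
    for r_row, rd_row, v_row, vd_row in zip(real_rgb, real_depth,
                                            virt_rgb, virt_depth):
        out_row: List[Pixel] = []
        for r, rd, v, vd in zip(r_row, rd_row, v_row, vd_row):
            virtual_visible = vd is not None and (rd is None or vd < rd)
            out_row.append(v if virtual_visible else r)
        out.append(out_row)
    return out


# One image row: a sofa at 1.5 m, then a wall at 3.0 m.
real = [["sofa", "sofa", "wall"]]
real_d = [[1.5, 1.5, 3.0]]
# A virtual chair at 2.0 m covering the first and last pixel.
virt = [["chair", "chair", "chair"]]
virt_d = [[2.0, None, 2.0]]

print(composite(real, real_d, virt, virt_d))
```

In this example the chair is hidden where the nearer sofa is in front of it and visible only against the farther wall.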

Various examples of the disclosed techniques will now be described in further detail. The following description provides specific details for a thorough understanding of these examples. However, a practitioner skilled in the relevant art will recognize that many of the techniques described herein can be practiced without these details. The techniques may also include other obvious features that are not described in detail herein. Some well-known structures and functions may not be shown or described in detail, to avoid obscuring the relevant description.

The terminology below should be understood in the broadest possible way, even though it is used in conjunction with specific examples of the embodiments. Some terms may be highlighted below. However, any terminology that is intended to be restricted in interpretation will be explicitly and overtly defined in this section.

1. Overview-Example System Topology

Various embodiments present systems and methods to generate virtual objects in an augmented reality context (e.g., where a user holds a tablet, headpiece, head-mounted display, or other device capable of capturing an image and presenting it on a screen, or capable of rendering in a user’s field of view or projecting into a user’s eyes, but more generally, in any situation wherein virtual images may be presented in a real-world context). These virtual objects can persist in space and time in a manner similar to real objects. For example, as the user scans a room, a virtual object may reappear in the user’s field of view at the same position and orientation, just as a real-world object would.

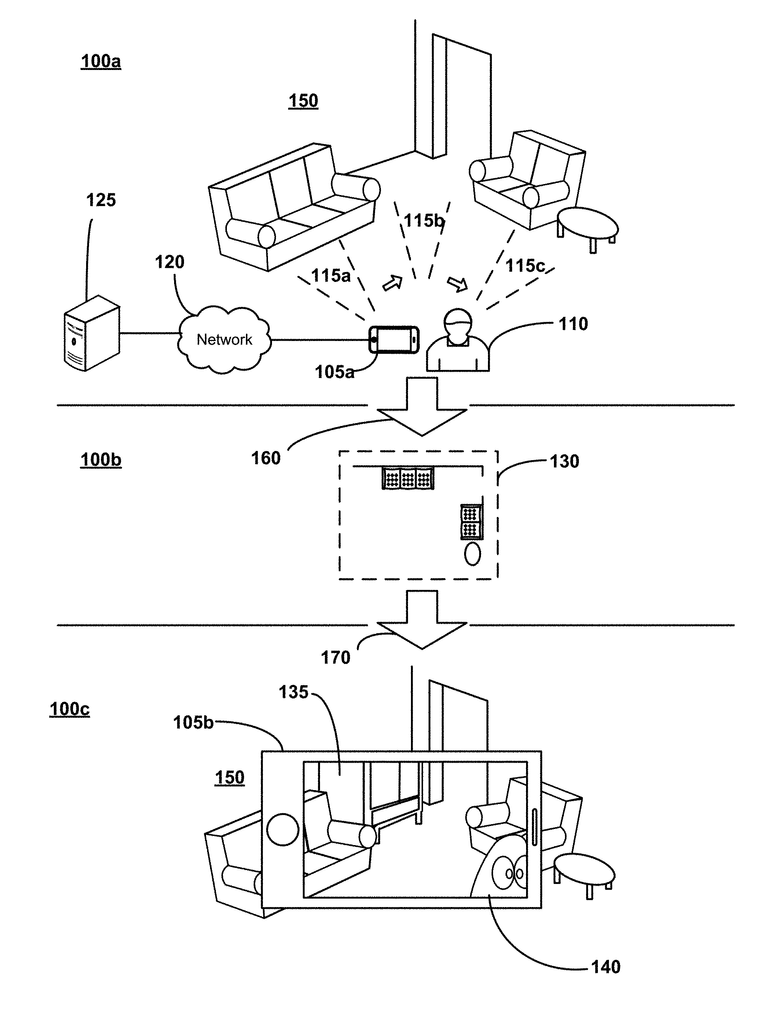

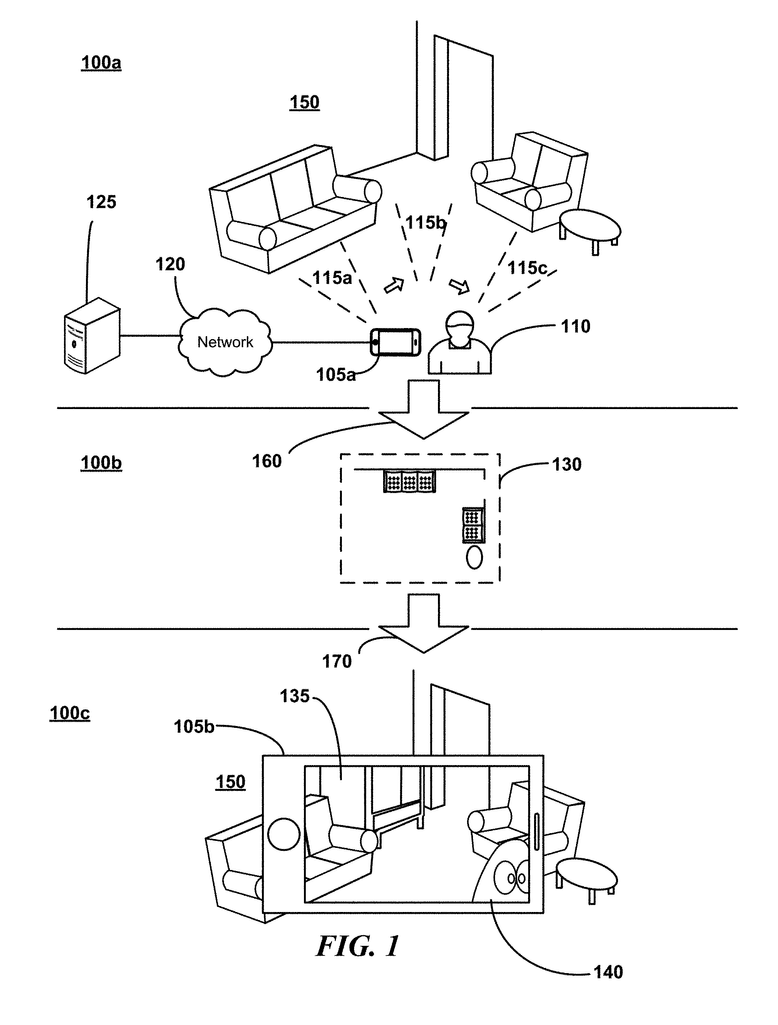

An AR (augmented reality) device 105b (which may also be the capture device) may then use 170 the model 130, together with incoming depth data, to present an AR experience 100c. For example, a user might hold the AR device 105b in view of the environment 150. The AR system may display real-time images of the environment 150 on the AR device 105b and supplement those images with virtual elements (some embodiments may convert the real-time images into a textured mesh, as described herein). Here, a virtual piece of furniture 135 appears behind a real-world couch. Similarly, a virtual character 140 is presented in the scene; rotating the device to the right and downward may bring the character fully into view. The AR device 105b may have more than one camera.

The model 130 can also be used in a standalone capacity, such as to create a virtual environment that is similar to the real world or to measure the environment in real time. Although the model 130 is shown here in a home setting, it may represent any other environment (e.g., an office or an industrial environment), or even the inside of an animal’s body.

To display virtual objects (such as the virtual piece of furniture 135 or the virtual character 140) accurately to the user, certain embodiments establish (a) how the camera(s) on the AR device 105b are positioned relative to the model 130, or an object, or some static reference coordinate system (referred to herein as “world coordinates”). Some embodiments also establish (b) the 3D shape of the environment, e.g., so that shadows can be rendered properly (as shown for the virtual piece of furniture 135 in FIG. 1). Problem (a) is also known as camera localization or pose estimation, i.e., determining the position and orientation of the camera in 3D space.
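A camera pose in the sense of problem (a) is commonly represented as a rotation plus a translation that maps points between camera coordinates and world coordinates. The sketch below assumes that representation; the helper names (`yaw_rotation`, `camera_to_world`, `world_to_camera`), the choice of y as the vertical axis, and the sample numbers are illustrative assumptions rather than details from the patent.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = List[List[float]]


def yaw_rotation(angle_rad: float) -> Mat3:
    """Rotation matrix for a turn about the vertical (y) axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, 0.0, s],
            [0.0, 1.0, 0.0],
            [-s, 0.0, c]]


def camera_to_world(p: Vec3, rot: Mat3, t: Vec3) -> Vec3:
    """world = rot * p + t, where (rot, t) is the camera pose."""
    return tuple(sum(rot[i][j] * p[j] for j in range(3)) + t[i]
                 for i in range(3))


def world_to_camera(p: Vec3, rot: Mat3, t: Vec3) -> Vec3:
    """Inverse mapping: camera = rot^T * (p - t)."""
    d = [p[j] - t[j] for j in range(3)]
    return tuple(sum(rot[j][i] * d[j] for j in range(3)) for i in range(3))


# Hypothetical pose: camera at (1, 0, 2) in world coordinates, turned 90 degrees.
rot = yaw_rotation(math.pi / 2)
t = (1.0, 0.0, 2.0)

# A point one metre straight ahead of the camera (along its z axis).
p_world = camera_to_world((0.0, 0.0, 1.0), rot, t)
print([round(c, 6) for c in p_world])
```

With the pose known, a virtual object anchored at fixed world coordinates can be mapped back into the camera frame with `world_to_camera` on every new frame, which is what lets it appear to stay in place as the device moves.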

Various of the disclosed embodiments provide superior methods for determining how the camera (eyes) is positioned relative to the model or some static reference coordinate system (“world coordinates”). These embodiments provide superior localization accuracy, which mitigates virtual object jitter and misplacement, undesirable artifacts that may destroy the illusion of a virtual object being positioned in real space. Prior art devices often rely on special markers to avoid these issues. However, those markers must be embedded in the environment and can be cumbersome to use. They also limit the range of AR functions that can be performed.

In contrast to existing AR solutions, many of the disclosed embodiments offer, e.g.: operation in real time; operation without user intervention; display of virtual objects in the correct location and without jitter; no modification of the environment or other cumbersome preparations; presentation to a user in an easy-to-use package (e.g., smart phone, tablet, or goggles); and manufacture at affordable prices. One will recognize that not all embodiments have all of these features.

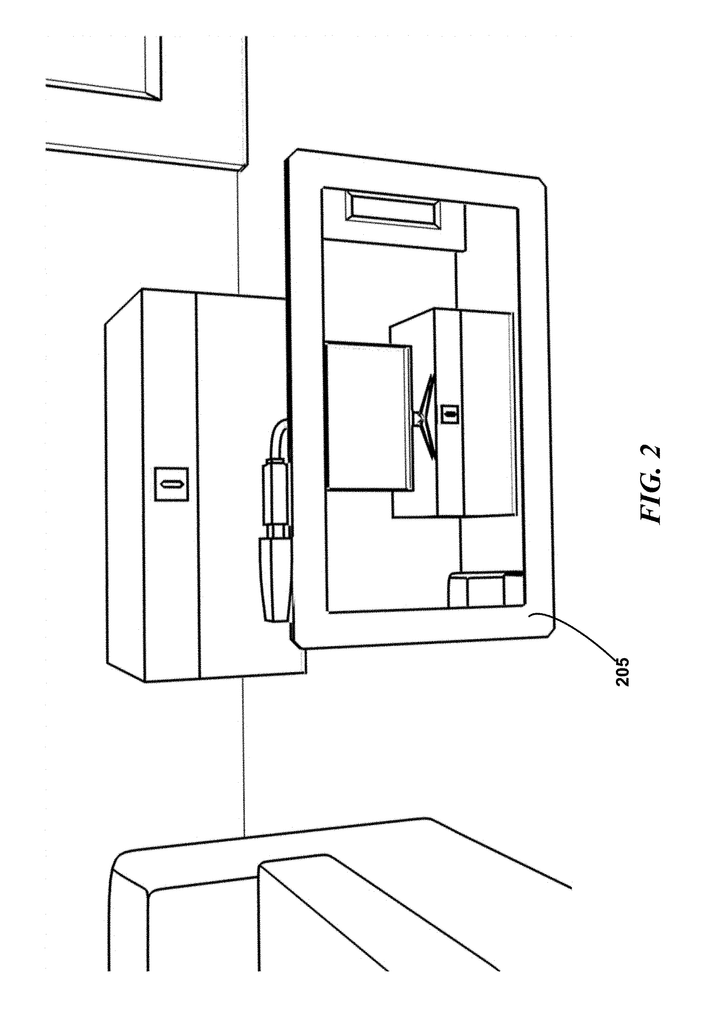

FIG. 2 shows an example of an embodiment in operation. It depicts, in AR device 205, a virtual TV playing a home video atop a piece of real-world furniture. The TV is not physically present in the real world, but a user viewing their surroundings through AR device 205 may not be able to distinguish between real and virtual objects.

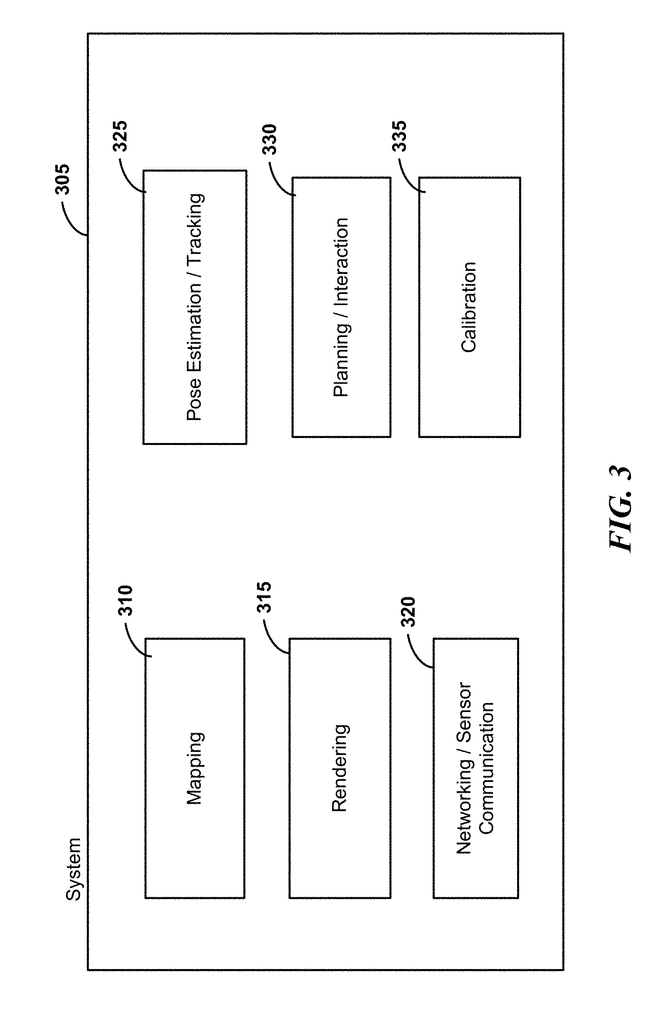

The figure above shows operational components of a system, which may be organized into sub-systems. Although they are shown here as parts of a single overall system 305, the components may be separated into different computer systems (e.g., servers in a “cloud”). For example, one system may comprise a capture device. A second system might receive depth frames and position data from the capture device and implement a mapping component 310 in order to create a model. A third system may implement the remaining components. One will recognize other divisions of functionality. Some embodiments include only the functions and/or structures associated with a single module.

Also, although tracking is described herein with reference to a user device, one will recognize that some embodiments may implement applications using data captured and processed with alternative form factors. As one example, depth sensors or other sensors may be placed around a user’s home and a device for projecting images onto a contact lens provided. The captured data may then be used to create an AR experience for the user by projecting the appropriate image onto the contact lens. Similarly, third-party devices may capture depth frames of the environment while the user’s personal device performs the AR functions. Components may be discussed together herein to facilitate understanding, but the described functionality need not appear together in every functional division or form factor.

2.

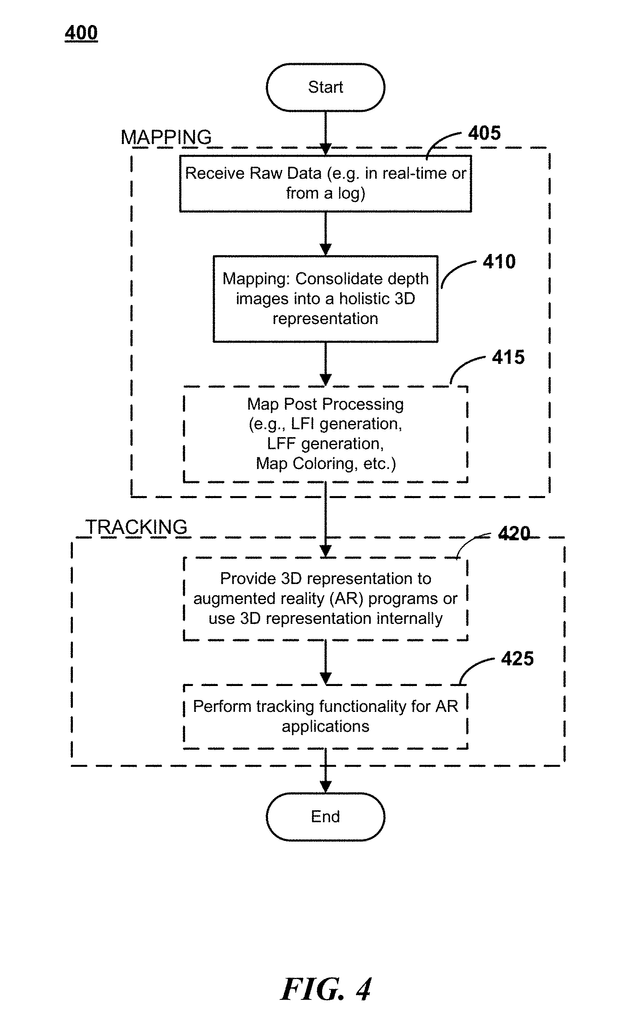

Many of the disclosed features are found in the system operations, which may appear as software, firmware, hardware, or a combination of two or more of these (e.g., the implementation could take place on-chip). An example of these operations is depicted in FIG. 4. At block 405, the system may receive the raw depth frame, image frame, and/or capture device orientation data. The orientation data could include inertial measurement unit data such as acceleration, gyroscopic, or magnetometer data. This data may be obtained from a log previously created by a capture device, or directly from the capture device. A user may scan the environment by walking through it with the capture device. Variations in which the device moves or rotates itself (e.g., where it is attached to a robot or animal) will also be recognized. With each capture, the capture device may record location information (accelerometer, gyroscopic, magnetometer, GPS, and/or encoder data, etc.), a depth frame, and possibly a visual image frame.
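The per-capture data described above can be pictured as a simple record type. The following sketch is one possible layout under stated assumptions: the class name `CaptureRecord`, its field names, and the `replay` helper are hypothetical and are not part of the patent or of any real capture-device API.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class CaptureRecord:
    """One capture: a depth frame plus optional image and sensor data."""
    timestamp: float                      # seconds since start of scan
    depth_frame: List[List[float]]        # depth in metres, row-major
    image_frame: Optional[List[List[Tuple[int, int, int]]]] = None  # RGB
    acceleration: Optional[Vec3] = None   # accelerometer reading
    gyro: Optional[Vec3] = None           # gyroscope reading
    magnetometer: Optional[Vec3] = None   # magnetometer reading
    gps: Optional[Tuple[float, float]] = None  # (latitude, longitude)


def replay(log: Iterable[CaptureRecord]) -> Iterator[CaptureRecord]:
    """Yield logged captures in time order, as a later stage might read them."""
    yield from sorted(log, key=lambda record: record.timestamp)


# A hypothetical two-entry log, stored out of order.
log = [
    CaptureRecord(timestamp=0.2, depth_frame=[[1.9]], gyro=(0.0, 0.1, 0.0)),
    CaptureRecord(timestamp=0.1, depth_frame=[[2.0]]),
]
for record in replay(log):
    print(record.timestamp, record.depth_frame)
```

Making every field except the depth frame optional mirrors the text, where each capture includes a depth frame and only possibly an image frame or particular sensor readings. The same record type could be fed from a stored log or directly from a live device.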
