Invented by Dwaraka Venkataramana, Amy Miao, Alex Schwartzberg, Anteneh Shiferaw, Naziul Talukder, Jason Redisch, and James George III

Cloud networks have become the heart of modern businesses, but keeping them safe is hard. Security group rules are vital for protecting your data, but managing them is not simple. Let’s explore a solution from a recent patent application that analyzes packet logs to manage these rules automatically, with the goal of making your cloud safer and faster with less effort.

Background and Market Context

As more businesses move to the cloud, protecting digital assets becomes a top concern. Cloud providers, like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud, offer flexible computing power, storage, and networking. These resources are shared over the internet, which means they are always exposed to some risk. Attackers are always looking for weak spots to steal data or disrupt services. That is why cloud security is so important.

One of the main ways to protect cloud systems is by setting up security group rules. You can think of these rules like guards at the door. They decide who can come in or go out of your cloud resources. Each rule can allow or block certain types of internet traffic based on things like IP addresses or port numbers. (On some platforms, including AWS, security group rules can only allow traffic, and anything not explicitly allowed is denied.) For example, a rule might allow only web traffic on port 80 or accept connections only from a known range of addresses.

But here’s the challenge: as businesses grow, they create more rules. Some companies have thousands of rules for just one group of servers. Sometimes these rules overlap or even contradict each other. Over time, old rules stick around, even if they are no longer needed. This creates confusion. It makes it hard to know which rule is protecting what, and sometimes it opens new ways for hackers to get in. The more rules there are, the harder it becomes to spot weaknesses and close them.

Cloud providers set limits to help control this. For example, AWS caps the number of rules in each security group: the default quota is 60 inbound and 60 outbound rules, and it can be adjusted. But even with limits, the number of rules can quickly get out of hand. Managing them by hand is slow, hard, and often leads to mistakes. A human might not notice that two rules do the same job or that an old rule is no longer useful. When this happens, it adds overhead and can create security holes.

Today, there is a clear need for a better way to manage these rules. Companies want a system that can automatically watch which rules are being used, find old or unused rules, and remove them safely. This would save time, reduce risks, and keep cloud systems running smoothly.

Scientific Rationale and Prior Art

The idea of controlling traffic with rules is not new. Firewalls, which act as barriers between safe and unsafe networks, have been around for decades. Traditional firewalls use rules to allow or block traffic based on addresses, ports, and protocols. Security groups in the cloud work in a similar way. They filter traffic so that only what you want is allowed through.

Earlier approaches to managing these rules have mostly relied on manual work. System administrators check logs, look at each rule, and try to guess which ones are still needed. Some tools help by showing traffic statistics or highlighting possible issues, but they do not automatically clean up unused rules. They also struggle when rules overlap or when the same traffic could be allowed by several different rules.

Some cloud tools can show which rules have been used recently, but they do not go further. They do not suggest which rules are safe to remove. They do not check whether a rule is “shadowed,” meaning that all the traffic it could match is already handled by other, stricter rules. This means some rules are never actually used, but remain in the system, adding clutter and risk.

A few solutions try to automate rule management by using simple usage counters. However, they do not analyze detailed traffic logs to understand exactly which rule let a packet through. They also do not consider the order of rules and how one rule might make another unnecessary. As cloud environments get more complex, these simple methods are not enough.

Recent advances in log analysis and automation offer new hope. Cloud environments now generate detailed logs for every packet or flow of data. These logs can show exactly which packets were allowed or denied, and when. By matching log entries to security group rules, it is possible to get a clear picture of which rules are actually doing work and which are just taking up space.

But this is not easy. The log files are huge, and the rules are often complicated. It is too much for a human to sort through in real time. That is where the new invention comes in: using computers to do this job automatically, quickly, and accurately.

Invention Description and Key Innovations

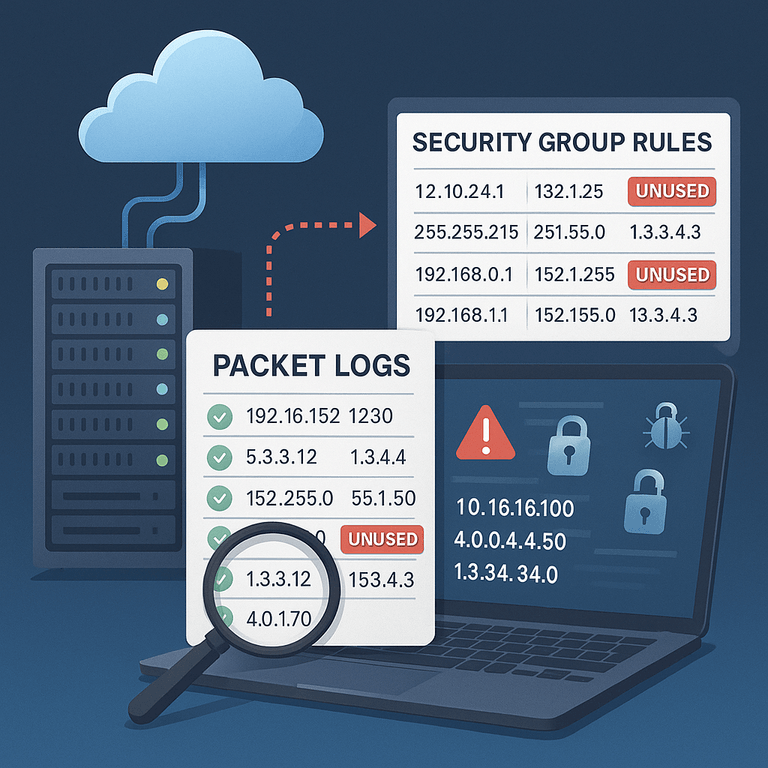

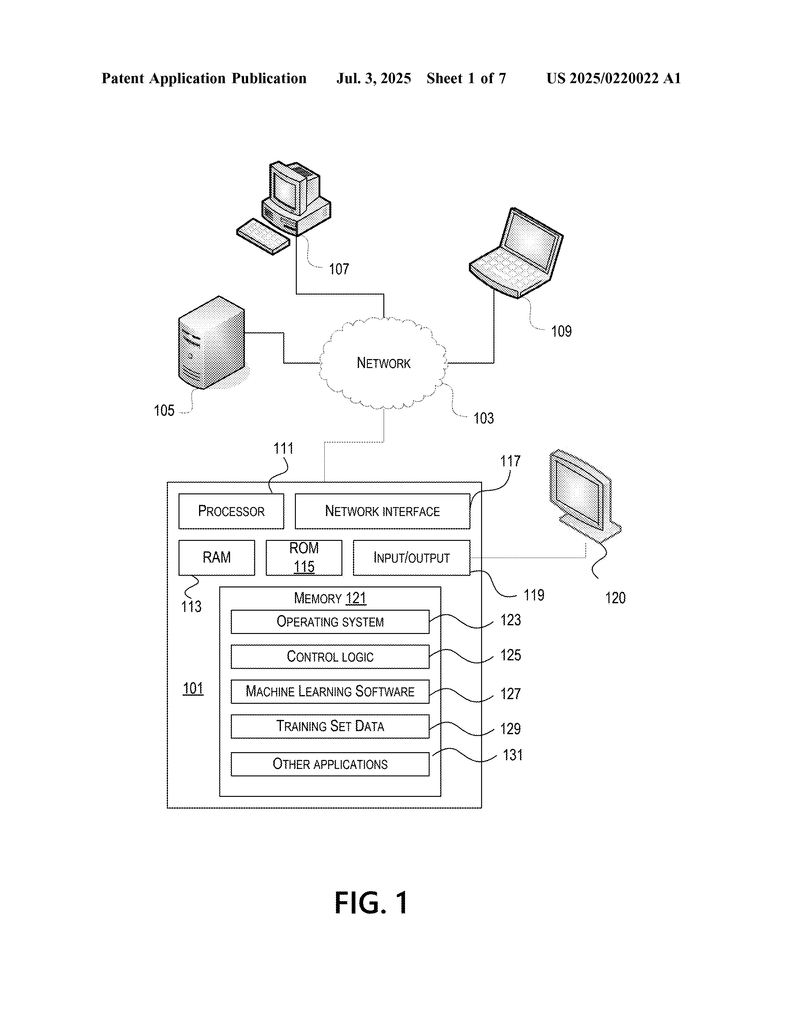

This new solution introduces a way for computers to automatically manage security group rules in the cloud by looking at packet logs. The idea is simple but powerful: Let the computer track which rules are really being used, figure out which ones are not, and remove the ones that are not needed. This helps keep the system safe and makes it run faster.

Here’s how the invention works, step by step:

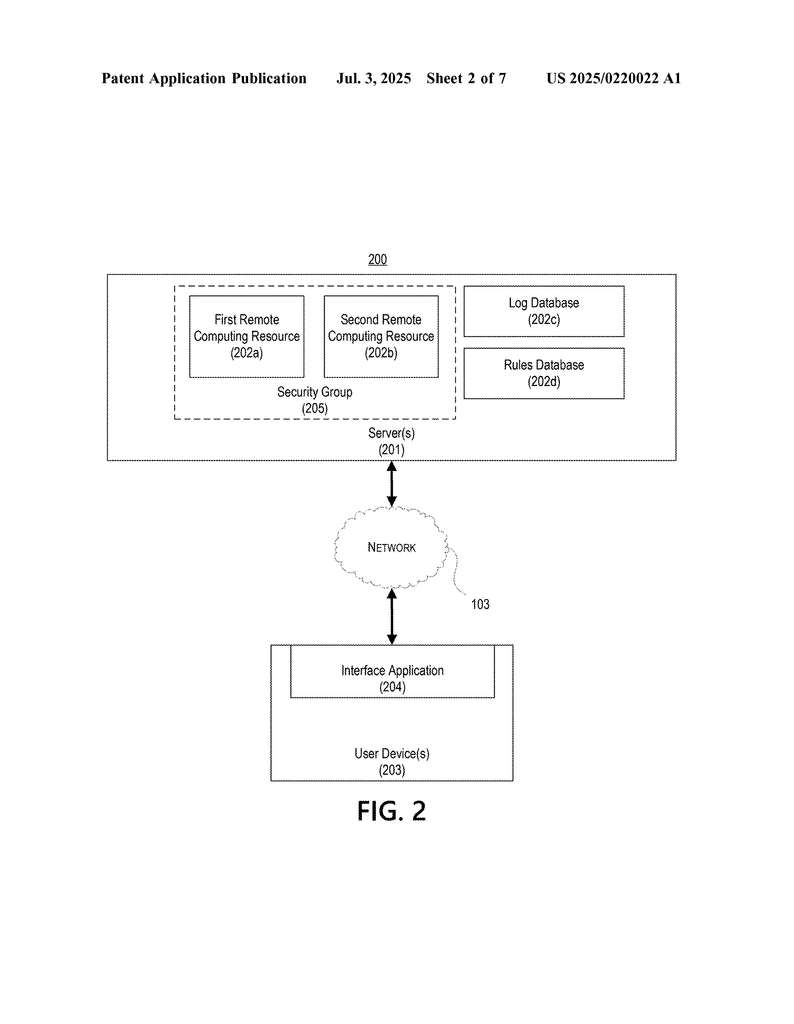

1. Collecting Log Data

The computer starts by collecting logs from the cloud. These logs show every packet or flow of data that comes in or goes out of a security group. For example, in AWS, these are called VPC Flow Logs. Each log entry records details like the source and destination, the ports, and whether the traffic was allowed or blocked.
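As a rough illustration of this step, the sketch below parses one record in the default (version 2) VPC Flow Logs format, which lists its fields separated by spaces. The `FlowRecord` class and the `parse_flow_record` helper are hypothetical names chosen for this example, not something taken from the patent application.

```python
from dataclasses import dataclass
from typing import Optional

# Field order of the default (version 2) VPC Flow Logs record format.
FIELDS = ["version", "account_id", "interface_id", "srcaddr", "dstaddr",
          "srcport", "dstport", "protocol", "packets", "bytes",
          "start", "end", "action", "log_status"]

@dataclass(frozen=True)
class FlowRecord:  # hypothetical container for the fields used later
    srcaddr: str
    dstaddr: str
    srcport: int
    dstport: int
    protocol: int  # IANA protocol number, e.g. 6 = TCP
    action: str    # "ACCEPT" or "REJECT"

def parse_flow_record(line: str) -> Optional[FlowRecord]:
    """Return a FlowRecord, or None if the line carries no flow details."""
    parts = line.split()
    if len(parts) != len(FIELDS):
        return None
    row = dict(zip(FIELDS, parts))
    if row["log_status"] != "OK":  # NODATA / SKIPDATA records have no traffic fields
        return None
    return FlowRecord(row["srcaddr"], row["dstaddr"], int(row["srcport"]),
                      int(row["dstport"]), int(row["protocol"]), row["action"])

sample = ("2 123456789010 eni-1235b8ca123456789 172.31.16.139 172.31.16.21 "
          "20641 22 6 20 4249 1418530010 1418530070 ACCEPT OK")
record = parse_flow_record(sample)
print(record.dstport, record.action)  # 22 ACCEPT
```

Custom log formats can add or reorder fields, so a real collector would need to read the configured format instead of assuming this one.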

2. Gathering Security Group Rules

Next, the computer finds all the rules that are set up for the security group. These rules say things like “allow web traffic from these addresses” or “block all traffic on certain ports.” This information is usually kept in a database.
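One way to picture this step is to turn stored rule rows into typed objects that later steps can compare against traffic. The row layout (`id`, `cidr`, `protocol`, `from_port`, `to_port`) and the `load_rules` helper are assumptions made for this sketch; the article does not describe the actual database schema.

```python
import ipaddress
from dataclasses import dataclass
from typing import Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]

@dataclass(frozen=True)
class Rule:  # hypothetical in-memory form of one security group rule
    rule_id: str
    cidr: Network
    protocol: int   # IANA protocol number, e.g. 6 = TCP
    from_port: int
    to_port: int

def load_rules(rows):
    """Convert stored rows (assumed dict layout) into Rule objects."""
    rules = []
    for row in rows:
        rules.append(Rule(
            rule_id=row["id"],
            cidr=ipaddress.ip_network(row["cidr"]),  # raises ValueError on a bad CIDR
            protocol=row["protocol"],
            from_port=row["from_port"],
            to_port=row["to_port"],
        ))
    return rules

rows = [
    {"id": "web-anywhere", "cidr": "0.0.0.0/0", "protocol": 6, "from_port": 80, "to_port": 80},
    {"id": "ssh-office", "cidr": "203.0.113.0/24", "protocol": 6, "from_port": 22, "to_port": 22},
]
rules = load_rules(rows)
print([r.rule_id for r in rules])  # ['web-anywhere', 'ssh-office']
```

Parsing the CIDR once at load time means malformed rules are caught early, before any log matching starts.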

3. Sorting Rules by Permissiveness

The computer then sorts the rules from most strict (least permissive) to least strict (most permissive). This is important because, in security, the strictest rule often matters the most. For example, a rule that blocks all traffic from a certain address should take priority over a rule that allows traffic from a wider range.
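The article does not say how permissiveness is measured, so the sketch below assumes a simple score: the number of addresses a rule covers multiplied by the number of ports it covers. The `Rule` class and the `permissiveness` function are illustrative names only.

```python
import ipaddress
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:  # hypothetical rule shape for this example
    rule_id: str
    cidr: str
    from_port: int
    to_port: int

def permissiveness(rule: Rule) -> int:
    """Assumed metric: addresses covered times ports covered."""
    addresses = ipaddress.ip_network(rule.cidr).num_addresses
    ports = rule.to_port - rule.from_port + 1
    return addresses * ports

rules = [
    Rule("any-high-ports", "0.0.0.0/0", 1024, 65535),
    Rule("office-ssh", "203.0.113.0/24", 22, 22),
    Rule("single-host-https", "198.51.100.7/32", 443, 443),
]

# Strictest (smallest score) first, loosest last.
ordered = sorted(rules, key=permissiveness)
print([r.rule_id for r in ordered])
# ['single-host-https', 'office-ssh', 'any-high-ports']
```

A real scoring function might also weigh protocols or rule direction; this one only shows the idea of ordering rules from narrow to broad.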

4. Matching Log Entries to Rules

For each log entry, the computer checks which rule allowed or blocked that traffic. It does this by going through the sorted list of rules, from strictest to loosest, and finding the first rule that matches. This way, it can tell exactly which rule was responsible for each packet.
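The first-match walk described here might look like the following sketch. It assumes the rules are already sorted strictest first and that a rule matches on source address and destination port only; `Rule`, `matches`, and `first_match` are hypothetical names.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional

@dataclass(frozen=True)
class Rule:  # simplified rule: one source CIDR and one destination port
    rule_id: str
    cidr: str
    port: int

def matches(rule: Rule, src_ip: str, dst_port: int) -> bool:
    return (dst_port == rule.port
            and ipaddress.ip_address(src_ip) in ipaddress.ip_network(rule.cidr))

def first_match(sorted_rules: List[Rule], src_ip: str, dst_port: int) -> Optional[Rule]:
    """Return the first (strictest) rule that covers this flow, if any."""
    for rule in sorted_rules:
        if matches(rule, src_ip, dst_port):
            return rule
    return None

# Already ordered from least permissive to most permissive.
sorted_rules = [
    Rule("office-https", "203.0.113.0/24", 443),
    Rule("any-https", "0.0.0.0/0", 443),
]

print(first_match(sorted_rules, "203.0.113.9", 443).rule_id)   # office-https
print(first_match(sorted_rules, "198.51.100.1", 443).rule_id)  # any-https
print(first_match(sorted_rules, "198.51.100.1", 22))           # None
```

The first flow is covered by both rules, but it is attributed to `office-https` because that rule comes first in the sorted list.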

5. Marking Rules as Used, Unused, or Shadowed

As the computer goes through the logs, it keeps track of which rules are actually being used. If a rule never matches any packet in the logs, it is marked as unused. If a rule could have matched some traffic, but a stricter rule matched it first, it is marked as “shadowed.” Shadowed rules are not needed because they are always overridden by other rules.
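The three labels can be sketched as follows, under the same simplifying assumptions as before: rules are sorted strictest first and match on source address and destination port. The `classify` function is a hypothetical illustration of the bookkeeping, not the method claimed in the application.

```python
import ipaddress
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:  # simplified rule: one source CIDR and one destination port
    rule_id: str
    cidr: str
    port: int

def matches(rule, src_ip, dst_port):
    return (dst_port == rule.port
            and ipaddress.ip_address(src_ip) in ipaddress.ip_network(rule.cidr))

def classify(sorted_rules, flows):
    """Label each rule 'used', 'shadowed', or 'unused' for the given flows."""
    used, overlapped = set(), set()
    for src_ip, dst_port in flows:
        hits = [r.rule_id for r in sorted_rules if matches(r, src_ip, dst_port)]
        if hits:
            used.add(hits[0])            # the strictest match gets the credit
            overlapped.update(hits[1:])  # looser rules could have matched too
    status = {}
    for rule in sorted_rules:
        if rule.rule_id in used:
            status[rule.rule_id] = "used"
        elif rule.rule_id in overlapped:
            status[rule.rule_id] = "shadowed"
        else:
            status[rule.rule_id] = "unused"
    return status

sorted_rules = [
    Rule("office-https", "203.0.113.0/24", 443),
    Rule("office-ssh", "203.0.113.0/24", 22),
    Rule("any-https", "0.0.0.0/0", 443),
]
flows = [("203.0.113.9", 443), ("203.0.113.40", 443)]
status = classify(sorted_rules, flows)
print(status)
# {'office-https': 'used', 'office-ssh': 'unused', 'any-https': 'shadowed'}
```

These labels only reflect the traffic in the logs that were analyzed, so a rule that looks unused over one week may still matter for a monthly job.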

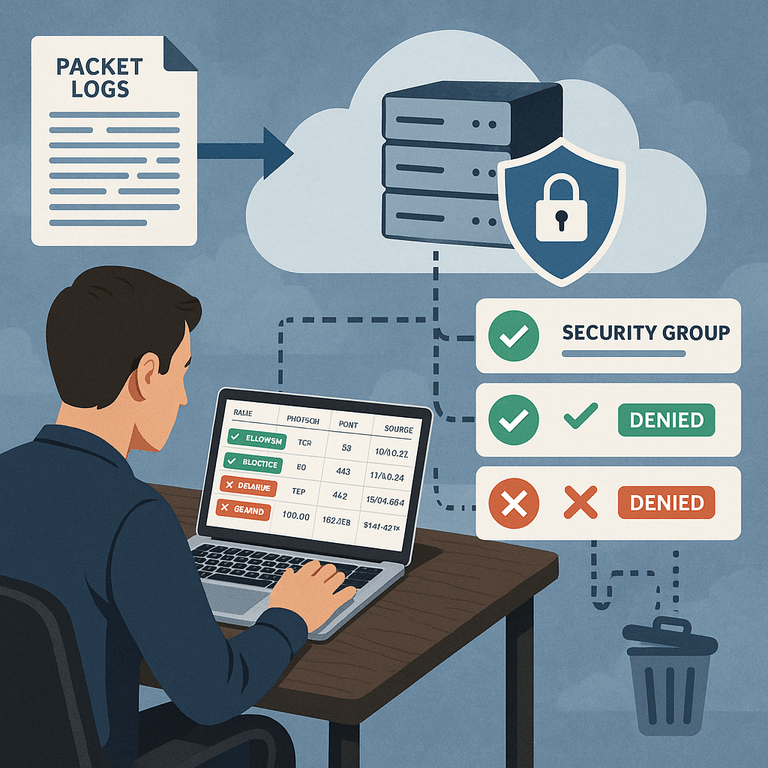

6. Removing or Disabling Unused and Shadowed Rules

Once the computer knows which rules are not being used, it can safely remove them. This can mean deleting the rule or simply turning it off. By doing this, the system becomes safer because there are fewer ways for attackers to sneak in. It also makes the system faster, because there are fewer rules to check for each packet.

7. Keeping Stakeholders in the Loop

Before a rule is removed, the computer can alert the people responsible for managing the system. They can approve or reject the removal, just in case the rule is needed for a special reason. This step makes sure nothing important is lost by accident.
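Steps 6 and 7 together can be sketched as an approval gate in front of the removal. In the example below, `approve` stands in for a human decision and `remove` stands in for whatever cloud API call disables or deletes the rule; both callbacks, and the function names, are assumptions made for illustration.

```python
from typing import Callable, Dict, List

def plan_removals(status: Dict[str, str]) -> List[str]:
    """List the rules that are candidates for removal."""
    return [rule_id for rule_id, label in status.items()
            if label in ("unused", "shadowed")]

def remove_with_approval(status: Dict[str, str],
                         approve: Callable[[str, str], bool],
                         remove: Callable[[str], None]) -> List[str]:
    """Remove only the candidate rules that a stakeholder approves."""
    removed = []
    for rule_id in plan_removals(status):
        if approve(rule_id, status[rule_id]):
            remove(rule_id)
            removed.append(rule_id)
    return removed

status = {"office-https": "used", "office-ssh": "unused", "any-https": "shadowed"}
audit_log: List[str] = []

removed = remove_with_approval(
    status,
    # Stand-in for a human reviewer who wants to keep the SSH rule.
    approve=lambda rule_id, reason: rule_id != "office-ssh",
    # Stand-in for the real delete or disable call; here it only records the ID.
    remove=audit_log.append,
)
print(removed)  # ['any-https']
```

Keeping the removal behind a callback makes it easy to run the same logic as a dry run that only records what would have been removed.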

8. Updating and Reporting

The computer creates reports that show which rules are used, which are shadowed, and which can be removed. This gives security teams a clear view of their system and helps them make good decisions about future changes.
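A report of this kind could be as simple as a grouped text summary. The layout below and the `build_report` function are hypothetical; the article does not describe the actual report format.

```python
from collections import Counter

def build_report(status):
    """Summarize rule labels as plain text, one line per label."""
    counts = Counter(status.values())
    lines = [f"Rules analyzed: {len(status)}"]
    for label in ("used", "shadowed", "unused"):
        ids = sorted(rule_id for rule_id, s in status.items() if s == label)
        lines.append(f"{label} ({counts[label]}): {', '.join(ids) or '-'}")
    return "\n".join(lines)

status = {"office-https": "used", "office-ssh": "unused", "any-https": "shadowed"}
report = build_report(status)
print(report)
# Rules analyzed: 3
# used (1): office-https
# shadowed (1): any-https
# unused (1): office-ssh
```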

This system can work with many cloud platforms, but it is especially helpful for environments like AWS, where security groups and flow logs are standard features. The computer can handle large amounts of data quickly, making it possible to keep up with even the busiest cloud networks.

Let’s look at what sets this solution apart:

– Automated Analysis: The computer does all the work, from reading logs to finding which rules are needed. There is no need for manual checks.

– Least Permissive Rule Matching: By always matching the strictest rule first, the system attributes each packet to the narrowest rule that covers it.

– Shadowed Rule Detection: The system finds rules that are never used because stricter ones always apply. This helps remove clutter and keeps security tight.

– Safe Rule Removal: Unused and shadowed rules are identified and can be disabled or deleted, but only with approval from the right people.

– Continuous Improvement: As traffic patterns change, the system keeps watching and updating, so the security setup always fits the current needs.

– Speed and Scalability: The process can handle huge logs and many rules, which would be impossible for a human to do in real time.

– Integration with Cloud Tools: The invention works with existing cloud features like flow logs and security group APIs, so it fits easily into modern setups.

The result is a cloud environment that is easier to manage, safer from attack, and runs more smoothly. Security teams can focus on real risks instead of chasing after old or unused rules. The business can move faster and with more confidence, knowing that its security rules reflect how the network is actually used.

Conclusion

Managing security group rules in the cloud is a vital but tough job. Too many rules create confusion, slow down the system, and open doors for attackers. This invention uses automated log analysis to watch, analyze, and clean up security rules by studying real traffic logs. It finds which rules are helpful, which are not needed, and which are covered by stricter rules. By removing clutter and focusing on what matters, cloud systems become safer and faster. This approach changes the way cloud security is handled, moving from slow, manual checks to fast, automatic protection. For any business using the cloud, this is a big step forward in keeping its digital world safe.

To read the full application, visit https://ppubs.uspto.gov/pubwebapp/ and search for 20250220022.