Invented by Spong; Mason

Extended Reality, or XR, is changing the way people interact with digital worlds. The technology behind XR blends real and virtual environments, creating a new way for users to see, touch, and move digital objects as if they were real. One of the most exciting new ideas in XR is letting users move virtual objects simply by making a pinch gesture with their fingers. This post explains how this works, why it matters, and what makes this invention stand out.

Background and Market Context

XR is a big word, but it covers technologies you may have heard of before: virtual reality (VR), augmented reality (AR), and mixed reality (MR). XR is about putting digital things into your real world or taking you into a digital world. Think of wearing special glasses or a headset that can show you things that are not really there—like a dinosaur walking in your living room, or a 3D map floating in front of your eyes.

Many people today use XR for games, learning, or work. Kids may play games where they catch virtual monsters in their backyard. Doctors can use XR to practice surgeries without touching a real patient. Teachers can walk students through the solar system without leaving the classroom. Artists can build 3D models in mid-air, and workers can get step-by-step help fixing machines, all while seeing both the real and the digital world at once.

But XR has one big challenge: how do you tell the computer what you want to do? In the real world, you can just reach out and touch something. In XR, it’s not that simple. Many headsets and glasses do not have buttons or joysticks. Even if they do, looking away from the digital object to find a button is not fun. Touchscreens and controllers can get in the way, feel clumsy, or take you out of the experience.

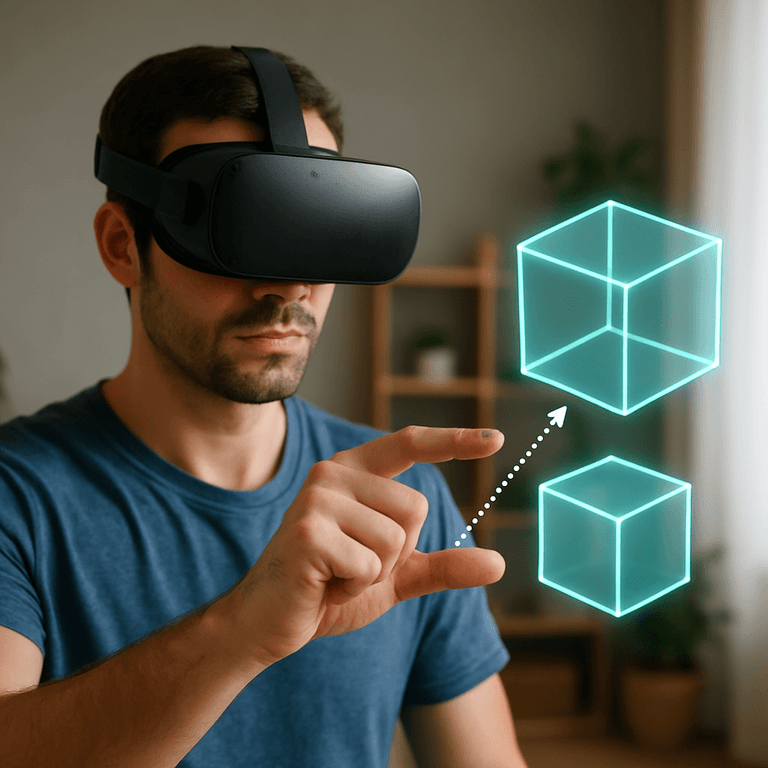

What people want is to use their hands, just like in real life. If you could pinch your fingers together and grab a floating object, move your hand, and have the object follow, that would feel natural. It would make XR easy for anyone to use, from a child playing a game to a worker moving a digital part on top of a real machine.

The XR market is growing fast. Companies big and small are trying to make XR gear lighter, more comfortable, and more useful. A big part of this is making the way people interact with XR as natural as possible. The more “real” XR feels, the more people will use it. That’s why ideas like moving virtual objects using just a pinch gesture are so important. They bring XR closer to feeling like the real world.

Scientific Rationale and Prior Art

To understand why this invention matters, let’s step back and see what came before. XR systems have tried many ways to let users interact with digital objects. Early systems used controllers—sticks with buttons, like in video games. Some used touchpads or even gloves packed with sensors. Others tried voice commands or simple hand waves seen by cameras.

Controllers work, but they are not natural. You have to learn what each button does. Touchpads and buttons on glasses or headsets are small, and you have to take your hand off the object you want to move. Gloves with sensors can be heavy, hot, and expensive. Voice commands can be slow and may not work in noisy places.

Some XR systems use cameras to watch your hands. They try to figure out if you are pointing, waving, or making shapes. This hand-tracking is getting better every year. Still, just knowing where your hand is does not always make it easy to grab and move a virtual thing. Systems often only let you pick up objects at their center, which feels odd. If you try to “grab” an object off to the side, the whole object might jump, break the illusion, or not move as expected.

Another problem is that tracking gestures like a pinch—touching your thumb and index finger together—can be hard. Cameras need to see your hand clearly, and the XR system must tell the difference between a real pinch, a wave, or just scratching your nose. Some XR systems use simple rules, like “if the thumb and finger are close enough, it’s a pinch.” But this can lead to mistakes, like moving an object when you didn’t mean to.

There have been some attempts to use machine learning, which means teaching computers to spot patterns such as the shape of your hand when you pinch. These models look at pictures of hands and learn to tell a pinch from other gestures. A well-trained model can make pinch detection more accurate than a simple distance rule, and it can keep working across different hand shapes, skin colors, and lighting.

But even with better hand tracking, most XR systems do not let you grab an object exactly where you touch it. Imagine grabbing a cup by the handle, but the cup always moves as if you grabbed it in the middle. That’s not what you expect. For XR to feel right, the system needs to know exactly where you “grabbed” the object and move it as if your fingers are holding that spot.

The patent application at the heart of this article addresses this. It combines good hand tracking, smart pinch detection, and a way to move objects from any point you grab them, not just the center. It even keeps track of your hand as you move, updating the virtual object in real time. This makes the experience smooth, natural, and fun.

Invention Description and Key Innovations

Let’s break down how this new XR system works and what makes it special. The main idea is simple: you see a virtual object in the XR world, you make a pinch gesture with your hand, and you can move the object just like you would in real life.

Here’s how it works step by step:

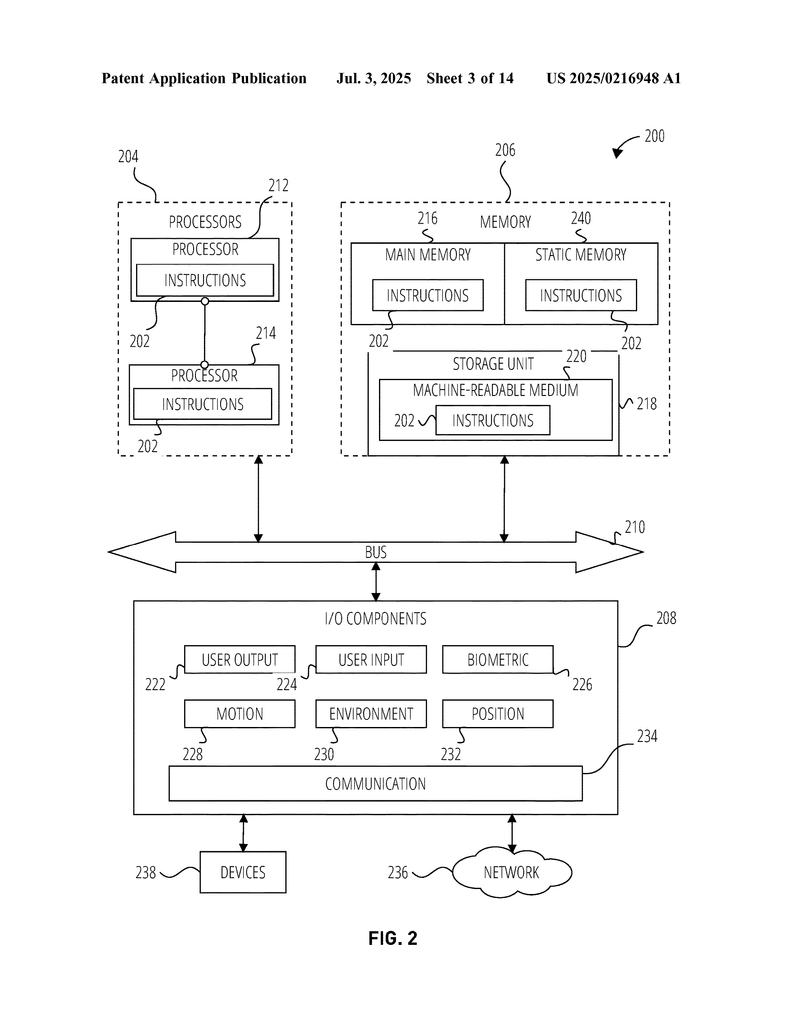

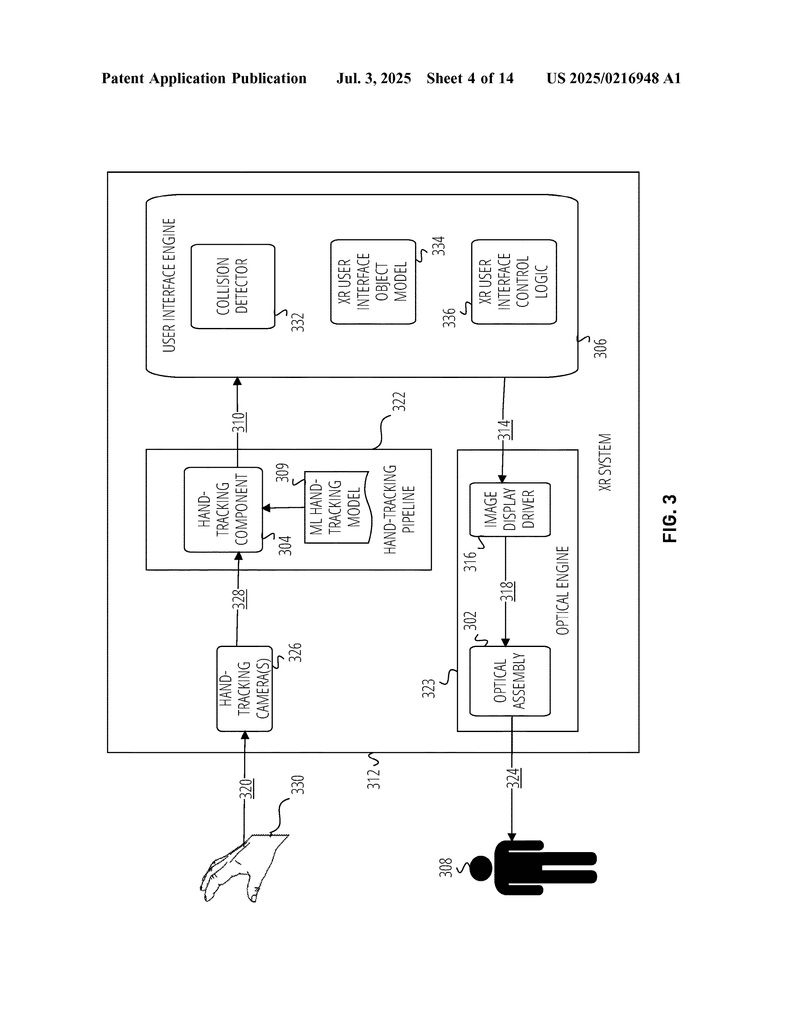

First, the XR system uses cameras to watch your hand. The cameras send images to a computer inside the headset or glasses. Software looks at these images, figures out where your hand is, and tries to spot when you make a pinch gesture. This can be done using simple distance checks—are your thumb and index finger close enough?—or better yet, using machine learning that has been trained to spot pinches in all kinds of hands and lighting.
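The simple distance check mentioned above can be sketched in a few lines of Python. This is only an illustration, not the method from the patent application: the function names, the 2 cm threshold, and the choice of the fingertip midpoint as the pinch location are all assumptions made for the example.

```python
import math

# Hypothetical threshold in metres; a real system would tune this value.
PINCH_THRESHOLD_M = 0.02


def is_pinching(thumb_tip, index_tip, threshold=PINCH_THRESHOLD_M):
    """Return True when the two fingertips are within `threshold` of each other."""
    return math.dist(thumb_tip, index_tip) <= threshold


def pinch_point(thumb_tip, index_tip):
    """Assumed pinch location: the midpoint between the two fingertips."""
    return tuple((t + i) / 2 for t, i in zip(thumb_tip, index_tip))
```

A rule like this is easy to build but can misfire, which is why the article points to a trained model as the better option.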

When you pinch, the system doesn’t just see that you pinched somewhere near the object. It figures out exactly where your fingers were in the XR space. It checks if your pinch is inside the “boundary” of a virtual object. This boundary can be a simple box around the object, or a more complex 3D shape called a “collider.” If your pinch is inside, the system knows you want to grab that object.
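For the simplest kind of boundary, a box around the object, the "is the pinch inside?" test is a per-axis comparison. The sketch below assumes an axis-aligned box described by a center and half sizes; the class name `BoxBoundary` and its fields are hypothetical and chosen only for this example.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class BoxBoundary:
    """Axis-aligned box around a virtual object (illustrative only)."""
    center: Vec3     # middle of the box in XR space
    half_size: Vec3  # half of the box's width, height, and depth

    def contains(self, point: Vec3) -> bool:
        # The point is inside when it lies within the half size on every axis.
        return all(
            abs(p - c) <= h
            for p, c, h in zip(point, self.center, self.half_size)
        )
```

A more complex collider would replace `contains` with a test against its own shape, but the question it answers stays the same.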

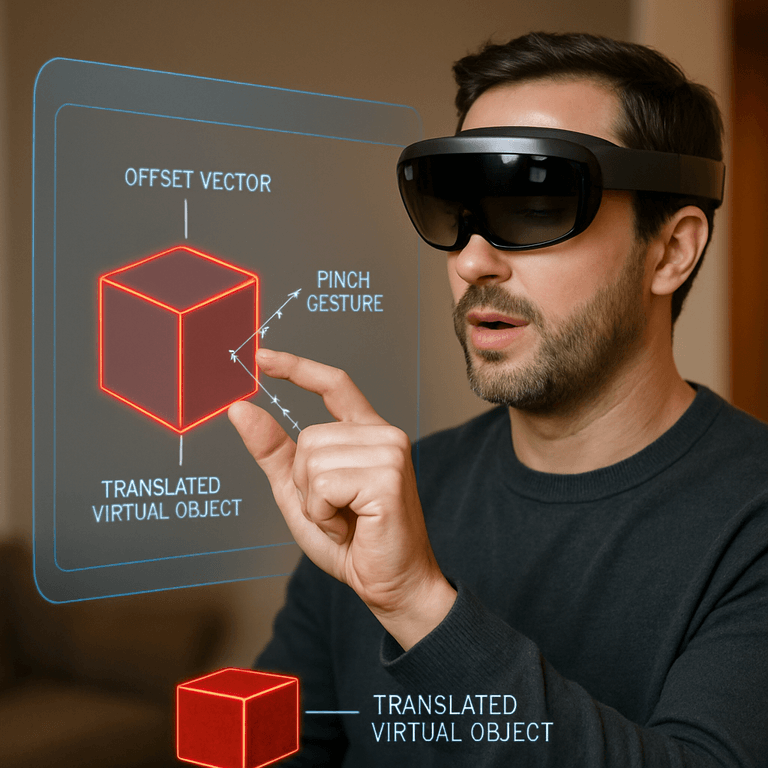

Now comes the key part: instead of always grabbing the object by its center, the system measures the offset vector. This means it figures out the distance and direction from the center of the object to the exact spot where you pinched. It’s like grabbing a real cup by the edge, not the middle.

When you move your hand while holding the pinch, the system tracks your new finger location. It uses the offset vector to keep the same spot of the object under your fingers, just like if you were holding it in real life. If you let go (open your fingers), the system sees the pinch has ended and stops moving the object.
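The offset idea comes down to two subtractions. The sketch below follows the description above, where the offset points from the object's center to the pinch spot; the function names are made up for illustration, and rotation is left out to keep the example small.

```python
def subtract(a, b):
    """Component-wise a - b for 3D points given as tuples."""
    return tuple(x - y for x, y in zip(a, b))


def grab_offset(object_center, pinch_point):
    """Offset vector from the object's center to the spot that was pinched."""
    return subtract(pinch_point, object_center)


def follow_pinch(new_pinch_point, offset):
    """New object center that keeps the grabbed spot under the fingers."""
    return subtract(new_pinch_point, offset)


# Grab an object centered at the origin by a point near its edge...
offset = grab_offset((0, 0, 0), (2, 1, 0))
# ...then move the hand. The center shifts so the same spot stays pinched.
new_center = follow_pinch((5, 1, 3), offset)
```

Adding the offset back to `new_center` gives the new pinch point, which is exactly the "same spot stays under your fingers" behavior.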

This approach makes moving objects in XR feel much more natural. You can pick up a box by the corner, a virtual ball by the side, or a tool by the handle. The object moves with you, staying under your fingers, no matter how you grabbed it. This is a big step forward from past systems that only let you grab things by their center.

There are more clever parts in this invention. The system keeps checking, in real time, where your hand is and updates the object’s position as you move. If you keep holding the pinch, it keeps following your hand. When you let go, it stops. If you try to grab outside the object, nothing happens, so you don’t move things by mistake.
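Put together, the grab, follow, and release behavior is a small per-frame state machine. The sketch below is one possible way to write it, not the implementation from the patent application. `PinchMover`, `VirtualObject`, and the rule that a grab can only begin on the frame a pinch starts are assumptions for this example.

```python
from dataclasses import dataclass


def subtract(a, b):
    return tuple(x - y for x, y in zip(a, b))


@dataclass
class VirtualObject:
    center: tuple
    half_size: tuple  # box boundary, assumed for simplicity

    def contains(self, point):
        return all(abs(p - c) <= h
                   for p, c, h in zip(point, self.center, self.half_size))


class PinchMover:
    """Call update() once per tracking frame."""

    def __init__(self, obj):
        self.obj = obj
        self.offset = None         # None means the object is not held
        self.was_pinching = False  # pinch state from the previous frame

    def update(self, pinching, pinch_point):
        pinch_started = pinching and not self.was_pinching
        self.was_pinching = pinching
        if not pinching:
            self.offset = None     # pinch ended: release the object
        elif pinch_started and self.obj.contains(pinch_point):
            # Pinch began inside the boundary: remember where it was grabbed.
            self.offset = subtract(pinch_point, self.obj.center)
        elif self.offset is not None:
            # Still holding: keep the grabbed spot under the fingers.
            self.obj.center = subtract(pinch_point, self.offset)
        return self.obj.center
```

A pinch that starts outside the boundary never sets an offset, so dragging an already-closed pinch through the object leaves it where it is.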

The system can also use boundaries defined by special collider shapes, so you can grab oddly shaped objects just as you would in real life. By using machine learning to detect the pinch, it can work for many different people in many settings, which helps make the system reliable and easy to use.

The invention can be used in many kinds of XR devices, including head-worn glasses, contact lenses with displays, or even on tablets and phones with hand-tracking cameras. The computer inside the device can be a small chip or a powerful processor. The invention can run on the device itself, or use the cloud for more complex calculations.

This system is fast, natural, and easy to use. It does not need extra hardware like gloves or controllers. It works with just your hands and eyes. By letting you grab and move digital objects as you would in the real world, this invention makes XR more useful and more fun for everyone.

Conclusion

The future of XR depends on making the digital world feel as real as possible. This new way of moving virtual objects—using a simple pinch and tracking exactly where you grabbed—brings us one step closer. It solves old problems of awkward controls and makes XR feel natural for users of all ages. Whether you are playing, learning, or working, this invention lets you use your hands the way you always have. The result is an XR experience that is smoother, smarter, and more human than ever before.

To read the full patent application, go to https://ppubs.uspto.gov/pubwebapp/ and search for 20250216948.