Invented by Srikanth Kandula, Deepak Bansal, Rishabh Tewari, and Gerald Roy De Grace

Modern data centers face a big challenge: moving huge amounts of data quickly and smoothly, especially when running artificial intelligence (AI) workloads. A new patent application describes an enhanced router, or “smart switch,” that can help solve this problem. This post explains why this matters, how earlier approaches have tackled it, and what makes the new invention stand out.

Background and Market Context

Almost everything we do online today depends on data centers. These are large facilities full of computers and networking equipment, housing the “cloud” that powers apps, websites, and AI. When you use a voice assistant or upload a photo, these data centers do the heavy lifting.

Data centers need to move data between computers, or “hosts,” as fast as possible. This is especially true for AI. AI workloads like training a chatbot or recognizing pictures require moving large numbers of data packets very quickly between many servers. If the network is slow or crowded, AI tasks take longer and cost more.

Cloud providers and big companies want their data centers to be as fast and efficient as possible. They have started using software-defined networking (SDN), which makes it easier to control and change how networks behave. SDN lets companies manage traffic, set rules, and adjust resources on the fly. But even with SDN, there are limits to how much data can be moved and how quickly computers can “talk” to each other.

One of the biggest pain points for AI is the time it takes to move and combine data during training. Imagine a group of computers, each working on a small part of a giant puzzle. After each step, they need to share what they’ve learned with each other. This means sending lots of messages back and forth, which can slow things down if the network isn’t smart about handling this traffic.
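In data-parallel training, that sharing step is usually an all-reduce: every worker contributes its gradient, and every worker ends up with the same combined result, commonly the element-wise average. The short Python sketch below shows only what gets computed, not how the network moves it. Plain lists stand in for gradient tensors, and averaging is assumed as the combine rule; the patent as summarized here does not prescribe one.

```python
from typing import List


def allreduce_mean(worker_grads: List[List[float]]) -> List[List[float]]:
    """Return what each worker holds after a synchronous gradient all-reduce.

    Each inner list is one worker's gradient for the same model parameters.
    Afterwards every worker has the same element-wise average, so all model
    replicas take an identical update step.
    """
    n = len(worker_grads)
    # zip(*...) groups the i-th entry of every worker's gradient together.
    summed = [sum(values) for values in zip(*worker_grads)]
    mean = [s / n for s in summed]
    # Every worker receives its own copy of the same averaged gradient.
    return [list(mean) for _ in range(n)]


grads = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # three workers, two parameters
print(allreduce_mean(grads))  # [[3.0, 4.0], [3.0, 4.0], [3.0, 4.0]]
```

Every worker needs data from every other worker before it can continue, which is why the network's handling of this exchange matters so much.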

Traditional routers and switches just pass data along without doing any work on it. All the heavy lifting, such as combining (aggregating) or broadcasting data, happens at the endpoints (servers or GPUs). This wastes bandwidth and time because the same data often gets sent multiple times, or servers have to wait for everything to arrive before combining it.

To keep up with growing demand for AI, companies need a way to make networks smarter and more helpful. That’s where the enhanced router comes in. By doing some of the data work inside the network itself, this new approach promises to cut down on traffic, reduce wait times, and make data centers run more smoothly.

In summary, cloud providers, enterprises, and AI researchers all want faster, more efficient networks. The market is huge, and any improvement in how networks handle AI workloads can save money, boost performance, and open new possibilities for smart services.

Scientific Rationale and Prior Art

To understand what’s new in this patent, it’s helpful to look at what has come before.

Traditional networks are built around a simple rule: routers and switches move data from place to place but don’t try to understand or change it. All the “thinking” happens at the endpoints: servers, GPUs, or CPUs. If you want to broadcast data to many computers (like sharing AI model updates) or combine data from different sources (like adding together results), each computer has to send and receive lots of messages. This creates a lot of repeated traffic, slowing everything down.

Researchers and engineers have tried to make things better in several ways. One common solution is to use multicast, where the network sends a single copy of data to a group instead of sending many copies separately. Another is to use in-network aggregation, where some switches or routers can add together data (like summing gradients in AI) before sending the result on. These ideas have been used in high-performance computing (HPC) clusters and some specialized AI networks.
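Some back-of-the-envelope arithmetic shows why multicast and in-network aggregation help. This sketch assumes N workers attached to a single switch (a star topology), a gradient or model update of S bytes, and a naive endpoint-only exchange in which every worker unicasts its data to every peer. The numbers are illustrative, not measurements.

```python
MIB = 2**20


def endpoint_exchange_link_bytes(n_workers: int, grad_bytes: int) -> int:
    """Bytes crossing worker<->switch links when every worker unicasts to every peer.

    There are n * (n - 1) messages, and each one crosses the sender's uplink
    and the receiver's downlink.
    """
    return 2 * n_workers * (n_workers - 1) * grad_bytes


def in_network_aggregation_link_bytes(n_workers: int, grad_bytes: int) -> int:
    """Bytes crossing worker<->switch links when the switch sums the gradients.

    Each worker sends its gradient up once, and the switch sends the single
    combined result back down to each worker.
    """
    return 2 * n_workers * grad_bytes


def unicast_broadcast_sender_bytes(n_receivers: int, msg_bytes: int) -> int:
    """Bytes on the sender's uplink when it sends a separate copy to each receiver."""
    return n_receivers * msg_bytes


def multicast_sender_bytes(msg_bytes: int) -> int:
    """Bytes on the sender's uplink with multicast: one copy, replicated by the network."""
    return msg_bytes


N, S = 8, 100 * MIB  # illustrative: 8 workers, 100 MiB gradient or update
print(endpoint_exchange_link_bytes(N, S) // MIB)        # 11200
print(in_network_aggregation_link_bytes(N, S) // MIB)   # 1600
print(unicast_broadcast_sender_bytes(N - 1, S) // MIB)  # 700
print(multicast_sender_bytes(S) // MIB)                 # 100
```

In this simple model, in-network aggregation cuts link traffic by a factor of N - 1 (7 here). Real endpoint collectives such as ring all-reduce are smarter than the naive exchange, sending roughly 2(N - 1)/N × S bytes per worker over many sequential steps, so practical savings are smaller than this model suggests.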

Older solutions often require special hardware or custom protocols. Some use programmable switches or network interface cards (NICs) that can run small programs to change how packets are handled. For example, interconnects like InfiniBand and programmable technologies like P4 or eBPF allow some simple processing in the network. However, these solutions are often hard to standardize, don’t work well with every type of workload, or can’t be easily managed across different pieces of hardware.

Another challenge is making sure that network devices know which workloads need special handling. In many cases, networks can’t “see” what type of data is being sent, so they just treat everything the same way.

Cloud providers have started using SDN to control how traffic moves, but SDN mostly focuses on routing and security, not on making the network itself “smarter” about the content of data packets.

In all these earlier approaches, there’s a need for a common way to tell network devices what to do with certain types of data. That’s where APIs (application programming interfaces) come in. Some projects have tried to create APIs for programming network devices, but these are often limited to specific vendors or types of hardware.

In short, while there have been efforts to improve how networks handle complex workloads, most solutions are either too specialized, too hard to manage, or don’t work well across different data center environments. There’s a clear need for a flexible, standard approach that lets network devices help with AI workloads in a way that’s easy to control and works with the existing SDN infrastructure.

Invention Description and Key Innovations

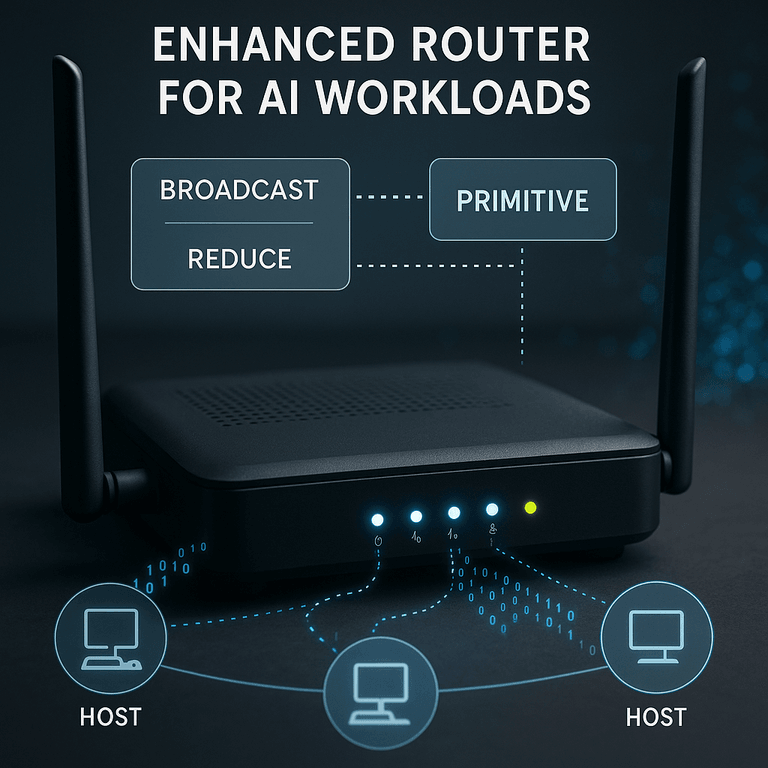

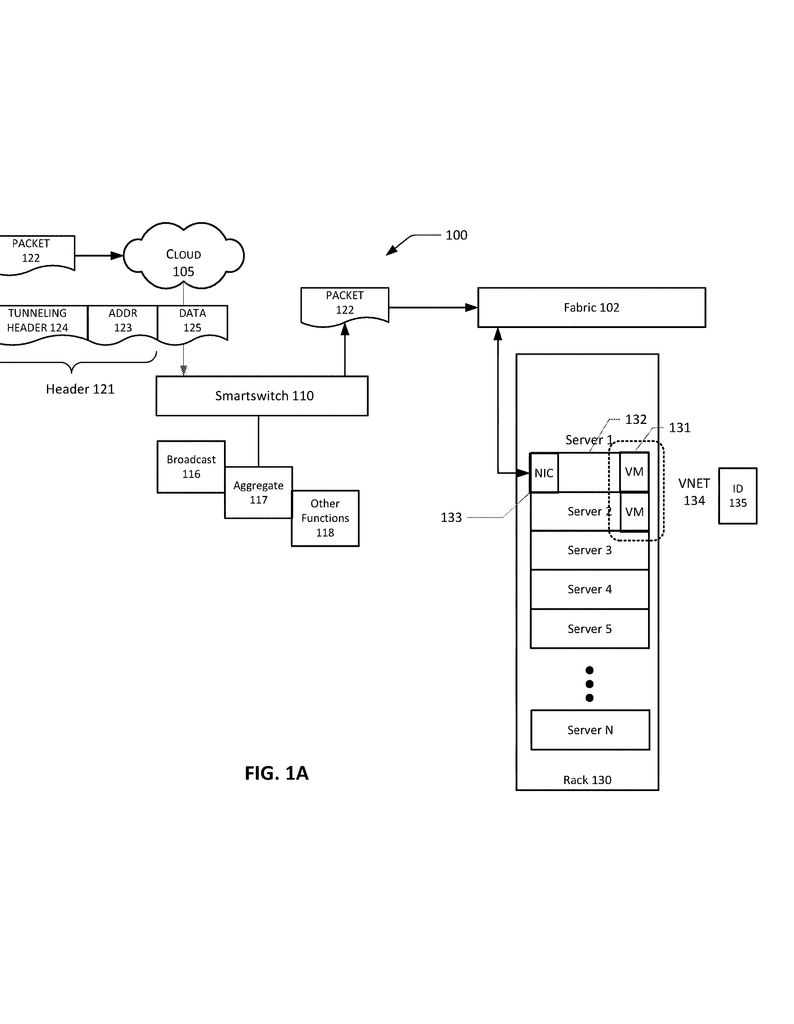

The patent describes a new type of router—called an “enhanced router” or “smart switch”—that can process data packets as they move through the network, not just at the endpoints. Here’s how it works and why it matters.

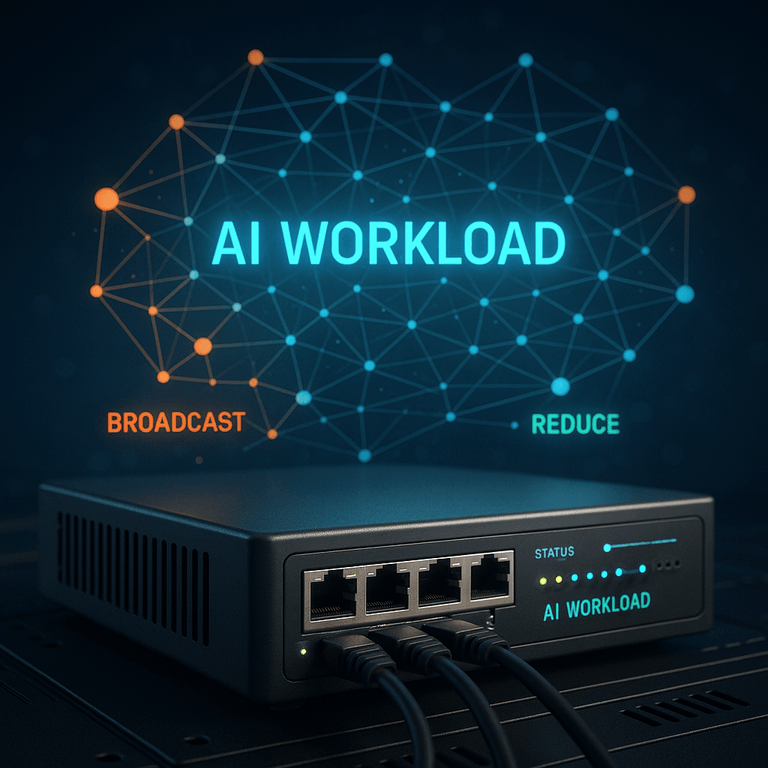

At its core, the enhanced router is designed to perform “primitives” on data packets. A primitive is a basic operation, like broadcasting a message, combining data from different sources, or compressing information. These are the building blocks of complex AI tasks.

Unlike old routers, which just send data along, the enhanced router looks inside each packet and decides what to do based on instructions it receives from the SDN control plane specifying which primitives to apply. This means operators can tell the router to perform certain actions on data packets before they reach the servers. For example, the router can:

– Broadcast a data packet to all servers working on the same AI task, saving bandwidth.

– Aggregate (sum or combine) data from multiple sources in the network, reducing the number of messages sent.

– Compress gradients (used in AI training) to shrink the size of messages, making things faster.
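The description does not say how the router compresses gradients. One common lossy technique, used here purely as an assumption, is casting 32-bit floats to 16-bit half precision, which halves the payload at the cost of some precision. Python's standard `struct` module supports half precision through the `e` format code.

```python
import struct
from typing import List


def compress_fp16(grad: List[float]) -> bytes:
    """Lossy compression: pack each value as IEEE 754 half precision (2 bytes).

    Values beyond the half-precision range (about +/-65504) raise an error.
    """
    return struct.pack(f"<{len(grad)}e", *grad)


def decompress_fp16(payload: bytes) -> List[float]:
    """Unpack half-precision values back into Python floats."""
    return list(struct.unpack(f"<{len(payload) // 2}e", payload))


grad = [0.5, -1.25, 3.0, 0.1]
fp32 = struct.pack(f"<{len(grad)}f", *grad)  # uncompressed: 4 bytes per value
fp16 = compress_fp16(grad)
print(len(fp32), len(fp16))  # 16 8
print(decompress_fp16(fp16))  # 0.1 comes back only approximately
```

Values such as 0.5 survive exactly, while 0.1 returns as a nearby half-precision value; whether that loss is acceptable depends on the training job.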

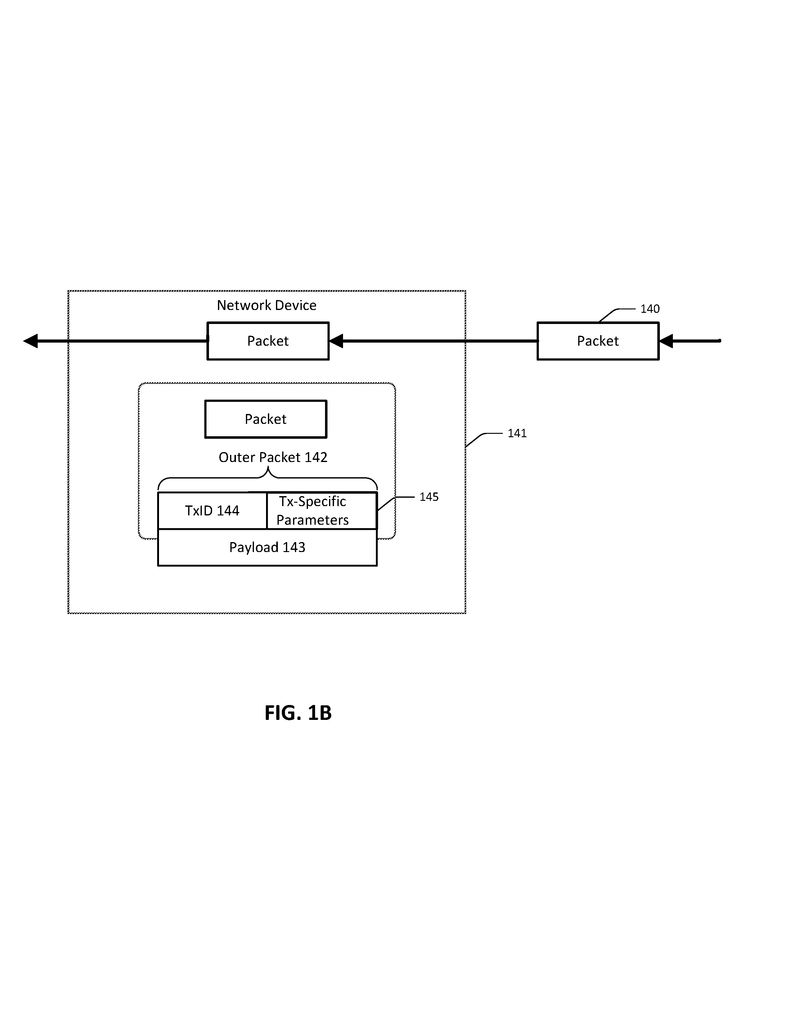

To make this work, the router relies on a new protocol for sending instructions. Packets include a header with a unique identifier (such as a VXLAN network identifier, or VNI) that tells the router which workload a packet belongs to and what primitive to perform. The SDN controller uses an API to program the router, so everything can be managed centrally and updated easily.
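For a concrete picture of such an identifier, the VXLAN header defined in RFC 7348 is 8 bytes: a flags byte (bit 0x08 marks a valid VNI), 24 reserved bits, a 24-bit VXLAN network identifier, and 8 more reserved bits. The sketch parses that header and then looks the VNI up in a table the control plane is assumed to have installed. The table, its VNI values, and the primitive names are hypothetical; this is not the patent's actual protocol.

```python
import struct
from typing import Dict

VXLAN_FLAG_I = 0x08  # RFC 7348 "I" flag: the VNI field is valid


def build_vxlan_header(vni: int) -> bytes:
    """Build an 8-byte VXLAN header carrying a 24-bit VNI."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # First 32-bit word: flags byte + 24 reserved bits.
    # Second 32-bit word: 24-bit VNI + 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI from the first 8 bytes of a VXLAN header."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_I:
        raise ValueError("VNI flag not set")
    return vni_word >> 8


# Hypothetical table pushed by the SDN controller: VNI -> primitive to run.
PRIMITIVE_BY_VNI: Dict[int, str] = {5001: "aggregate", 5002: "broadcast"}


def select_primitive(header: bytes) -> str:
    """Pick the primitive for a packet; unknown workloads are simply forwarded."""
    return PRIMITIVE_BY_VNI.get(parse_vni(header), "forward")


print(select_primitive(build_vxlan_header(5001)))  # aggregate
print(select_primitive(build_vxlan_header(42)))    # forward
```

Keying the decision on a field the router already parses keeps the per-packet lookup a single table hit.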

The system supports different types of transformations:

– One-to-one: A single packet is changed and sent on. For example, compressing data or changing a field.

– One-to-many: One packet is copied and sent to many destinations. For example, broadcasting a model update to all GPUs.

– Many-to-one: Multiple packets are combined into one. For example, adding together gradient updates from several servers.
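The three transformation shapes map onto three function signatures: packet to packet, packet to list of packets, and list of packets to packet. In this minimal sketch the `Packet` type, its fields, and element-wise summing as the combine rule are all assumptions for illustration. The usage composes many-to-one and one-to-many into an all-reduce, one way simple primitives can serve as building blocks for larger AI tasks.

```python
from dataclasses import dataclass, replace
from typing import List, Tuple


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    payload: Tuple[float, ...]  # e.g. a slice of gradient values


def one_to_one(pkt: Packet, new_dst: str) -> Packet:
    """One packet in, one packet out: here, rewrite a single header field."""
    return replace(pkt, dst=new_dst)


def one_to_many(pkt: Packet, group: List[str]) -> List[Packet]:
    """One packet in, many out: replicate it to every group member."""
    return [replace(pkt, dst=member) for member in group]


def many_to_one(pkts: List[Packet], src: str, dst: str) -> Packet:
    """Many packets in, one out: sum the payloads element-wise."""
    summed = tuple(sum(values) for values in zip(*(p.payload for p in pkts)))
    return Packet(src=src, dst=dst, payload=summed)


# Usage: an all-reduce built from many-to-one followed by one-to-many.
workers = ["gpu0", "gpu1", "gpu2"]
uploads = [Packet(w, "switch", (float(i), 1.0)) for i, w in enumerate(workers)]
total = many_to_one(uploads, src="switch", dst="group")
results = one_to_many(total, workers)
print([(p.dst, p.payload) for p in results])
# [('gpu0', (3.0, 3.0)), ('gpu1', (3.0, 3.0)), ('gpu2', (3.0, 3.0))]
print(one_to_one(uploads[0], "gpu9").dst)  # gpu9
```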

The enhanced router can be implemented in many ways. It could be a smart switch with data processing units (DPUs), a network interface card (NIC) with extra processing power, or a pool of programmable NICs. It can be deployed in different places in the data center network, like at the top of a rack or in the network core.

The invention also includes a standard API that lets different vendors and devices work together. This API allows the SDN control plane to send instructions, such as which primitive to use, when to use it, and what data to operate on. The matching logic can use simple rules, like “if the destination is a broadcast address, send this packet to all group members.” The router can hold partial results for a short time, combine them, and send out the finished product.
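The rule matching and hold-then-combine behavior described above might look roughly like this. Everything here is hypothetical: the `Rule` fields, the `install_rule` method standing in for the control-plane API, the addresses, and the choice to discard (rather than flush) partial sums that outlive their hold time. The patent's actual API and formats are not reproduced.

```python
import time
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set, Tuple

BROADCAST_ADDR = "ff:ff:ff:ff:ff:ff"  # hypothetical "whole group" destination
AGGREGATE_ADDR = "agg-service"        # hypothetical in-network aggregation target

Delivery = Tuple[str, List[float]]  # (destination host, payload)


@dataclass
class Rule:
    """One instruction from the SDN control plane (hypothetical format)."""
    workload_id: int
    match_dst: str                 # destination address the rule matches
    primitive: str                 # "broadcast" or "aggregate" in this sketch
    group: List[str]               # hosts taking part in the workload
    hold_timeout_s: float = 0.005  # how long partial sums may be held


@dataclass
class Partial:
    deadline: float
    running_sum: List[float]
    contributors: Set[str] = field(default_factory=set)


class RouterSketch:
    def __init__(self) -> None:
        self.rules: Dict[Tuple[int, str], Rule] = {}
        self.partials: Dict[int, Partial] = {}

    def install_rule(self, rule: Rule) -> None:
        """Stand-in for a control-plane API call: store a match -> action rule."""
        self.rules[(rule.workload_id, rule.match_dst)] = rule

    def handle(self, workload_id: int, src: str, dst: str,
               payload: List[float], now: Optional[float] = None) -> List[Delivery]:
        now = time.monotonic() if now is None else now
        rule = self.rules.get((workload_id, dst))
        if rule is None:
            return [(dst, payload)]  # no matching rule: ordinary forwarding
        if rule.primitive == "broadcast":
            # One-to-many: copy the packet to every other group member.
            return [(m, payload) for m in rule.group if m != src]
        if rule.primitive == "aggregate":
            if src not in rule.group:
                return []  # only group members may contribute
            part = self.partials.get(workload_id)
            if part is None:
                part = Partial(deadline=now + rule.hold_timeout_s,
                               running_sum=[0.0] * len(payload))
                self.partials[workload_id] = part
            if src in part.contributors:
                return []  # duplicate contribution: ignore it
            part.contributors.add(src)
            part.running_sum = [a + b for a, b in zip(part.running_sum, payload)]
            if len(part.contributors) < len(rule.group):
                return []  # hold the partial result until everyone has sent
            del self.partials[workload_id]
            return [(m, list(part.running_sum)) for m in rule.group]
        raise ValueError(f"unknown primitive {rule.primitive!r}")

    def expire(self, now: float) -> List[int]:
        """Discard partial sums held past their deadline; return the affected workloads."""
        stale = [wid for wid, part in self.partials.items() if now > part.deadline]
        for wid in stale:
            del self.partials[wid]
        return stale


router = RouterSketch()
hosts = ["h1", "h2", "h3"]
router.install_rule(Rule(7, BROADCAST_ADDR, "broadcast", hosts))
router.install_rule(Rule(7, AGGREGATE_ADDR, "aggregate", hosts))
print(router.handle(7, "h1", BROADCAST_ADDR, [1.0], now=0.0))
# [('h2', [1.0]), ('h3', [1.0])]
router.handle(7, "h1", AGGREGATE_ADDR, [1.0, 2.0], now=0.000)  # held
router.handle(7, "h2", AGGREGATE_ADDR, [3.0, 4.0], now=0.001)  # held
print(router.handle(7, "h3", AGGREGATE_ADDR, [5.0, 6.0], now=0.002))
# [('h1', [9.0, 12.0]), ('h2', [9.0, 12.0]), ('h3', [9.0, 12.0])]
```

Bounding the hold time keeps a slow or failed sender from pinning router memory; what happens to the discarded contributions (for example, falling back to endpoint-based aggregation) is a policy choice this sketch does not model.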

For AI workloads, this means faster training, less network traffic, and better use of expensive hardware like GPUs. By moving some work into the network, the system avoids sending the same data over and over, cuts delays, and speeds up results. It also works for other types of workloads, not just AI, making it a flexible tool for modern data centers.

In summary, the key innovations are:

– In-network execution of primitives: The router can perform basic operations on data packets, not just pass them along.

– Flexible API and protocol: The system uses a standard way to tell routers what to do, making it easy to manage and update.

– Support for different transformations: The router can handle one-to-one, one-to-many, and many-to-one operations, covering a wide range of tasks.

– Integration with SDN: The system fits into modern SDN-managed data centers, allowing central control and automation.

This approach unlocks new possibilities for speeding up AI workloads, saving bandwidth, and making data centers more efficient.

Conclusion

Data centers are the backbone of today’s digital world, and AI workloads are pushing their limits. The enhanced router described in this patent offers a fresh solution by performing key operations inside the network itself. By supporting flexible primitives, using a smart API, and working hand-in-hand with SDN, this invention can make AI training and other heavy workloads much faster and more efficient. The result is a smarter, more agile data center that can keep up with the demands of AI and cloud computing, helping businesses save time, cut costs, and deliver better services to everyone.

To read the full application, visit https://ppubs.uspto.gov/pubwebapp/ and search for 20250219943.