Invented by Rank; Schuyler K., Kleiman; Laura J., Peterson; Patrick M.

Machine learning is changing how computers understand human language. But making these systems better is not easy. A new patent application shows a smart way to improve these models by comparing them, using both artificial intelligence and human help. In this article, we’ll explain the background, the science behind this idea, and what makes this invention special.

Background and Market Context

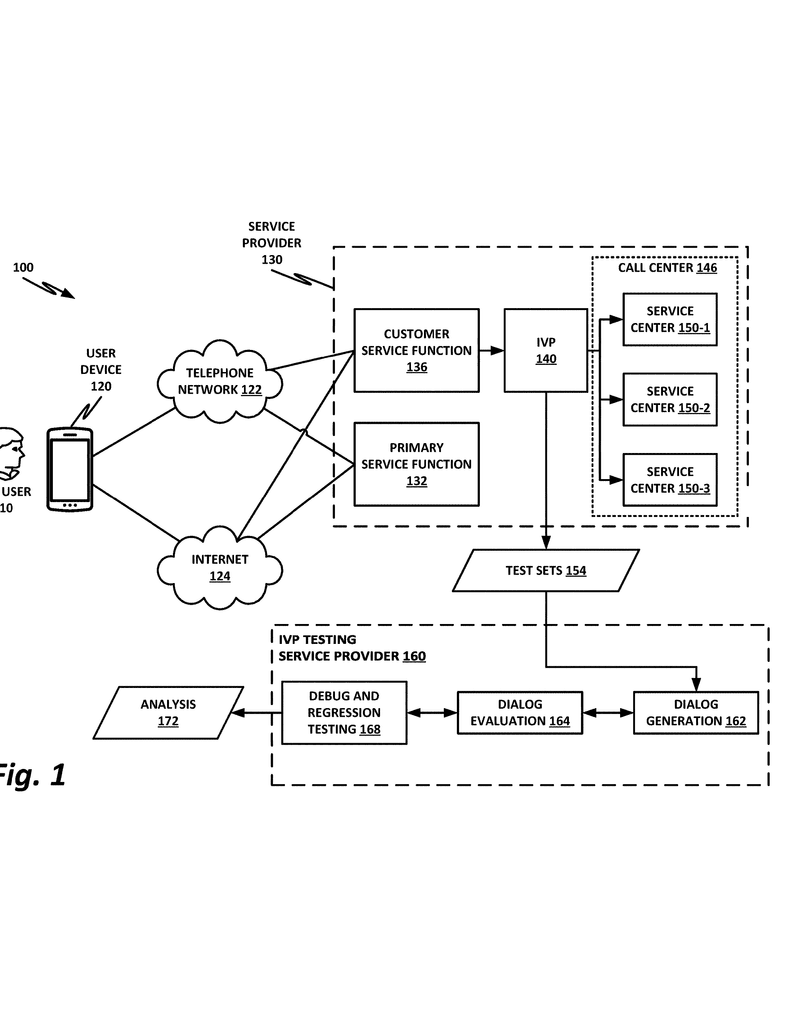

Every day, millions of people call customer service, talk to virtual assistants, or chat with online bots. These systems try to understand what people want, using machine learning (ML) models trained to guess a person’s “intent” from what they say or type. For example, if you call your bank and say, “I lost my card,” the system should know you want to report a lost card and direct you to the right help.

These ML models are everywhere—in your phone’s voice assistant, in the chatbots of shopping sites, and in call centers. Their job is to make sure people get help quickly, without getting stuck in endless menus or talking to the wrong person. The better the model, the happier the customers and the more money companies save.

But it’s hard to make these models work well. People don’t always say things the same way. One person might say, “I need a new card,” while someone else says, “My credit card is gone.” Both mean the same thing, but the computer has to learn to catch these differences.

Training these models takes a lot of work. Experts look at real customer conversations and write example phrases—called “prompts”—to help the model learn. The more examples for each intent (like “report lost card” or “ask about balance”), the better the model gets. In big systems, there can be dozens or even hundreds of different intents, each needing many examples. This means thousands of prompts to create, check, and use for training.

To make things easier, companies now use artificial intelligence tools like large language models (LLMs) to help generate these prompts. But these tools aren’t perfect—they can repeat the same style or miss how real people talk. Human experts still need to review and edit what the AI creates.

Once a new version of a model is trained, it’s important to check if it’s actually better than the old one. Testing every single prompt takes too much time. This is where the new patent idea comes in: compare the old and new models side by side, but only focus on the prompts where they disagree. This smart shortcut lets human reviewers work faster, focusing only on the tricky cases that really matter.

This invention matters because companies everywhere want to make their voice and chat systems smarter and more helpful. Every improvement means fewer annoyed customers, less time wasted, and more efficient service. As more people interact with computers through speech and text, the need for better ML models keeps growing.

Scientific Rationale and Prior Art

Machine learning models, especially those used for understanding language, work by learning from lots of examples. These examples show the model what kinds of words or phrases mean which “intent.” For example, “I want to cancel my flight” and “Please stop my booking” might both mean the same thing and should be tagged with the same intent.

Traditionally, experts build up huge collections of these example prompts by listening to real conversations or imagining what people might say. They then train the ML model using these examples, tweaking it over time as new ways of saying things come up. Sometimes, the model is retrained with even more examples, or with newly discovered ways that customers ask for help.

Earlier methods for improving these models have a few common features:

First, they rely heavily on human labor. Experts must listen, write, and tag thousands of prompts. This is slow and can be boring, leading to mistakes or lack of variety.

Second, as models get bigger and cover more intents, the number of examples needed explodes. It’s hard for even teams of experts to cover all the different ways people might speak or type.

Third, when a new model is trained, it’s not easy to tell if it’s better than the old one. Companies often test both models on lots of prompts, but reviewing every single result is not practical.

Recently, some tools have used generative AI—like large language models—to suggest more example prompts. These systems can quickly make up new ways of saying things, but sometimes their examples are too similar or not realistic. Human experts still have to guide the AI, ask for more variety, and throw out weird or unlikely prompts.

Comparing two models is also not new. In software, “A/B testing” is common, where two versions are tested to see which works better. But with language models, the problem is trickier. If both models agree on most prompts, reviewing every answer is a waste of time. The new patent’s trick is to only look at the cases where the old and new model give different answers. This focuses the human review on the most important, difficult, or ambiguous cases.

Before this patent, most systems either compared all results or relied on automatic metrics, which might miss subtle mistakes. No one had put together a system that uses both AI to help generate prompts and a smart comparison method to speed up human evaluation.

The result is a more efficient way to improve models, making the process faster, less expensive, and more accurate. This is important as voice and chat systems become more common and more complex.

Invention Description and Key Innovations

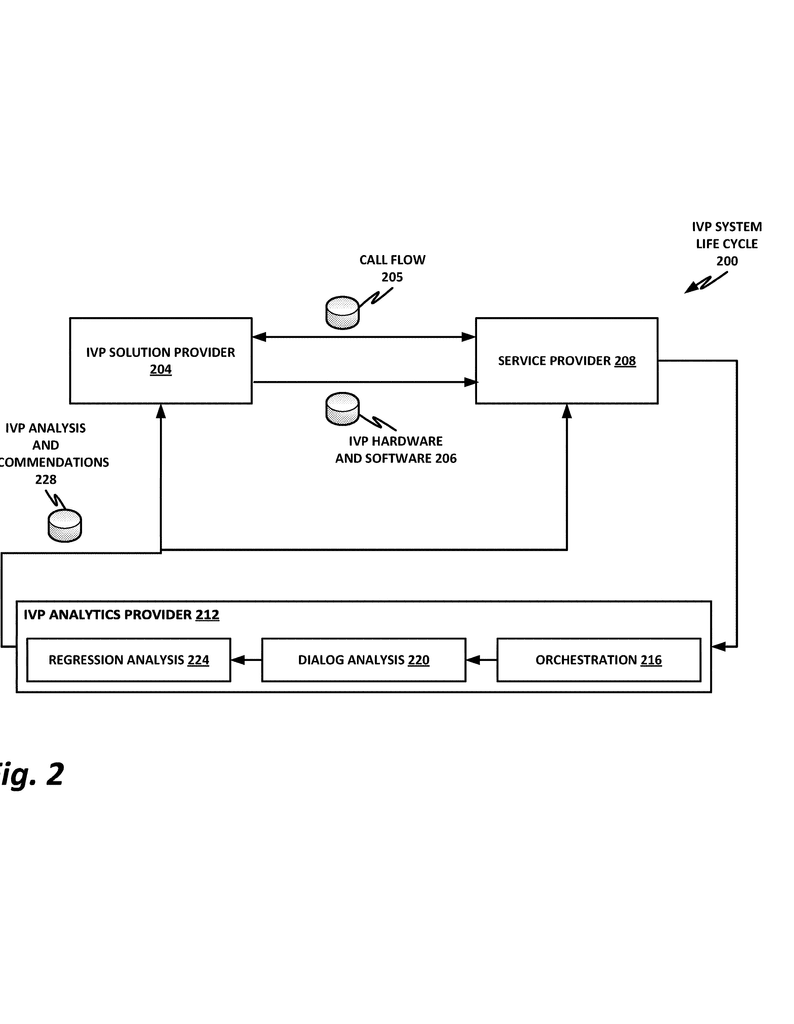

This invention is about a new way to improve ML models that guess what people mean when they talk or type. The system is built to work with any kind of ML intent model, like those used in voice assistants, chatbots, or call center IVR systems.

Here’s how the process works, step by step, in simple terms:

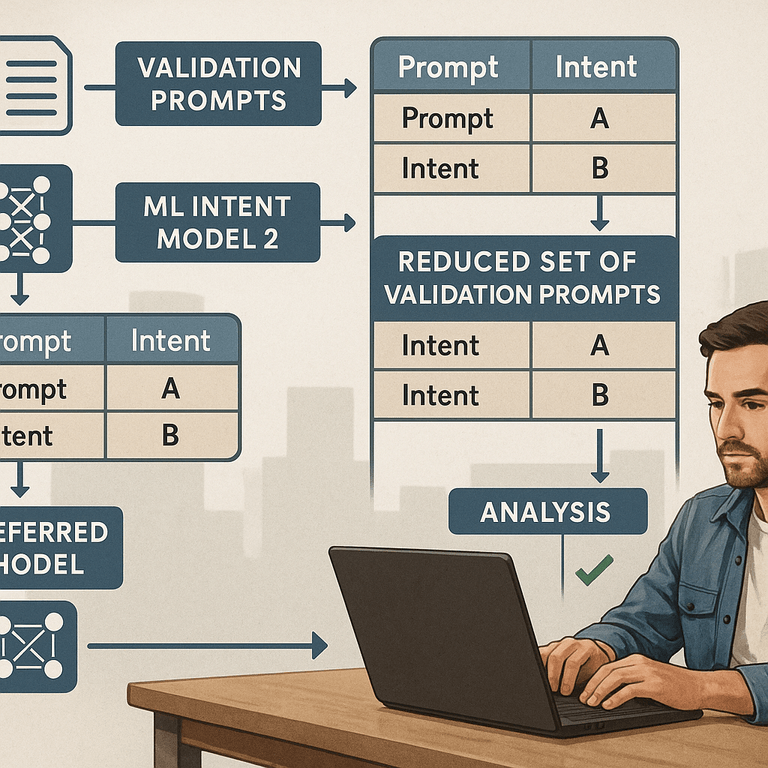

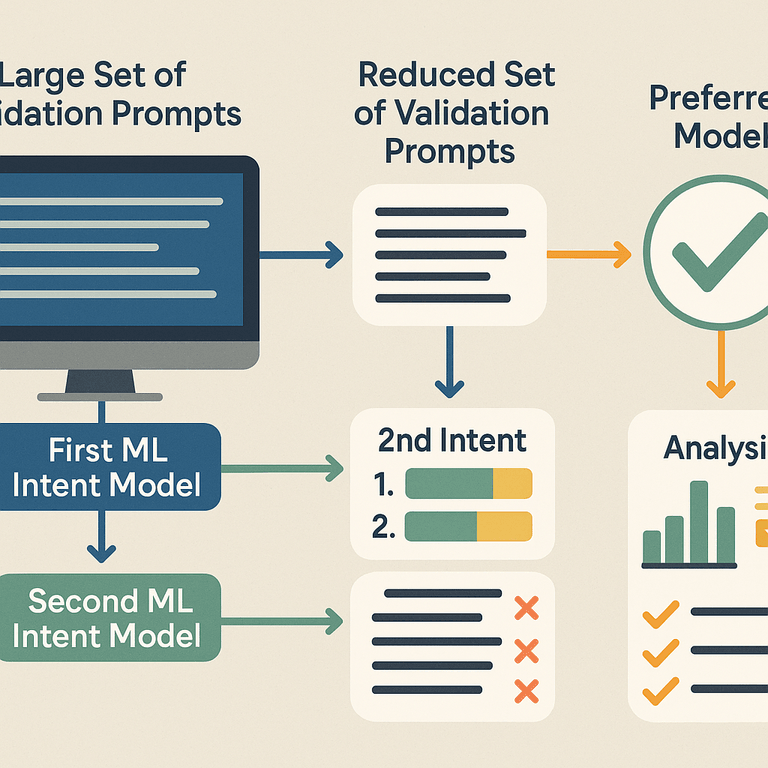

First, a large set of “validation prompts” is created. These are real or made-up examples of things people might say or type. They can be gathered from past customer conversations, made by humans, or generated with the help of a large language model (LLM) like ChatGPT.

The old version of the ML model is tested with all these prompts. For each prompt, the model picks which “intent” it thinks matches what the user wants.

Next, the new version of the ML model (which has been retrained with more or better prompts) is tested on the same set of prompts. Again, it picks an intent for each one.

Now comes the clever part. The system checks where the old and new models gave different answers. If both models agree, there’s no need to check those cases—either both are right or both are wrong, but changing the model won’t help. The focus is only on the “disagreement cases,” a much smaller set.

A human analyst now reviews just these disagreement cases. For each, they decide which model made the right call—or if neither did.

The model that gets more “hits” (correct answers in these tricky cases) is picked as the better model. If the new model does better, it becomes the main model. If the old one does better, it stays in use.

This whole process can be repeated again and again, as new prompts are added and models are updated.

What makes this invention stand out are several smart details:

– The use of AI (large language models) to help create many different example prompts, saving human time and making sure there’s lots of variety. But the system also lets human experts guide the AI, asking for more creative or realistic examples and throwing out any that don’t make sense.

– The comparison method focuses human effort where it matters most—on the prompts where the old and new models disagree. This cuts down review time a lot, sometimes to just 10-20% of the total prompts.

– The method works for both voice-based and text-based systems, and for any kind of intent model.

– The process can use either supervised or unsupervised training, making it flexible for different kinds of ML models.

– The system is built to handle large numbers of intents (50 or more is mentioned), with each intent supported by lots of example prompts (again, 50 or more per intent).

– The invention also supports generating and validating “entities” (like names, dates, or product types) using both AI and human review.

– The method can be implemented in software, on any suitable hardware, or in virtualized or containerized environments, making it easy to fit into modern IT setups.

The real-world result is a process that gets better models faster, with less human labor and fewer mistakes. Companies can keep their voice and chat systems up to date, quickly responding as people’s ways of speaking change.

This system is not just for big call centers. Any company that uses ML to understand what people want—banks, airlines, online stores, tech support, and more—can benefit. The invention is flexible, efficient, and ready for the real world.

Conclusion

Improving machine learning models for understanding human language is a big challenge. This patent shows a practical, smart way to make the process faster and better. By using both AI and human review, and by focusing on the most important prompts, companies can build systems that serve customers more accurately and with less effort.

As more of our daily life involves talking or typing to computers, these kinds of inventions will be key to making technology easier and friendlier for everyone. This method is a step forward, offering a clear path to better, more reliable ML-powered voice and chat systems.

If you are building or improving a voice assistant, chatbot, or any service that relies on understanding what people want, this invention’s approach can make your work easier and your results better.

Click here https://ppubs.uspto.gov/pubwebapp/ and search 20250218430.