Invented by Wilka Carvalho, Bryant Chen, Benjamin J. Edwards, Taesung Lee, Ian M. Molloy, Jialong Zhang, International Business Machines Corp

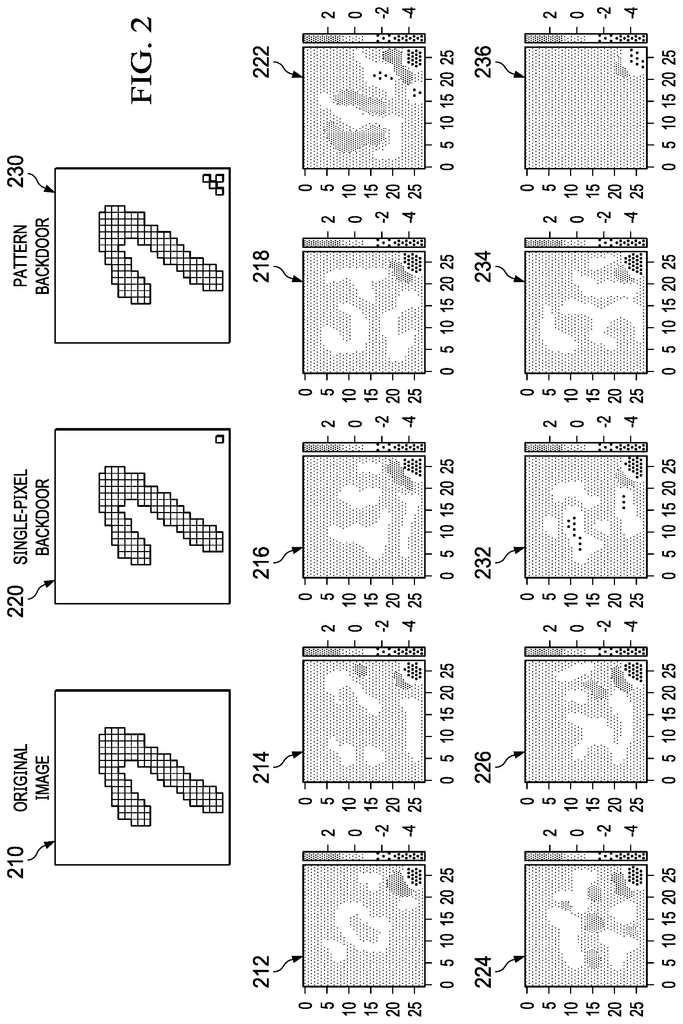

To address the threat of backdoors in trained models, researchers have been exploring the use of gradients to detect backdoors within neural networks. A gradient is the vector of partial derivatives of a function, describing how the function's output changes as each of its inputs changes. In the context of neural networks, gradients of a loss function are used during training to optimize the network's performance by adjusting its weights and biases.

The idea behind using gradients to detect backdoors is that a backdoor will cause a significant change in the gradients of the network. This change can be detected by analyzing the gradients of the network during training and testing. If a significant change is detected, it may indicate the presence of a backdoor.
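
To make "the gradients of the network" concrete with respect to its input, here is a minimal sketch that uses a toy single-layer logistic classifier as a stand-in for a neural network. The model choice and all function names are assumptions for illustration, not taken from the patent. It computes the gradient of the binary cross-entropy loss with respect to each input element analytically, as `(p - y) * w`, and checks it against central finite differences. Input elements with large gradient entries are the ones that most influence the output, which is the kind of signal a gradient-based backdoor detector inspects.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    """Probability of class 1 for a toy logistic model (stand-in for a network)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def loss(w, b, x, y):
    """Binary cross-entropy loss for one sample with label y in {0, 1}."""
    p = predict_proba(w, b, x)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def input_gradient(w, b, x, y):
    """Analytic gradient dL/dx of the loss with respect to the input: (p - y) * w."""
    p = predict_proba(w, b, x)
    return [(p - y) * wi for wi in w]

def numeric_gradient(w, b, x, y, eps=1e-6):
    """Central finite differences, used only to check the analytic gradient."""
    grad = []
    for i in range(len(x)):
        xp, xm = list(x), list(x)
        xp[i] += eps
        xm[i] -= eps
        grad.append((loss(w, b, xp, y) - loss(w, b, xm, y)) / (2 * eps))
    return grad

if __name__ == "__main__":
    w, b, x, y = [0.5, -1.0, 2.0], 0.1, [1.0, 0.5, -0.5], 1
    print("analytic:", input_gradient(w, b, x, y))
    print("numeric: ", numeric_gradient(w, b, x, y))
```

In a real deep network the same input gradient would come from automatic differentiation rather than a closed-form expression.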

Several studies have been conducted to test the effectiveness of using gradients to detect backdoors. One such study, conducted by researchers at the University of California, Berkeley, found that the method was able to detect backdoors with an accuracy of up to 98%. The study also found that the method was able to detect backdoors that were inserted using various techniques, including gradient masking and weight perturbation.

The market for using gradients to detect backdoors within neural networks is still in its early stages. However, as the use of neural networks continues to grow, the need for effective backdoor detection methods will become increasingly important. This is particularly true in applications where the consequences of a backdoor being exploited could be severe, such as in autonomous vehicles or medical devices.

There are several companies and organizations that are already working on developing and commercializing backdoor detection methods using gradients. One such company is Deep Instinct, which offers a deep learning-based cybersecurity platform that includes backdoor detection capabilities. Another is the Defense Advanced Research Projects Agency (DARPA), which has launched a program called Guaranteeing AI Robustness against Deception (GARD) that aims to develop methods for detecting and mitigating backdoors in neural networks.

In conclusion, the market for using gradients to detect backdoors within neural networks is still in its early stages, but it has the potential to become an important area of research and development in the field of cybersecurity. As the use of neural networks continues to grow, the need for effective backdoor detection methods will become increasingly important, and companies and organizations that are able to develop and commercialize these methods will be well-positioned to succeed in this emerging market.

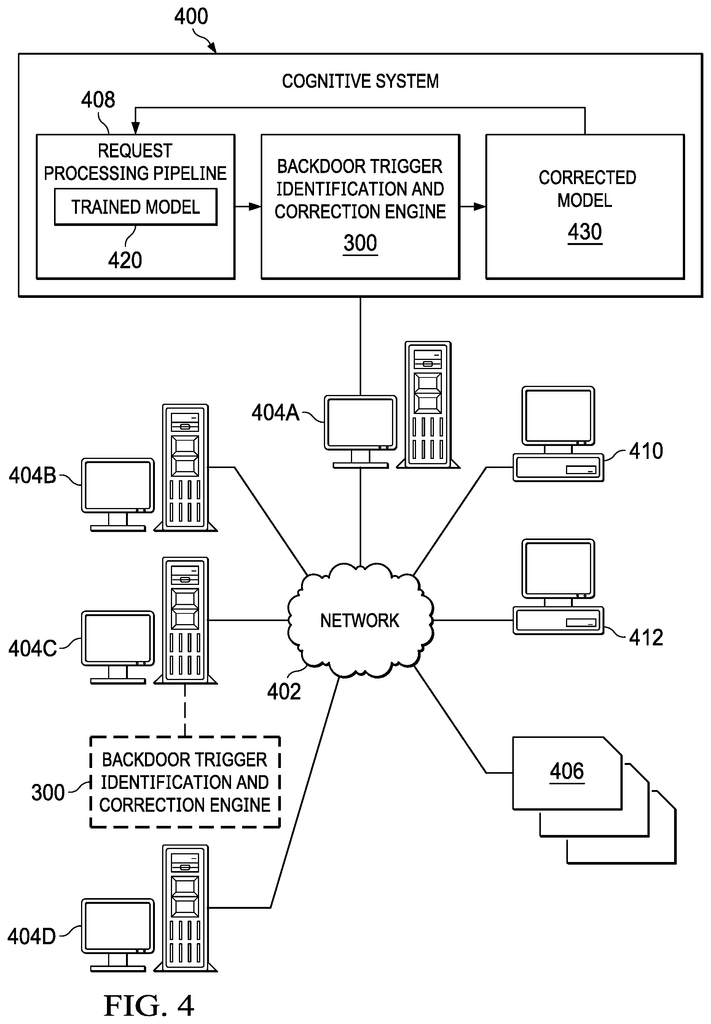

The International Business Machines Corp invention works as follows

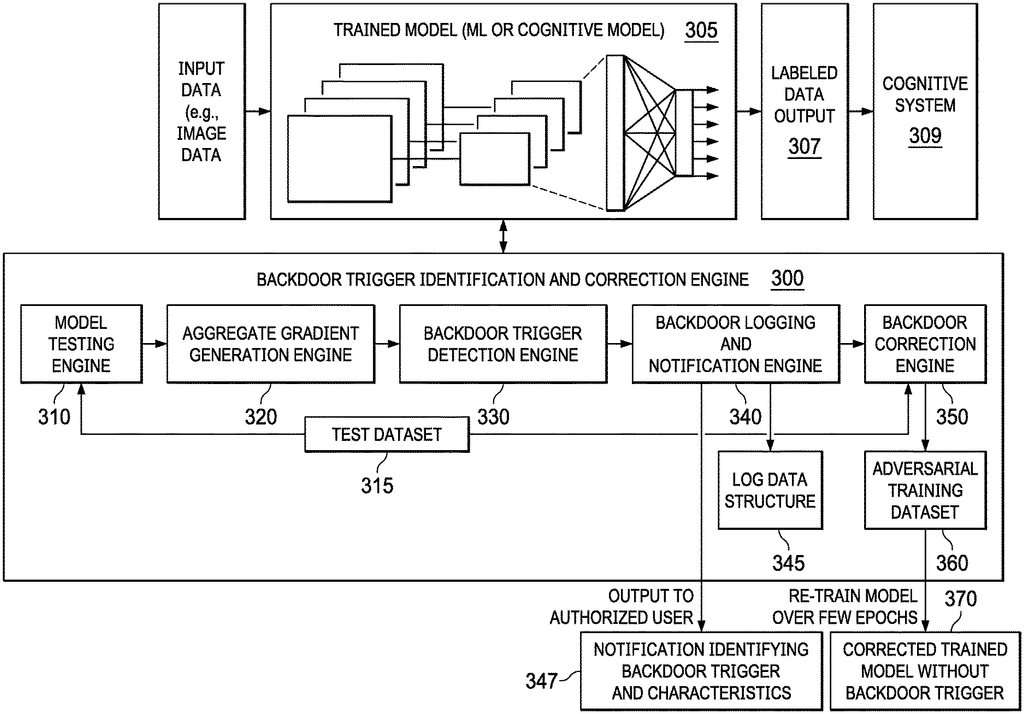

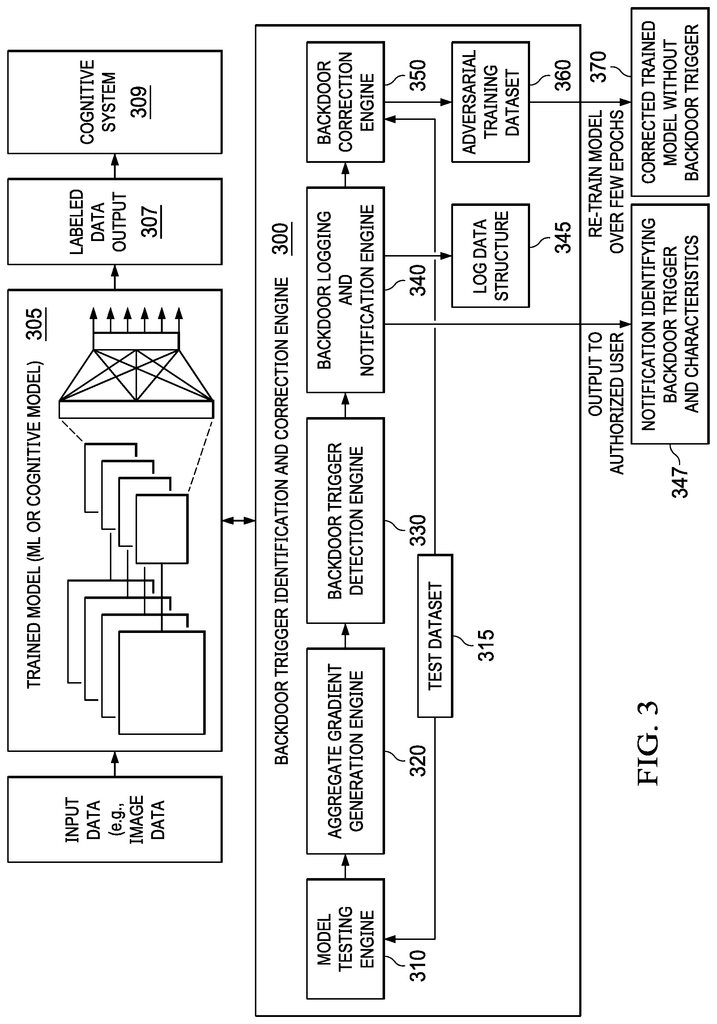

Mechanisms for evaluating a trained machine learning model are provided to determine whether the model has a backdoor trigger. The mechanisms process a test data set with the model to generate output classifications and then generate gradient data for that test data set based on those outputs. The mechanisms analyze the gradient data to identify patterns of elements within the test data set that are indicative of a backdoor trigger. In response to the analysis identifying such a pattern, the mechanisms generate an output indicating the presence of a backdoor trigger in the machine learning model.

Background for Using gradients to detect backdoors within neural networks

The present application relates generally to an improved data processing apparatus and method, and more specifically to mechanisms for using gradients to detect backdoors in neural networks.

Deep learning is part of a broader family of machine learning methods based on learning data representations, as opposed to task-specific algorithms. Some representations are loosely based on interpretations of information processing and communication patterns in biological nervous systems, such as neural coding, which attempts to define relationships between stimuli and neuronal responses in the brain. Research in this area aims to create efficient systems that learn these representations from large, unlabeled data sets.

Deep learning architectures, such as deep neural networks, deep belief networks, and recurrent neural networks, have been applied to fields including computer vision, speech recognition, natural language processing, audio recognition, social network filtering, machine translation, and bioinformatics, where they have produced results comparable to, and in some cases superior to, those of human experts.

Neural-network-based deep learning is a class of machine learning algorithms that use a cascade of many layers of nonlinear processing units for feature extraction and transformation. Each successive layer takes the output of the previous layer as its input. These algorithms may be supervised or unsupervised, and their applications include pattern analysis (unsupervised) and classification (supervised). Neural-network-based deep learning relies on learning multiple levels of features or representations of the data, with higher-level features derived from lower-level features to form a hierarchy. The composition of the nonlinear layers in a given neural network depends on the problem to be solved. Layers used in deep learning include the hidden layers of an artificial neural network and sets of complex propositional formulas. They may also include latent variables organized layer-wise in deep generative models, such as the nodes in deep belief networks and deep Boltzmann machines.
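
The layer cascade described above, where each layer consumes the previous layer's output, can be sketched in a few lines of plain Python. This is a hypothetical minimal illustration, assuming fully connected layers with ReLU nonlinearities between them; the function names and weights are arbitrary and nothing here comes from the patent.

```python
def dense(x, weights, bias):
    """One fully connected layer: weights[j] is the weight row for output unit j."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def relu(v):
    """Elementwise nonlinearity applied between layers."""
    return [max(0.0, a) for a in v]

def forward(x, layers):
    """Feed x through a cascade of (weights, bias) layers.

    Each layer takes the previous layer's output as its input; ReLU is applied
    after every layer except the last, which returns raw scores.
    """
    h = x
    for k, (w, b) in enumerate(layers):
        h = dense(h, w, b)
        if k < len(layers) - 1:
            h = relu(h)
    return h

# Usage: a 2-input network with one hidden layer of 2 units and 1 output.
layers = [([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]), ([[1.0, 1.0]], [0.0])]
print(forward([2.0, 1.0], layers))
```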

This Summary is provided to introduce a selection of concepts in a simplified form that are further described in the Detailed Description. This Summary is not intended to identify key features or essential features of the claimed subject matter, nor is it intended to be used to limit the scope of the claimed subject matter.

In one illustrative example, a method for evaluating a trained machine learning model is provided in a data processing system that includes a processor and a memory. The memory contains instructions that the processor executes to configure the processor to implement a backdoor trigger identification engine, which determines whether the trained machine learning model has a backdoor trigger. The method involves processing a test data set with the trained machine learning model to generate output classifications, and then generating, by the backdoor trigger identification engine, gradient data for the test data set based on the outputs generated from processing it. The backdoor trigger identification engine analyzes the gradient data to identify a pattern of elements within the test data set that is indicative of a backdoor trigger. In response to the analysis identifying such a pattern, the engine generates an output indicating the presence of the backdoor trigger in the machine learning model.
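
The four steps of this method (classify, compute gradients, analyze, report) can be mirrored in a toy engine. This is a sketch under stated assumptions, not IBM's implementation: the model is a binary logistic classifier, gradients are taken with respect to the input toward the opposite of each predicted label, and the analysis simply flags input elements whose mean gradient magnitude exceeds a hypothetical `factor` times the median across elements. The class, method, and parameter names are illustrative only.

```python
import math
from statistics import mean, median

class BackdoorTriggerIdentificationEngine:
    """Toy sketch: assumes a binary logistic model (weights w, bias b) and a
    magnitude-threshold analysis; neither detail is taken from the patent."""

    def __init__(self, w, b, factor=3.0):
        self.w, self.b, self.factor = w, b, factor

    def _prob(self, x):
        z = sum(wi * xi for wi, xi in zip(self.w, x)) + self.b
        return 1.0 / (1.0 + math.exp(-z))

    def classify(self, test_set):
        # Step 1: output classifications for the test data set.
        return [1 if self._prob(x) >= 0.5 else 0 for x in test_set]

    def gradient_data(self, test_set, labels):
        # Step 2: gradient of the cross-entropy loss w.r.t. each input,
        # computed toward the opposite label ("what would flip this output?").
        grads = []
        for x, y in zip(test_set, labels):
            target = 1 - y
            p = self._prob(x)
            grads.append([(p - target) * wi for wi in self.w])
        return grads

    def analyze(self, grads):
        # Step 3: flag input elements whose mean gradient magnitude across the
        # test set is far above the typical (median) element.
        n = len(grads[0])
        avg = [mean(abs(g[i]) for g in grads) for i in range(n)]
        typical = median(avg)
        return [i for i, m in enumerate(avg) if m > self.factor * typical]

    def evaluate(self, test_set):
        # Step 4: report whether a candidate trigger pattern was found.
        labels = self.classify(test_set)
        suspicious = self.analyze(self.gradient_data(test_set, labels))
        return {"backdoor_suspected": bool(suspicious), "elements": suspicious}

# Usage: element 2 has a disproportionately large weight in this toy model.
test_set = [[0.1, 0.2, 0.1, 0.3], [0.5, 0.1, 0.1, 0.2], [0.3, 0.4, 0.1, 0.1]]
engine = BackdoorTriggerIdentificationEngine([0.2, -0.1, 5.0, 0.15], 0.0)
print(engine.evaluate(test_set))
```

A real detector would work with a deep network's autodiff gradients and a more robust analysis; the median-based threshold here is only a simple way to show how a small, consistent set of high-influence elements can stand out.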

In other illustrative examples, a computer program product is provided that comprises a computer-readable medium having a computer-readable program. When executed on a computing device, the computer-readable program causes the device to perform various ones of, and combinations of, the operations described above with regard to the method illustrative embodiment.

In yet another illustrative example, a system/apparatus is provided. The system/apparatus may comprise one or more processors and a memory coupled to the one or more processors. The memory may contain instructions that, when executed by the one or more processors, cause them to perform various ones of, and combinations of, the operations described above with regard to the method illustrative embodiment.

The following detailed description will show the features and benefits of the invention.

BRIEF DESCRIPTION OF THE DRAWINGS

The invention, as well as a preferred mode of use and further objectives and advantages thereof, will best be understood by reference to the following detailed description of illustrative embodiments when read in conjunction with the accompanying drawings.

FIG. 6 is a flowchart outlining an example operation for identifying and correcting a backdoor trigger present in a trained model in accordance with one illustrative embodiment.

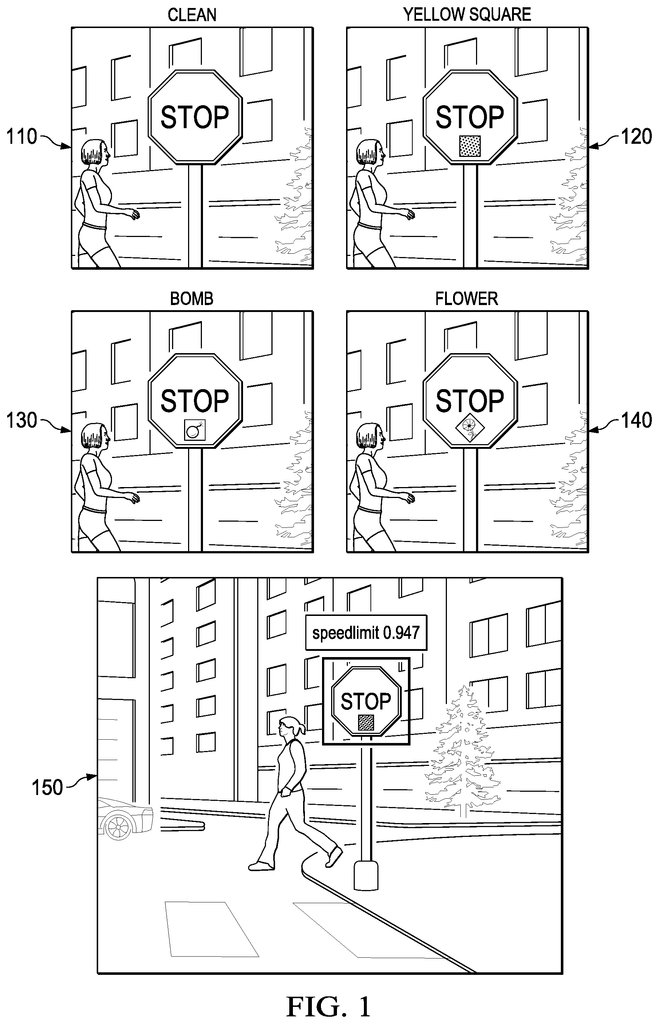

Many cognitive systems use trained cognitive models or machine learning models, such as neural networks, to perform their cognitive operations. Machine learning or cognitive models can be used, for example, for image analysis, facial or fingerprint recognition, and speech pattern analysis in cognitive security systems such as surveillance systems or biometric authentication systems. More recently, machine learning and cognitive models have been used in vehicle systems for collision avoidance, navigation, and autonomous driving. Machine learning or cognitive models can be implemented in many different ways, including, but not limited to, neural networks, deep learning systems, deep learning neural networks, and other similar technologies.

In many cases, machine learning or cognitive models are used in, or in conjunction with, cognitive systems to perform a classification function, on which the cognitive system then bases more complex reasoning or analysis. In an autonomous vehicle navigation system, for example, the machine learning model classifies captured images into object classes so that the system can discern which objects are present in the environment and make navigation decisions that ensure the safety and drivability of the vehicle.

Training these cognitive or machine learning models (hereafter called “models”) requires a large amount of training data: producing the right outputs (e.g., correct classifications) takes a lot of data and a lot of training time. Many models are therefore trained with training data or training operations that have been outsourced in whole or in part. This leaves a security hole through which an intruder could create a “backdoor” in the model, since the training data used and the training performed determine how the model behaves.
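
One widely studied way a training-data backdoor is planted is trigger stamping: a small fixed pattern is written into a fraction of the training samples and their labels are changed to an attacker-chosen class, so the trained model learns to associate the pattern with that class. The sketch below is a hypothetical illustration of that poisoning step only; the function name, the dict-based trigger format, and the `rate` and `seed` parameters are assumptions, not details from the patent.

```python
import random

def poison(dataset, trigger, target_label, rate=0.1, seed=0):
    """Return a new list in which roughly `rate` of the samples carry the trigger.

    dataset: list of (features, label) pairs, features being a list of numbers.
    trigger: dict mapping feature index -> value to stamp (hypothetical format).
    Poisoned samples are copied before modification and relabeled to
    target_label; the input dataset is never mutated.
    """
    rng = random.Random(seed)  # seeded so the poisoned subset is reproducible
    poisoned = []
    for x, y in dataset:
        if rng.random() < rate:
            x = list(x)
            for i, v in trigger.items():
                x[i] = v
            y = target_label
        poisoned.append((x, y))
    return poisoned

# Usage: stamp value 9 into feature 2 of every sample and relabel to class 1.
data = [([0, 0, 0], 0), ([1, 1, 1], 1)]
print(poison(data, {2: 9}, target_label=1, rate=1.0))
```

A model trained on such data can behave normally on clean inputs while misclassifying any input that carries the trigger, which is why the trigger is hard to spot from accuracy alone.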

Outsourcing the creation of training data and/or the training of the model may therefore be a security vulnerability that leads to the introduction of such “backdoors” into the generated model. Similar situations can arise even when training is not outsourced: conditions may exist that allow an intruder to introduce backdoors that cause misclassifications or improper operation of the model. Reuse of trained models can also lead to situations where a backdoor already present in a model carries over to another implementation or purpose, leaving the new application susceptible to it. Reusing a model that was trained for a different implementation or purpose may also require some additional training, typically less than was done for the original model, which can present further opportunities for introducing backdoors.
