Invented by Nicholas Edward Gillian, Jaime Lien, Patrick M. Amihood, Ivan Poupyrev, Google LLC

Machine learning algorithms play a crucial role in radar-based gesture detection systems as they enable the recognition and interpretation of different gestures performed by users. These algorithms are trained on large datasets to accurately identify and classify specific gestures, allowing users to control devices and systems through simple hand movements.

One of the key advantages of user-customizable machine learning in radar-based gesture detection is its ability to adapt to individual users’ unique gestures. By allowing users to train the machine learning algorithms with their own gestures, the system becomes more accurate and personalized, leading to a more seamless and intuitive user experience.

The market for user-customizable machine learning in radar-based gesture detection is driven by several factors. Firstly, the increasing adoption of touchless interfaces in various industries, including automotive, healthcare, and consumer electronics, is fueling the demand for advanced gesture recognition technologies. These industries are looking for ways to enhance user experience and improve safety by reducing the need for physical contact with devices.

Secondly, advances in radar technology have made radar sensors more affordable and accessible, enabling the integration of radar-based gesture detection systems into a wide range of devices and applications. This has opened up new opportunities for companies to develop innovative solutions that leverage machine learning algorithms to enable user-customizable gesture recognition.

Furthermore, the growing interest in personalized and customizable technologies is also driving the market for user-customizable machine learning in radar-based gesture detection. Users are increasingly seeking personalized experiences that cater to their specific needs and preferences. By allowing users to train the machine learning algorithms with their own gestures, companies can offer a more tailored and personalized user experience, leading to higher customer satisfaction and loyalty.

In terms of market players, several companies are actively involved in developing and commercializing user-customizable machine learning in radar-based gesture detection systems. These companies are investing in research and development to improve the accuracy and reliability of their algorithms, as well as to expand the range of gestures that can be recognized.

The market for user-customizable machine learning in radar-based gesture detection is expected to witness significant growth in the coming years. According to a report by MarketsandMarkets, the global gesture recognition market is projected to reach $32.3 billion by 2025, with a compound annual growth rate of 23.9% from 2020 to 2025. This growth is driven by the increasing demand for touchless interfaces, advancements in radar technology, and the rising trend of personalized and customizable technologies.

In conclusion, the market for user-customizable machine learning in radar-based gesture detection is experiencing rapid growth due to the increasing demand for touchless and intuitive human-machine interfaces. This technology allows users to train machine learning algorithms with their own gestures, leading to a more accurate and personalized gesture recognition system. With the advancements in radar technology and the growing interest in personalized technologies, the market for user-customizable machine learning in radar-based gesture detection is expected to continue expanding in the coming years.

The Google LLC invention works as follows

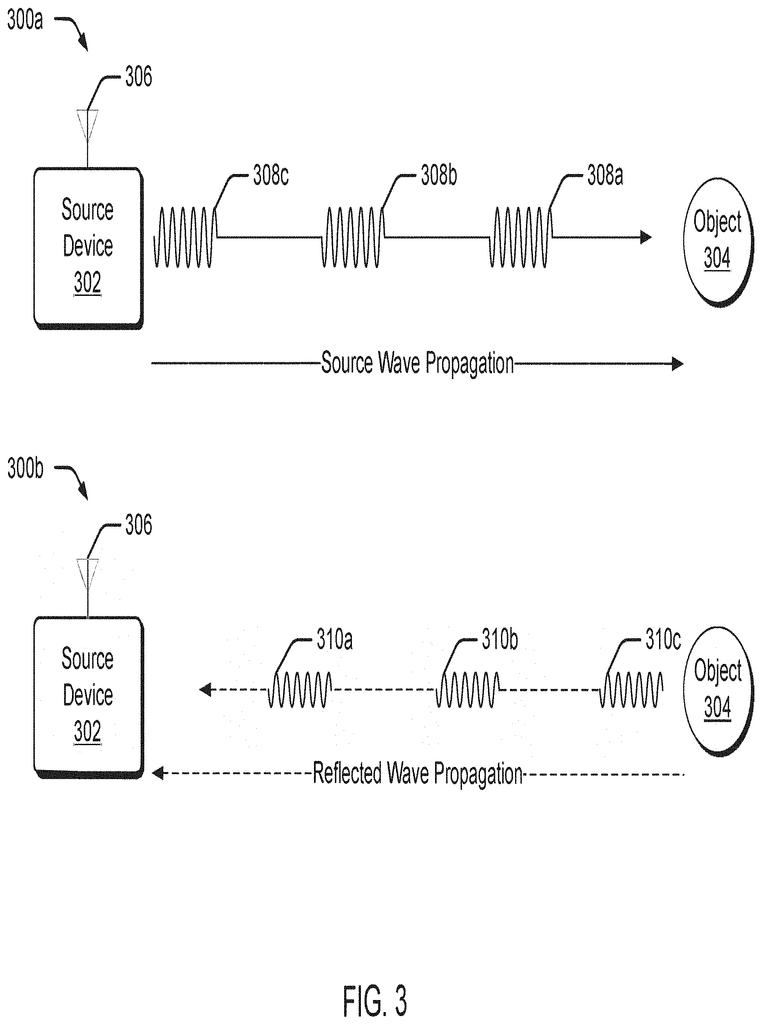

Various embodiments dynamically learn user-customizable input gestures. A user can switch a radar-based gesture detection system into a gesture-learning mode. The radar-based system then emits a radar field configured to detect a gesture that is new to it, receives incoming radio frequency (RF) signals generated when the outgoing radar field reflects off the gesture, and analyzes those RF signals to learn one or more identifying characteristics of the gesture. Once it has learned these characteristics, the radar-based system reconfigures a corresponding input-identification system so that it can detect the gesture when those characteristics are next identified.

Background for User-customizable machine-learning in radar-based gesture detection

This background description is intended to provide a general context for the disclosure. Unless otherwise indicated, the material described in this section is neither expressly nor impliedly admitted to be prior art to the present disclosure or the appended claims.

Computers and applications assign default input mechanisms to action responses as a means of interpreting user commands. For example, a word processor interprets pressing the space bar on a keyboard as a command to enter a blank space in a document. A user, however, may want to replace a default input with a custom interaction. For instance, a user may want to call for assistance but be unable to reach their mobile phone or to enter the predetermined input needed to place a call, or may have a disability that prevents them from entering that input. In such cases, predetermined inputs on a computing device can be restrictive and unsuitable for the user.

This Summary introduces a selection of concepts that are further described in the Detailed Description. This Summary is not intended to identify key or essential features of the claimed subject matter.

A user can train a radar-based gesture detection system to dynamically learn user-customizable gestures. In some cases, a user can perform a gesture that is unknown to the radar-based gesture detection system without touching or holding a device coupled to that system. The radar-based system can then learn one or more identifying characteristics of the gesture and build a machine learning model that it can later use to identify the gesture.

Overview

Various embodiments dynamically learn user-customizable input gestures. A user can switch a radar-based gesture detection system into a gesture-learning mode. The radar-based system then emits a radar field configured to detect a new hand gesture, receives the incoming RF signals generated when the outgoing radar field reflects off that gesture, and analyzes those RF signals to identify one or more characteristics of the new hand gesture. Using these characteristics, the radar-based system reconfigures a corresponding input-identification system so that it can detect the new gesture in the future.
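The learning-mode flow described above can be sketched as a small state machine. This is a minimal sketch under stated assumptions: the class and method names (`RadarGestureSystem`, `enter_learning_mode`, `InputIdentificationSystem`) are hypothetical, the "characteristics" are a toy pair of magnitude statistics, and nearest-template matching stands in for whatever machine learning model an actual implementation would train.

```python
import math
from dataclasses import dataclass, field
from enum import Enum, auto


class Mode(Enum):
    DETECTION = auto()
    LEARNING = auto()


@dataclass
class InputIdentificationSystem:
    """Hypothetical store of learned gestures: name -> feature template."""
    templates: dict = field(default_factory=dict)
    threshold: float = 1.0  # assumed maximum distance that still counts as a match

    def identify(self, features):
        best_name, best_dist = None, math.inf
        for name, template in self.templates.items():
            dist = math.dist(features, template)
            if dist < best_dist:
                best_name, best_dist = name, dist
        return best_name if best_dist <= self.threshold else None


class RadarGestureSystem:
    """Toy model of switching into and out of a gesture-learning mode."""

    def __init__(self):
        self.mode = Mode.DETECTION
        self.identifier = InputIdentificationSystem()
        self._pending = None  # name of the gesture being learned

    def enter_learning_mode(self, gesture_name):
        self.mode = Mode.LEARNING
        self._pending = gesture_name

    def extract_characteristics(self, rf_samples):
        # Placeholder feature extractor (assumption): mean and peak magnitude.
        if not rf_samples:
            raise ValueError("no RF samples to analyze")
        mags = [abs(s) for s in rf_samples]
        return (sum(mags) / len(mags), max(mags))

    def process(self, rf_samples):
        features = self.extract_characteristics(rf_samples)
        if self.mode is Mode.LEARNING:
            # Reconfigure the identification system, then leave learning mode.
            self.identifier.templates[self._pending] = features
            self.mode = Mode.DETECTION
            self._pending = None
            return None
        return self.identifier.identify(features)
```

In use, a caller would switch to learning mode, feed one captured gesture, and then query later captures; a capture whose features fall within the assumed `threshold` of the stored template is reported by name, and anything else returns `None`.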

In the discussion that follows, an example environment in which various embodiments can be used is described first. The discussion then turns to RF signal properties that can be used in one or more embodiments, followed by a description of dynamically learning user-customizable gestures. Finally, an example device that can employ various embodiments of machine learning of user-customizable input gestures is described.

Example Environment

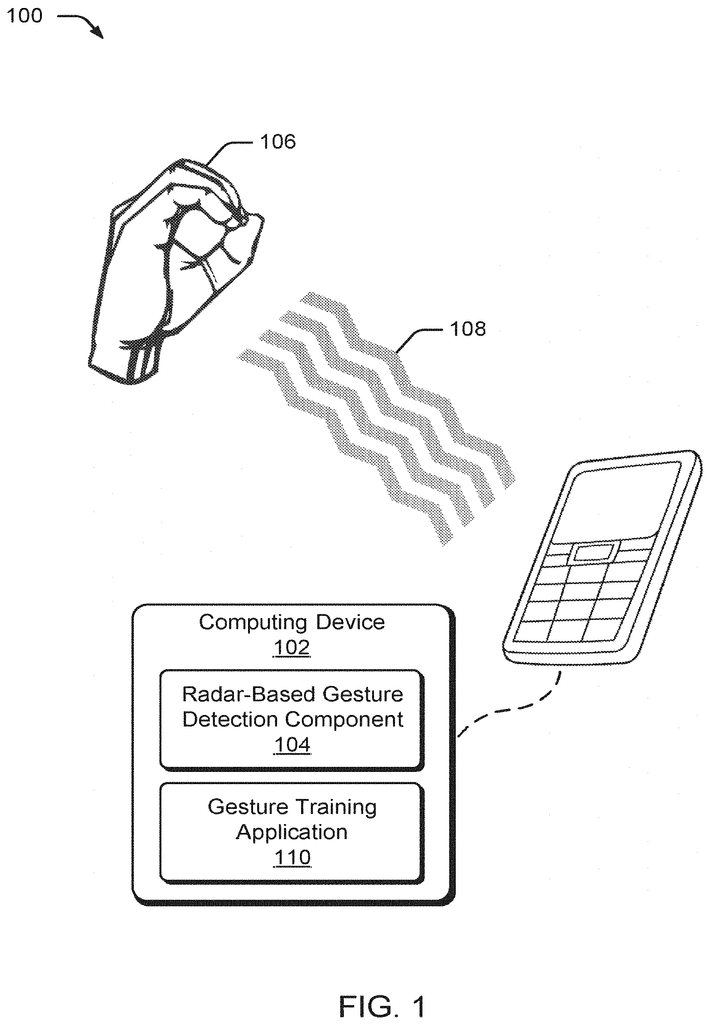

FIG. 1 shows an example environment 100 for radar-based gesture learning. In environment 100, computing device 102 includes radar-based gesture detection component 104, which can wirelessly sense, detect, and recognize gestures made by hand 106. Although environment 100 illustrates computing device 102 as a mobile phone, other devices can be used without departing from the scope of the claimed subject matter.

Radar-based gesture detection component 104 represents functionality that wirelessly captures characteristics of a target object, shown here as hand 106. In this example, radar-based gesture detection component 104 is part of computing device 102. In some cases, radar-based gesture detection component 104 can not only capture characteristics of hand 106 but also identify a particular gesture performed by the hand. The gesture or characteristic can be of any suitable type, such as the size or direction of the hand, or a micro-gesture performed by it. A micro-gesture is a gesture that can be distinguished from other gestures by differences in movement on a millimeter or sub-millimeter scale. Radar-based gesture detection component 104 can also be configured to detect gestures larger than micro-gestures (e.g., a macro-gesture, identified by coarser differences, such as on a centimeter or meter scale).

Hand 106 represents a target object that radar-based gesture detection component 104 is detecting. Hand 106 is in free space: it holds no device that communicates or couples with computing device 102 or radar-based gesture detection component 104. Although this example describes detecting a hand, radar-based gesture detection component 104 can be used to detect any suitable target object, such as a finger.

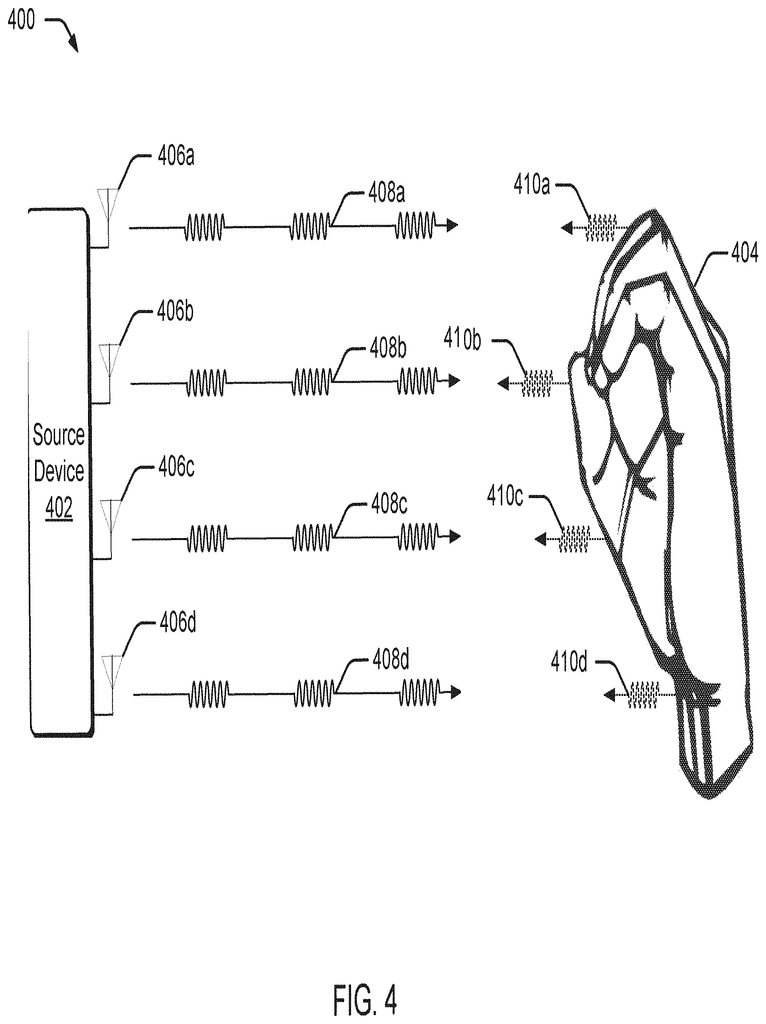

Signals 108 represent one or more RF signals transmitted and received by radar-based gesture detection component 104. In some embodiments, radar-based gesture detection component 104 transmits a radar signal from a single antenna directed toward hand 106. In other embodiments, multiple radar signals are transmitted, each on its own antenna. At least part of each transmitted signal is reflected back to radar-based gesture detection component 104 and processed as described further below. Signals 108 can have any combination of energy levels, carrier frequencies, burst periods, pulse widths, modulation types, waveforms, phase relationships, and so forth. Some of signals 108 can differ from one another to create a diversity scheme: for example, a time diversity scheme transmits multiple versions of a signal at different times, a frequency diversity scheme transmits signals on different frequency channels, a space diversity scheme transmits signals over different propagation paths, and so on.
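The three diversity schemes differ only in which transmission dimension varies between copies of the same signal. The sketch below assumes a hypothetical `Transmission` record and `diversity_plan` helper; the 60 GHz base channel and 0.5 GHz channel step are illustrative values, not parameters taken from the patent.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Transmission:
    time_slot: int      # when this copy is sent
    channel_ghz: float  # carrier frequency channel (illustrative values)
    antenna: int        # transmit antenna index, i.e. propagation path


def diversity_plan(scheme: str, copies: int,
                   base_channel_ghz: float = 60.0,
                   channel_step_ghz: float = 0.5) -> List[Transmission]:
    """Return one Transmission per copy of the same signal.

    time:      same channel and antenna, different time slots
    frequency: same time slot and antenna, different frequency channels
    space:     same time slot and channel, different antennas
    """
    if scheme == "time":
        return [Transmission(i, base_channel_ghz, 0) for i in range(copies)]
    if scheme == "frequency":
        return [Transmission(0, base_channel_ghz + i * channel_step_ghz, 0)
                for i in range(copies)]
    if scheme == "space":
        return [Transmission(0, base_channel_ghz, i) for i in range(copies)]
    raise ValueError(f"unknown diversity scheme: {scheme!r}")
```

For example, `diversity_plan("frequency", 3)` yields three copies in time slot 0 on antenna 0, at 60.0, 60.5, and 61.0 GHz.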

Computing device 102 also includes gesture training application 110. Among other things, gesture training application 110 represents functionality that configures radar-based gesture detection component 104 to detect and learn gestures that are new or unfamiliar to it. For example, a user can access gesture training application 110 to read a guide on how to customize gestures and train radar-based gesture detection component 104. Gesture training application 110 manages the gesture-learning process while hiding the parameters that radar-based gesture detection component 104 uses to configure itself and to initiate transmission of signals 108.

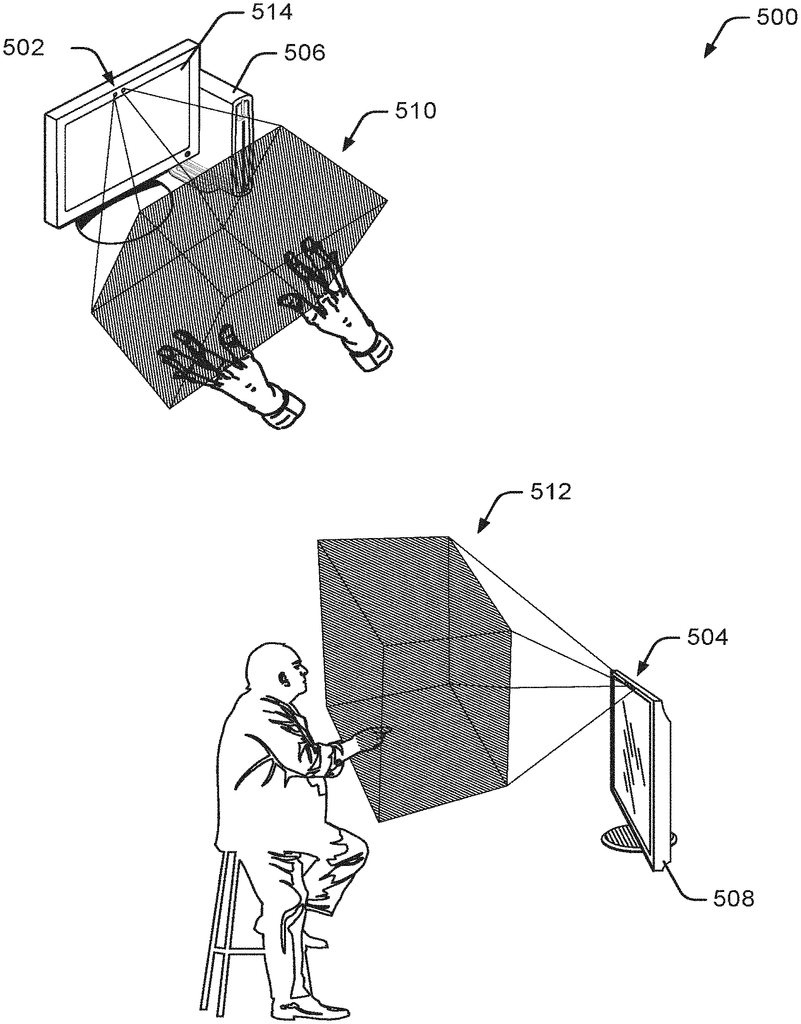

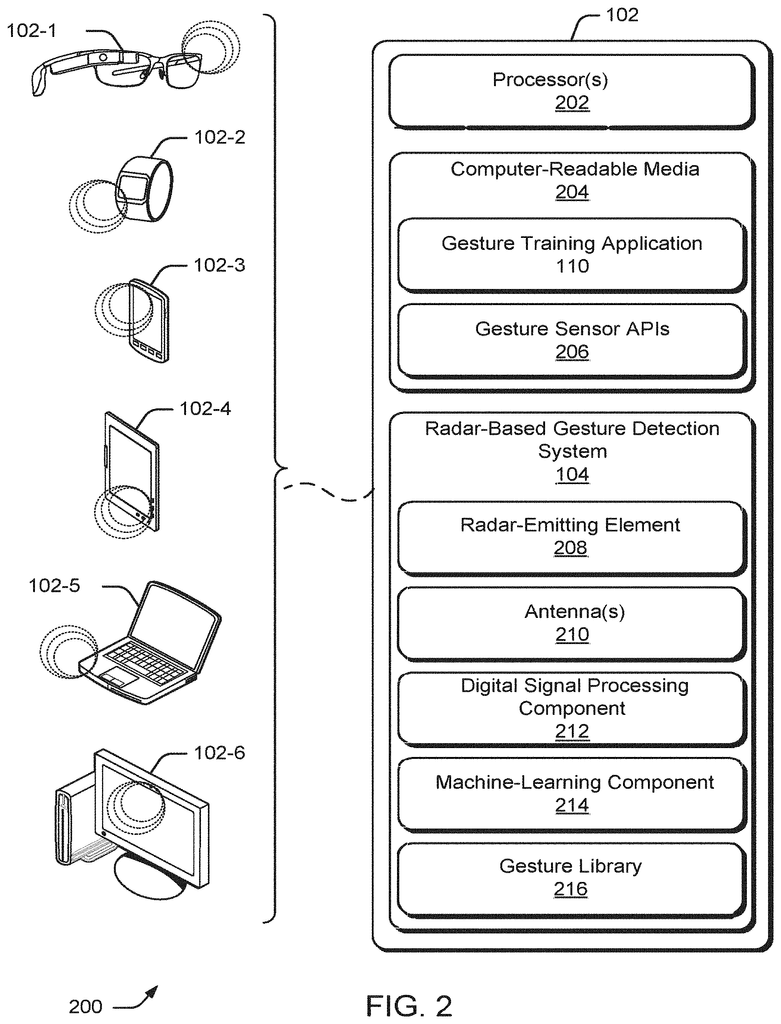

Having described an example environment in which wireless hand gesture detection can be implemented, now consider FIG. 2, which illustrates an example implementation of computing device 102 of FIG. 1 in greater detail. Computing device 102 can be any suitable type of computing device capable of implementing various embodiments. Examples include, but are not limited to, smart glasses 102-1, smart watch 102-2, mobile phone 102-3, tablet 102-4, laptop 102-5, and display monitor 102-6. These are only examples for illustration; any other suitable type of computing device can be used without departing from the scope of the claimed subject matter.

Computing device 102 includes processor(s) 202 and computer-readable media 204. Gesture training application 110 of FIG. 1 and/or an operating system (not shown), embodied as computer-readable instructions on computer-readable media 204, can be executed by processor(s) 202 to invoke or interface with some or all of the functionality described in this document, for example through gesture sensor Application Programming Interfaces (APIs) 206.

Gesture sensor APIs 206 provide programmatic access to various routines and functionality incorporated into radar-based gesture detection component 104. For example, radar-based gesture detection component 104 can have a programmatic interface (socket connection, shared memory, register read/write, hardware interrupts, etc.) that can be used together with gesture sensor APIs 206 to let applications external to radar-based gesture detection component 104 communicate with or configure it. In some embodiments, gesture sensor APIs 206 provide high-level access to radar-based gesture detection component 104 that abstracts implementation details and/or hardware access from a calling program, requests notifications of identified events, queries for results, and so forth. Gesture sensor APIs 206 can also provide low-level access, so that a calling program can control radar-based gesture detection component 104 directly or partially. In some cases, gesture sensor APIs 206 provide programmatic input configuration parameters that configure transmit signals, such as signals 108 of FIG. 1. These APIs allow programs, such as gesture training application 110, to incorporate the functionality provided by radar-based gesture detection component 104 into executable code. For instance, gesture training application 110 can call or invoke gesture sensor APIs 206 to register for, or request, an event notification when a micro-gesture is detected, to enable or disable wireless gesture recognition on computing device 102, and so on. In some cases, gesture sensor APIs 206 can include or access low-level hardware drivers that interface with radar-based gesture detection component 104. Gesture sensor APIs 206 can also be used to access algorithms on radar-based gesture detection component 104 in order to configure or modify those algorithms, extract information from them, and change the operating mode of radar-based gesture detection component 104.
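To illustrate the mix of high-level and low-level access described above, the following sketch models a gesture sensor API as a Python class. Every name here (`GestureSensorAPI`, `TransmitConfig`, `register_callback`, `configure_transmit`, `set_mode`, and the `"micro_gesture"` event) is hypothetical; the patent does not specify a concrete interface, and `_dispatch` merely simulates the sensor reporting an identified gesture.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class TransmitConfig:
    """Hypothetical transmit parameters exposed through the API."""
    frequency_channel: int = 0
    power_level_dbm: float = 0.0


class GestureSensorAPI:
    """Illustrative wrapper around a radar gesture sensor (all names assumed)."""

    def __init__(self) -> None:
        self.enabled = False
        self.mode = "detection"
        self.transmit = TransmitConfig()
        self._callbacks: Dict[str, List[Callable[[str], None]]] = {}

    # High-level control: enable/disable recognition and switch operating mode.
    def enable(self) -> None:
        self.enabled = True

    def disable(self) -> None:
        self.enabled = False

    def set_mode(self, mode: str) -> None:
        if mode not in ("detection", "learning"):
            raise ValueError(f"unsupported mode: {mode!r}")
        self.mode = mode

    # Lower-level control: set individual transmit parameters by name.
    def configure_transmit(self, **params) -> None:
        for key, value in params.items():
            if not hasattr(self.transmit, key):
                raise KeyError(key)
            setattr(self.transmit, key, value)

    # Event notifications: callers register interest in an event type.
    def register_callback(self, event: str,
                          callback: Callable[[str], None]) -> None:
        self._callbacks.setdefault(event, []).append(callback)

    def _dispatch(self, event: str, gesture: str) -> None:
        # Simulates the sensor reporting an identified gesture.
        if not self.enabled:
            return
        for callback in self._callbacks.get(event, []):
            callback(gesture)
```

A training application could, for instance, enable recognition, switch the sensor into learning mode with `set_mode("learning")`, adjust the channel with `configure_transmit(frequency_channel=2)`, and register a callback for micro-gesture events. Keeping transmit parameters behind `configure_transmit` mirrors the idea that an application such as gesture training application 110 can hide these details from the user.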

Radar-based gesture detection component 104 represents functionality that wirelessly detects hand gestures. It can be implemented on a chip embedded within computing device 102, such as a System-on-Chip (SoC). However, radar-based gesture detection component 104 can also be implemented in other ways, such as one or more Integrated Circuits (ICs), a processor configured to access instructions stored in memory or embedded in hardware, hardware with embedded software, a printed circuit board with various hardware components, or any combination thereof. Here, radar-based gesture detection component 104 includes radar-emitting element 208, antenna(s) 210, digital signal processing component 212, machine learning component 214, and gesture library 216, which can be used together to wirelessly detect gestures using radar techniques.

Generally, radar-emitting element 208 is configured to provide a radar field. In some cases, the radar field is configured to reflect off at least part of a target object. The radar field can also be configured to penetrate fabric or other obstructions while reflecting from human tissue, such as a hand. These fabrics or obstructions can include wood, glass, plastic, cotton, wool, nylon, and similar fibers.

A radar field can be small, such as 0 or 1 micrometers to 1.5 meters, or of intermediate size, such as 1 to 30 meters. These sizes are for discussion only, and any other suitable range can be used. When the radar field is of intermediate size, radar-based gesture detection component 104 can be configured to receive and process reflections of the field to detect large-body gestures, based on reflections from human tissue caused by body, arm, or leg movements. The radar field can also be configured so that radar-based gesture detection component 104 detects smaller, more precise gestures, such as micro-gestures. Example uses of an intermediate-sized field include a user gesturing to turn off a TV from a couch, to change the volume or song on a stereo from across the room, to turn off an oven (a near field is also useful in this case), or to turn lights on or off in a particular room. Radar-emitting element 208 can be configured to emit continuously modulated radiation, ultra-wideband radiation, or sub-millimeter-frequency radiation.

Antennas 210 transmit and receive RF signals. In some cases, radar-emitting element 208 is coupled with antennas 210 to transmit a radar field. This is accomplished by converting electrical signals into electromagnetic waves for transmission, and vice versa for reception. Radar-based gesture detection component 104 can include any number of antennas in any configuration, such as parabolic, monopole, dipole, or helical antennas. In some embodiments, antennas 210 are built on-chip (e.g., as part of an SoC), while in others, antennas 210 are metal components, hardware, or other separate components that attach to or are part of radar-based gesture detection component 104. An antenna can be single-purpose (e.g., one antenna dedicated to transmitting signals and another dedicated to receiving signals) or multi-purpose (e.g., an antenna used for both transmitting and receiving signals). Some embodiments use varying combinations of antennas; for example, one embodiment uses two single-purpose, transmission-oriented antennas in combination with four single-purpose, reception-oriented antennas. The placement, size, and/or shape of the antennas can be selected to enhance a particular transmission pattern or diversity scheme, such as one designed to capture information about a hand micro-gesture. The antennas can be physically separated by a distance that allows radar-based gesture detection component 104 to transmit and receive signals directed at a target object over different channels, radio frequencies, and distances. In some cases, antennas 210 are spatially distributed to support triangulation, while in others the antennas are collocated to support beamforming. While not shown, each antenna can correspond to a transceiver that physically manages and routes the outgoing signal for transmission and the incoming signal for capture and analysis.
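Collocated antennas support beamforming because their outputs can be phase-aligned toward a chosen direction. The sketch below shows generic delay-and-sum (phase-shift) beamforming for a uniform linear array, assuming narrowband signals and plane-wave arrival. It is a textbook illustration, not the beamforming method of radar-based gesture detection component 104, and the function names `steering_weights` and `array_response` are hypothetical.

```python
import cmath
import math


def steering_weights(num_antennas, spacing_wavelengths, steer_deg):
    """Phase weights that align a plane wave arriving from steer_deg.

    Antenna spacing is given in wavelengths; angles are measured from broadside.
    """
    theta = math.radians(steer_deg)
    return [cmath.exp(-2j * math.pi * spacing_wavelengths * n * math.sin(theta))
            for n in range(num_antennas)]


def array_response(weights, spacing_wavelengths, arrival_deg):
    """Normalized magnitude of the weighted sum for a wave from arrival_deg."""
    theta = math.radians(arrival_deg)
    total = 0j
    for n, w in enumerate(weights):
        # Phase of the incoming wave at antenna n relative to antenna 0.
        phase = cmath.exp(2j * math.pi * spacing_wavelengths * n * math.sin(theta))
        total += w * phase
    return abs(total) / len(weights)
```

With four antennas spaced half a wavelength apart and steered to 0°, a wave arriving from 0° gives a normalized response of 1.0, while a wave from 30° cancels almost completely: each successive antenna then adds a quarter-cycle (π/2) phase shift, and the four contributions sum to zero.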

Digital signal processing component 212 represents functionality that digitally captures and processes a signal. For instance, digital signal processing component 212 samples analog RF signals received by antennas 210 to generate digital samples that represent the RF signals, and then processes these samples to extract information about the target object. Digital signal processing component 212 can also control the configuration of signals generated and transmitted by radar-emitting element 208 and/or antennas 210, such as configuring a number of signals to form a particular diversity scheme, like a beamforming diversity scheme. In some cases, digital signal processing component 212 receives configuration parameters, for example through gesture sensor APIs 206, that control the transmission parameters of an RF signal (e.g., frequency channel, power level, etc.), and modifies the RF signal based on those parameters. The signal processing functions or algorithms of digital signal processing component 212 can be included in a library that is also accessible and/or configurable via gesture sensor APIs 206. Digital signal processing component 212 can be programmed or configured via gesture sensor APIs 206 (and the corresponding interface of radar-based gesture detection component 104), which allows its algorithms to be dynamically selected and/or reconfigured. Digital signal processing component 212 can be implemented in hardware, software, firmware, or any combination thereof.
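One common way to extract information about a target object from digital samples is spectral analysis. The sketch below, offered only as an illustration under stated assumptions, synthesizes complex baseband samples of a single Doppler tone, finds the strongest bin of a naive discrete Fourier transform, and converts that Doppler shift to radial velocity. The function names and the example sample rate and carrier frequency are hypothetical; the patent does not say which processing digital signal processing component 212 applies.

```python
import cmath
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def sample_tone(freq_hz, sample_rate_hz, num_samples):
    """Synthetic complex baseband samples of one Doppler tone (stand-in for ADC output)."""
    return [cmath.exp(2j * math.pi * freq_hz * n / sample_rate_hz)
            for n in range(num_samples)]


def dominant_frequency(samples, sample_rate_hz):
    """Return the frequency of the strongest DFT bin (naive O(N^2) DFT)."""
    n_samples = len(samples)
    best_bin, best_mag = 0, -1.0
    for k in range(n_samples):
        acc = sum(s * cmath.exp(-2j * math.pi * k * n / n_samples)
                  for n, s in enumerate(samples))
        if abs(acc) > best_mag:
            best_bin, best_mag = k, abs(acc)
    # Bins above Nyquist represent negative frequencies (motion away from the radar).
    if best_bin > n_samples // 2:
        best_bin -= n_samples
    return best_bin * sample_rate_hz / n_samples


def radial_velocity(doppler_hz, carrier_hz):
    """Monostatic radar Doppler relation: v = f_d * c / (2 * f_c)."""
    return doppler_hz * SPEED_OF_LIGHT_M_S / (2.0 * carrier_hz)
```

For 64 samples at 1 kHz, each DFT bin spans 15.625 Hz, so a 125 Hz tone lands exactly in bin 8 and is recovered as 125.0 Hz; at an assumed 60 GHz carrier this corresponds to a radial velocity of about 0.31 m/s. A practical pipeline would typically use an FFT with windowing rather than this O(N²) loop.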
