Invented by Stuart Platt, Mohammed Aafaq Khan, Sony Interactive Entertainment America LLC

One of the key drivers of the market for 3D digital content editing for virtual reality (VR) is the increasing adoption of VR in gaming and entertainment. The gaming industry has always been at the forefront of technological advancements, and VR is no exception. With 3D digital content editing tools, game developers can create realistic and captivating virtual worlds that enhance the gaming experience. From designing intricate landscapes to crafting lifelike characters, these tools enable developers to push the boundaries of what is possible in VR gaming.

Beyond gaming, the market for 3D digital content editing is also expanding in other sectors such as architecture, engineering, and healthcare. Architects can use VR to visualize and present their designs in a more immersive manner, allowing clients to experience spaces before they are built. Engineers can simulate and test complex systems in a virtual environment, leading to more efficient and cost-effective solutions. In healthcare, VR is being used for training medical professionals, treating phobias, and even assisting in surgical procedures. All these applications require sophisticated 3D digital content editing tools to create realistic and accurate virtual representations.

The rise of social VR platforms and virtual meetings has also fueled demand for 3D digital content editing. As people seek ways to connect and collaborate remotely, VR offers a unique opportunity to create shared virtual spaces. These spaces can be customized and personalized through 3D digital content editing, allowing users to express their creativity and individuality. Whether hosting virtual events or conducting virtual meetings, the ability to edit and manipulate 3D content is crucial for creating engaging, interactive experiences.

As the market for 3D digital content editing continues to grow, so does the need for innovative and user-friendly tools. Companies in this space are constantly developing new software and hardware solutions to meet the evolving demands of creators. From intuitive modeling and sculpting tools to advanced rendering and animation capabilities, these tools empower creators to bring their visions to life in virtual reality.

However, challenges remain. The complexity of 3D digital content editing requires a certain level of expertise, which can be a barrier to entry for some creators. In addition, VR hardware can be costly, which limits access to these tools for a wider audience. As technology advances and becomes more affordable, these challenges are expected to diminish, opening up new opportunities for growth in the market.

In conclusion, the market for 3D digital content editing for virtual reality is growing significantly and is poised to transform various industries. From gaming and entertainment to architecture and healthcare, demand for immersive and interactive experiences is driving the need for sophisticated tools that create realistic virtual environments. As technology continues to advance and become more accessible, this market has considerable room to grow, and even more innovative applications of 3D digital content editing can be expected.

The Sony Interactive Entertainment America LLC invention works as follows

A method of editing is disclosed. The method includes receiving a sequence of interactive virtual reality (VR) scenes of digital content, where each VR scene depicts a 3D gaming world. The method includes placing the sequence of interactive VR scenes in a 3D editing space. The method includes sending a view of the 3D editing space, including at least one interactive VR scene of the sequence, to a head-mounted display (HMD) for viewing by a user. The method includes receiving at least one editing command via a user device. The method includes modifying the sequence of interactive VR scenes in response to the editing command.
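
The claimed steps (receive a sequence of interactive VR scenes, place it in a 3D editing space, send a view to the HMD, receive editing commands, modify the sequence) can be sketched as a small in-memory model. This is a minimal sketch under stated assumptions, not Sony's implementation: the class names `VRScene` and `EditSpace3D`, the `move`/`delete`/`trim` commands, and the `view` window standing in for what the HMD displays are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class VRScene:
    """One interactive VR scene in the sequence (hypothetical model)."""
    scene_id: str
    duration_s: float = 5.0


@dataclass
class EditSpace3D:
    """Hypothetical 3D editing space holding an ordered sequence of VR scenes."""
    scenes: List[VRScene] = field(default_factory=list)

    def place(self, sequence: List[VRScene]) -> None:
        # Place a copy of the received sequence into the editing space.
        self.scenes = list(sequence)

    def view(self, first: int = 0, count: int = 3) -> List[str]:
        # The window of scenes that would be rendered and sent to the HMD.
        return [s.scene_id for s in self.scenes[first:first + count]]

    def apply(self, command: str, **args) -> None:
        # Modify the sequence in response to one editing command.
        if command == "delete":
            del self.scenes[args["index"]]
        elif command == "move":
            scene = self.scenes.pop(args["src"])
            self.scenes.insert(args["dst"], scene)
        elif command == "trim":
            self.scenes[args["index"]].duration_s = args["duration_s"]
        else:
            raise ValueError(f"unknown editing command: {command}")


# Usage: reorder and prune a four-scene sequence, then look at the HMD view.
space = EditSpace3D()
space.place([VRScene("intro"), VRScene("forest"), VRScene("castle"), VRScene("boss")])
space.apply("move", src=3, dst=1)   # boss now follows intro
space.apply("delete", index=2)      # drop forest
print(space.view())  # ['intro', 'boss', 'castle']
```

In the described method the editing command arrives through an input that works while the HMD is worn, so after each `apply` call the updated view would be re-rendered to the headset immediately; that refresh loop is omitted here.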

Background for Three-dimensional digital content editing for virtual reality

The use of high-powered graphics allows game developers to create interactive, immersive 3D gaming applications. These gaming applications contain 3D environments that are built by adding objects to a 3D space, and the interactions between those objects can be governed by the laws of physics.

Creating a 3D gaming environment requires multiple editing steps. For example, a game developer uses a 3D content creator application to build the 3D gaming environment. To edit, the developer typically views the interface of the 3D content creator on a 2D display. The 3D content, including the edits, is then converted to a stereoscopic format and compiled for viewing in the HMD. If the results are not satisfactory, the developer returns to the 2D display to modify the edits, which are again converted to 3D and compiled for viewing in the HMD. The process repeats until the result is satisfactory.

The disconnect between the generated 3D content and the 2D editing environment is a major problem. Edits to the 3D gaming environment are made through the 2D interface of the 3D content creator application, without knowing exactly how the 3D content will look in the HMD. The same problems occur when creating 3D video content, which may not be interactive.

It would be advantageous to integrate the 3D gaming environment or 3D video content with the editing environment.

It is in this context that embodiments of the disclosure arise.

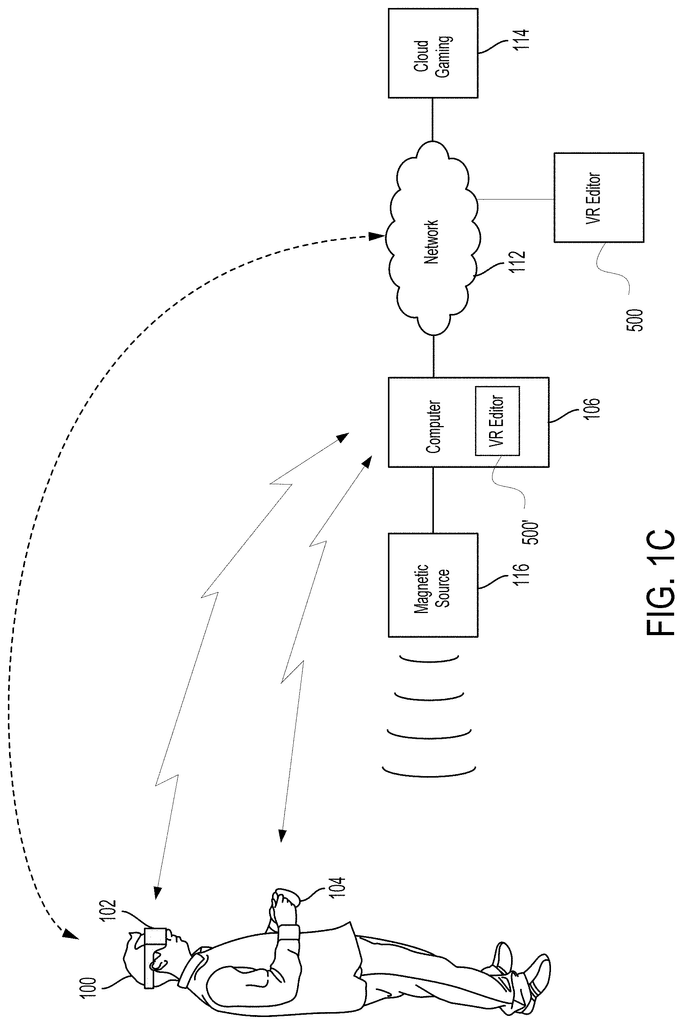

Embodiments of the present disclosure relate to providing a 3D editing space for editing 3D digital content, such as 3D environments in a gaming application, 3D videos, etc. The 3D editing space can be used to edit digital content, including arranging and rearranging it. The 3D editing space also provides real-time previews of the edited results, so an editor can edit while wearing an HMD configured for 3D or stereoscopic viewing and then view the results without removing the HMD.

In one embodiment, a method of editing digital content is described. The method includes receiving a sequence of interactive VR scenes of digital content, where each interactive VR scene depicts a 3D gaming world. The method includes placing the sequence of interactive VR scenes in a 3D editing space. The method includes sending a view of the 3D editing space, including at least one interactive VR scene of the sequence, to an HMD for viewing by a user. The method includes receiving at least one editing command from a device of the user. The method includes modifying the sequence of interactive VR scenes in response to the at least one editing command. The at least one editing command is given through an input that is enabled while the user wears the HMD and views the 3D editing space through it, so that modifications made to the sequence of interactive VR scenes are shown in real time.

In a further embodiment, a computer system is described. The computer system includes a processor and a memory coupled to the processor. The memory stores instructions that, when executed by the computer system, cause it to execute a method for editing. The method includes receiving a sequence of interactive VR scenes, where each interactive VR scene depicts a 3D gaming world. The method includes placing the sequence of interactive VR scenes in a 3D editing space. The method includes sending a view of the 3D editing space, including at least one interactive VR scene of the sequence, to an HMD for viewing by a user. The method includes receiving at least one editing command from a device of the user. The method includes modifying the sequence of interactive VR scenes in response to the at least one editing command. The at least one editing command is given through an input that is enabled while the user wears the HMD and views the 3D editing space through it, so that modifications made to the sequence of interactive VR scenes are shown in real time.

In another embodiment, a non-transitory computer-readable medium storing a program for implementing a graphics pipeline is disclosed. The computer-readable medium includes program instructions for editing. It includes program instructions for receiving a sequence of interactive VR scenes of digital content, where each interactive VR scene depicts a 3D gaming world. It includes program instructions for placing the sequence of interactive VR scenes in a 3D editing space. It includes program instructions for sending a view of the 3D editing space, including at least one interactive VR scene of the sequence, to an HMD for viewing by a user. It includes program instructions for receiving at least one editing command from a device of the user. It includes program instructions for modifying the sequence of interactive VR scenes in response to the at least one editing command. The at least one editing command is given through an input that is enabled while the user wears the HMD and views the 3D editing space through it, so that modifications made to the sequence of interactive VR scenes are shown in real time.

Other aspects of the disclosure will become apparent from the following detailed description, taken in conjunction with the accompanying drawings, which illustrate the principles of the disclosure by way of example.

Although the following detailed description contains many specific details for purposes of illustration, anyone of ordinary skill in the art will appreciate that many variations and modifications of these details are within the scope of the disclosure. Accordingly, the aspects of the disclosure described below are set forth without any loss of generality to, and without imposing limitations upon, the claims that follow.

Generally, the various embodiments of this disclosure relate to providing a 3D editing space for editing 3D digital content, such as 3D environments in gaming applications, 3D video, etc. The 3D editing space is designed for editing digital content, including arranging and rearranging it. The 3D editing space responds to input commands sent through a keyboard, mouse, motion controllers, or other user interfaces. It can also respond to commands generated by tracking body parts, including hand gestures and body gestures. The 3D editing space can include a sequence of interactive VR scenes of digital content. For viewing and editing, the sequence of interactive VR scenes can be arranged linearly within the 3D editing space, or it can be arranged helically. The 3D editing space also provides real-time previews of the edited results, so an editor can edit while wearing an HMD configured for 3D or stereoscopic viewing and view the results without removing the HMD. As a result, the editor does not have to repeatedly put on and take off the HMD when moving between the editing environment (which can be a virtual 2D editing environment) while editing the content and the 3D viewing space while reviewing the edited content.
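
The source says the scene sequence can be arranged linearly or helically in the 3D editing space but gives no geometry. Below is a minimal sketch of how panel positions might be computed, assuming a y-up coordinate frame with the viewer at the origin looking down the -z axis. The function names and the spacing, depth, radius, scenes-per-turn, and rise values are illustrative assumptions, not details from the patent.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def linear_layout(n: int, spacing: float = 2.0, depth: float = 3.0) -> List[Vec3]:
    """Place n scene panels left to right in one row, centered in front of the viewer."""
    offset = (n - 1) * spacing / 2.0  # shift so the row is centered on x = 0
    return [(i * spacing - offset, 0.0, -depth) for i in range(n)]


def helical_layout(n: int, radius: float = 3.0, per_turn: int = 8,
                   rise: float = 1.0) -> List[Vec3]:
    """Place n scene panels along a helix wound around the viewer.

    Each panel advances 2*pi/per_turn radians around the vertical axis and
    climbs rise/per_turn, so one full turn holds `per_turn` panels and
    raises the next turn by `rise`.
    """
    positions = []
    for i in range(n):
        theta = 2.0 * math.pi * i / per_turn
        x = radius * math.sin(theta)    # positive x is to the viewer's right
        z = -radius * math.cos(theta)   # theta = 0 is straight ahead (-z)
        y = rise * i / per_turn
        positions.append((x, y, z))
    return positions


print(linear_layout(3))  # [(-2.0, 0.0, -3.0), (0.0, 0.0, -3.0), (2.0, 0.0, -3.0)]
```

One plausible reason to offer a helix is that a long sequence can wrap around the editor, keeping many scenes within view by turning the head, while the rise keeps successive turns from overlapping.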

The various embodiments are described below by way of example with reference to the drawings.

Throughout the specification, the term “video game” is used to refer to any interactive application that is controlled by input commands. Examples of interactive applications include word processing, video editing, video game editing, and gaming applications. The terms “gaming application” and “video game” are used interchangeably. The term “digital content” is intended to refer to 2D or 3D digital content, including, for example, video games, gaming applications, and videos.

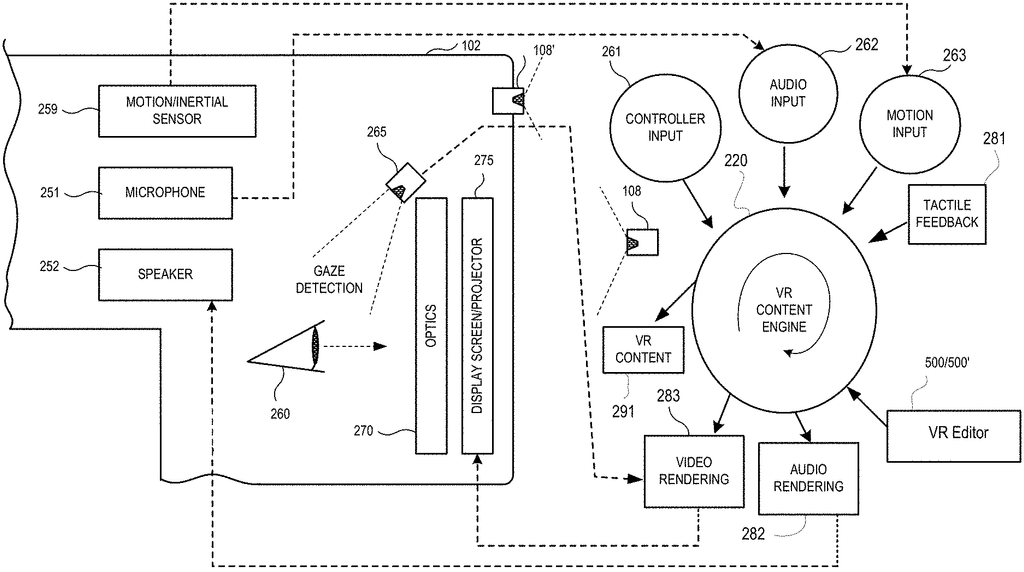

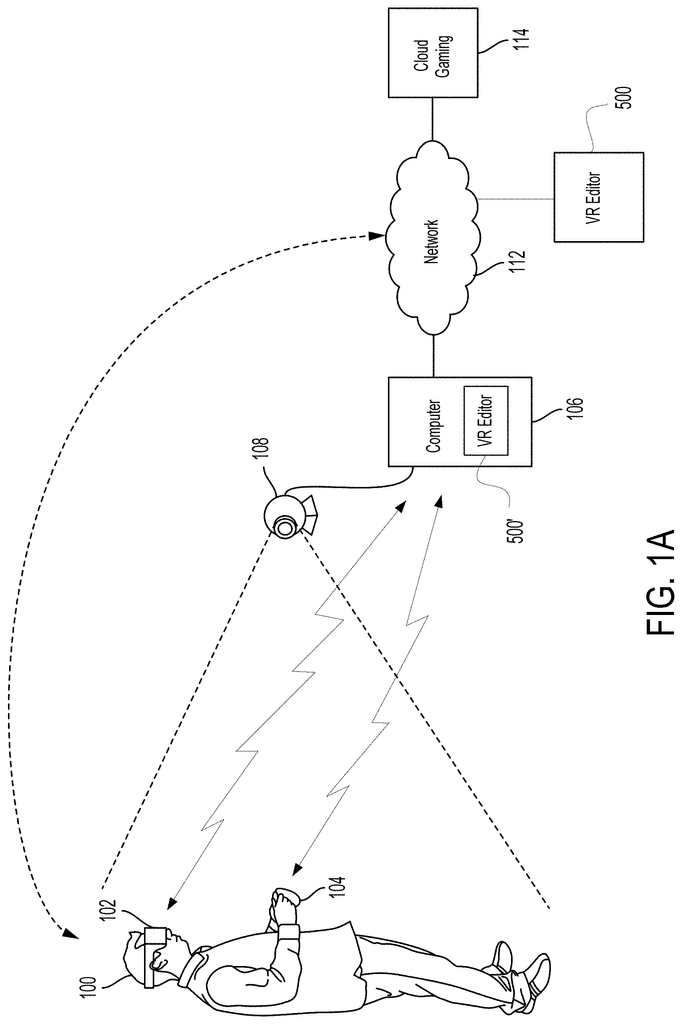

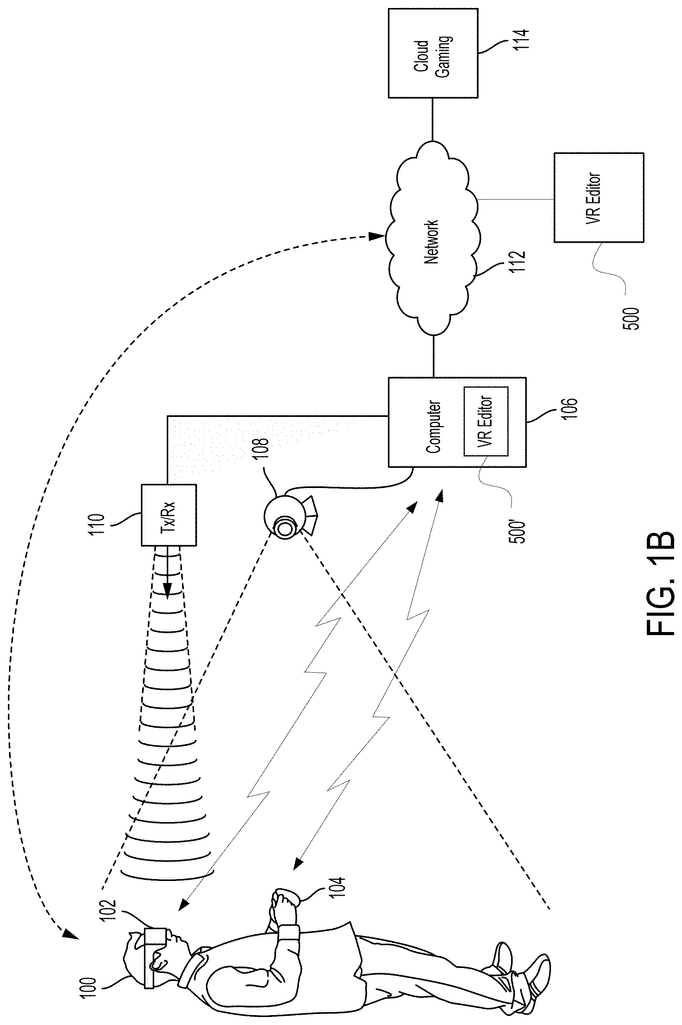

FIG. 1A shows a system for interactive gameplay of a video game, according to an embodiment of the present disclosure. A user 100 wears an HMD 102. The HMD 102 is worn in a manner similar to glasses, goggles, or a helmet, and can display video from an interactive game or other content from an interactive application to the user 100. Because its display mechanisms are placed close to the user's eyes, the HMD 102 offers a highly immersive experience. The HMD 102 can provide a large display area for each of the user's eyes, which may cover the user's entire field of view.

In one embodiment, the HMD 102 may be connected to a computer 106, such as a gaming console. The connection to the computer 106 can be wired or wireless. In certain implementations, the HMD 102 can also communicate with the computer 106 through alternative channels or mechanisms, such as the network 110 to which the HMD 102 is connected. The computer 106 may be any general-purpose or special-purpose computer known in the art, including but not limited to a gaming console, personal computer, laptop, tablet, mobile device, cellular phone, thin client, set-top box, media streaming device, etc. In one embodiment, the computer 106 is configured to execute a video game and output the video and audio for rendering by the HMD 102. The computer 106 may also be configured to run an interactive application that outputs VR content to the HMD. In one embodiment, the computer 106 is configured, through a local VR editor 500, to edit 3D digital content by providing a 3D editing space for 3D content such as 3D gaming environments, 3D videos, etc. The 3D editing space provides real-time previews of the edited results, so the editor can edit while wearing an HMD configured for 3D or stereoscopic viewing and view the results without removing the headset. In another embodiment, the computer 106 can be configured to work with a server back end that provides the 3D editing space.

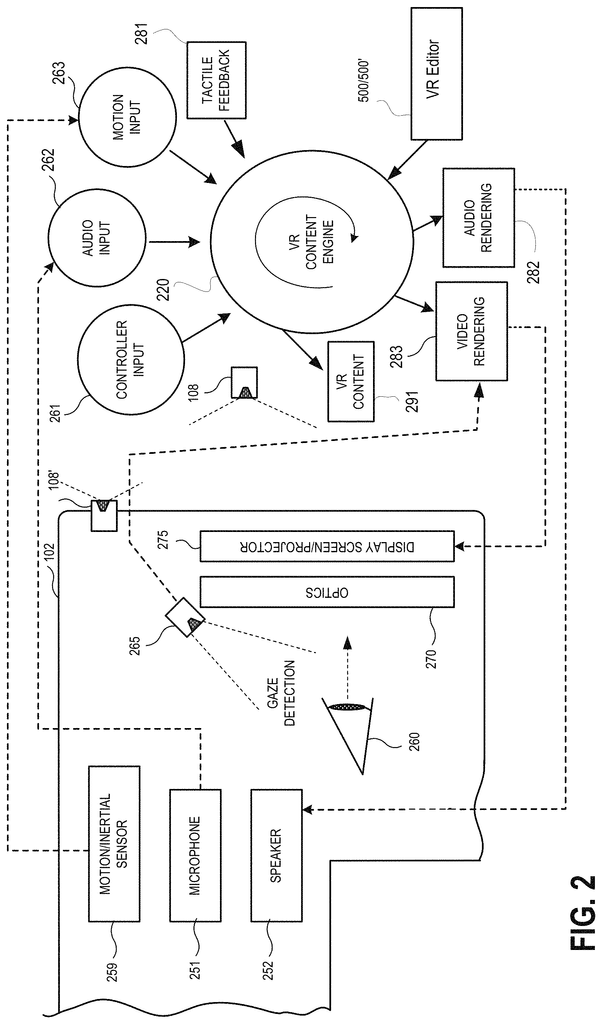

The user 100 can use a controller 104 to provide input to a video game or to edit 3D digital content. The controller 104 may connect to the computer 106 through a wired or wireless connection. A camera 108 may be configured to capture one or more images of the interactive environment in which the user 100 is located. These images can be analyzed to determine the position and movements of the user 100, the HMD 102, and the controller 104. In one embodiment, the controller 104 includes a light or other markers that can be tracked to determine its location and orientation. The HMD 102 can also include one or more lights that are tracked to determine its location and orientation. The tracking functionality implemented through the camera 108 allows input commands to be generated by movements of the controller 104 or of the user's body parts (e.g., a hand). The camera 108 may include one or more microphones to capture sound from the interactive environment. Sound captured by the microphones can be processed to identify the location of a sound source, and sound from an identified location can be selectively used or processed to the exclusion of other sounds. The camera 108 may also include multiple image capture devices (e.g., a stereoscopic camera pair), an IR camera, a depth camera, or combinations thereof.
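
The source does not say how the microphones locate a sound source. One common technique, swapped in here purely for illustration, is time difference of arrival (TDOA) between two microphones: find the lag that maximizes their cross-correlation, then convert it to an angle with sin θ = c·τ/d under a far-field assumption. The function names, the 10 cm microphone spacing, and the 48 kHz sample rate in the example are assumptions.

```python
import math
from typing import Sequence

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 degrees C


def estimate_delay(left: Sequence[float], right: Sequence[float], max_lag: int) -> int:
    """Lag (in samples) by which `right` trails `left`, via brute-force cross-correlation."""
    best_lag, best_score = 0, float("-inf")
    n = len(left)
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i in range(n):
            j = i + lag
            if 0 <= j < len(right):
                score += left[i] * right[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def direction_of_arrival(delay_samples: int, sample_rate: float, mic_spacing: float) -> float:
    """Source angle in degrees from broadside; positive means nearer the left mic."""
    tau = delay_samples / sample_rate
    s = SPEED_OF_SOUND * tau / mic_spacing
    s = max(-1.0, min(1.0, s))  # clamp in case noise pushes |s| past 1
    return math.degrees(math.asin(s))


# Usage: an impulse reaches the left mic 3 samples before the right mic.
left = [0.0] * 32
right = [0.0] * 32
left[10] = 1.0
right[13] = 1.0
lag = estimate_delay(left, right, max_lag=14)  # about 0.1 m / 343 m/s * 48 kHz
angle = direction_of_arrival(lag, sample_rate=48_000, mic_spacing=0.1)
print(lag, round(angle, 1))
```

Once a direction is known, sound arriving from it could be emphasized (for example with delay-and-sum beamforming) so that other sources are suppressed, matching the selective processing described above.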

In another embodiment, the computer 106 functions as a thin client that communicates over a network with a cloud gaming provider 112. The cloud gaming provider 112 maintains and executes the video game being played by the user 100, and/or a 3D editing space for editing 3D digital content. The computer 106 transmits inputs from the HMD 102, the controller 104, and the camera 108 to the cloud gaming provider, which processes them to change the game state of the executing video game. The output of the video game, such as video data, audio data, and haptic feedback data, is transmitted back to the computer 106. The computer 106 may further process the data before transmission, or may transmit it directly to the relevant devices. For example, video and audio streams are provided to the HMD 102, while vibration feedback commands based on the haptic data are provided to the controller 104. In other embodiments, the computer 106 interfaces with a VR editor 500 at a server back end that is configured to provide a 3D editing space for editing 3D content, such as 3D gaming environments, 3D videos, etc. The 3D editing space provides real-time previews of the edited results, so the editor can edit while wearing an HMD configured for 3D or stereoscopic viewing and view the results without removing the headset.
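
The thin-client data flow described above (forward HMD, controller, and camera inputs; let the provider update the game state; route video and audio to the HMD and haptic data to the controller as vibration commands) can be sketched in-process as follows. This is a hypothetical model only: `FakeCloudProvider`, `ThinClient`, the toy game rule, and encoding haptics as a 0 to 1 intensity are assumptions, and a real system would carry these messages over the network 110 with streaming codecs.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class GameOutput:
    video: bytes
    audio: bytes
    haptic: float  # vibration strength in [0.0, 1.0] (assumed encoding)


class FakeCloudProvider:
    """Stand-in for cloud gaming provider 112: owns the game state."""

    def __init__(self) -> None:
        self.state: Dict[str, int] = {"x": 0, "frame": 0}

    def process(self, inputs: Dict[str, Any]) -> GameOutput:
        # Toy rule: the stick moves the player; passing |x| > 5 hits a wall.
        self.state["frame"] += 1
        self.state["x"] += inputs.get("controller", {}).get("stick_x", 0)
        hit_wall = abs(self.state["x"]) > 5
        return GameOutput(
            video=f"frame {self.state['frame']} x={self.state['x']}".encode(),
            audio=b"thud" if hit_wall else b"",
            haptic=1.0 if hit_wall else 0.0,
        )


@dataclass
class ThinClient:
    """Stand-in for computer 106 as a thin client: it forwards and routes only."""
    provider: FakeCloudProvider
    hmd_queue: List[bytes] = field(default_factory=list)
    controller_rumble: List[float] = field(default_factory=list)

    def tick(self, hmd_pose: Any, controller: Dict[str, Any], camera: Any) -> GameOutput:
        inputs = {"hmd": hmd_pose, "controller": controller, "camera": camera}
        out = self.provider.process(inputs)          # game state changes remotely
        self.hmd_queue.append(out.video)             # video stream goes to the HMD
        if out.audio:
            self.hmd_queue.append(out.audio)         # audio stream also goes to the HMD
        if out.haptic > 0:
            self.controller_rumble.append(out.haptic)  # haptic data becomes a vibration command
        return out


# Usage: push the stick right for three frames; the third frame hits the wall.
client = ThinClient(FakeCloudProvider())
for _ in range(3):
    client.tick(hmd_pose=(0.0, 1.6, 0.0), controller={"stick_x": 2}, camera=None)
print(client.controller_rumble)  # [1.0]
```

Keeping all game logic in the provider mirrors the thin-client arrangement: the client never interprets inputs, it only collects them and dispatches each output type to the device that consumes it.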

In one embodiment, the HMD 102 and the controller 104 may be networked devices that connect to the network 110 to communicate with the cloud gaming provider 112. In this case, the computer 106 may be a local network device, such as a router, that facilitates network traffic but does not perform video game processing. The HMD 102 and the controller 104 may connect to the network through wired or wireless connections.

In a further embodiment, the computer 106 may execute a portion of the video game, while the remaining portion is executed by the cloud gaming provider 112. In some embodiments, portions of the video game may also be executed on the HMD 102. For example, the cloud gaming provider 112 may service a request from the computer 106 to download the video game. The cloud gaming provider 112 can execute a portion of the video game and send game content to the computer 106 for rendering on the HMD 102. The computer 106 communicates with the cloud gaming provider 112 over the network 110. While the video game is downloading on the computer 106, inputs from the HMD 102, the controller 104, and the camera 108 are sent to the cloud gaming provider 112, which uses them to change the game state of the video game. The output of the video game is sent to the computer 106, which forwards it to the respective devices.
