Invented by Sivabalan Manivasagam, Shenlong Wang, Wei-Chiu Ma, Kelvin Ka Wing Wong, Wenyuan Zeng, Raquel Urtasun, Uatc LLC

Sensor data plays a vital role in numerous industries, including autonomous vehicles, robotics, healthcare, cybersecurity, and smart cities. However, obtaining real-world sensor data can be challenging due to factors such as cost, privacy concerns, limited availability, or the need for specific scenarios. This is where synthetic sensor data generation comes into play, offering a cost-effective and scalable solution.

Machine learning algorithms rely on large volumes of diverse and representative data to learn patterns and make accurate predictions. Synthetic sensor data generation leverages ML techniques to create artificial data that closely resembles real-world sensor data. By mimicking the characteristics, distributions, and patterns of real data, synthetic data allows ML models to train and validate in a controlled environment, reducing the need for extensive real data collection.

The market for systems and methods for generating synthetic sensor data via machine learning is driven by several factors. Firstly, the increasing adoption of AI and ML across industries necessitates the availability of high-quality training data. Synthetic sensor data provides a reliable and scalable source of diverse data, enabling organizations to train their models more efficiently.

Secondly, the growing complexity of AI applications demands extensive testing and validation. Synthetic sensor data allows organizations to simulate various scenarios, including rare or dangerous situations, without the need for real-world experimentation. This accelerates the development and deployment of AI systems, ensuring their robustness and reliability.

Moreover, privacy concerns and data regulations have become critical considerations for businesses. Synthetic sensor data generation offers a privacy-preserving alternative that reduces the need to handle sensitive or personally identifiable information. This helps organizations comply with data protection regulations while still benefiting from AI and ML technologies.

The market for systems and methods for generating synthetic sensor data via machine learning is witnessing a surge in innovation and competition. Numerous companies and research institutions are developing advanced techniques and tools to generate synthetic data that closely resembles real-world sensor data. These solutions incorporate ML algorithms, generative models, and domain-specific knowledge to produce high-fidelity synthetic sensor data.

Furthermore, the integration of synthetic sensor data generation with cloud computing and edge computing technologies is expanding the market’s potential. Cloud-based platforms offer scalable and on-demand synthetic data generation capabilities, while edge computing enables real-time data synthesis for applications that require low latency and high responsiveness.

However, challenges remain in the market for synthetic sensor data generation. Ensuring the quality and diversity of synthetic data is crucial to avoid biases and limitations in ML models. Additionally, the ability to generate data that accurately represents rare or outlier scenarios is essential for training robust AI systems.

In conclusion, the market for systems and methods for generating synthetic sensor data via machine learning is experiencing rapid growth due to the increasing demand for AI and ML applications. Synthetic sensor data offers a cost-effective and scalable solution for training and testing ML models, enabling organizations to accelerate their AI development and improve algorithm accuracy. With ongoing advancements in ML techniques and the integration of cloud and edge computing, the market for synthetic sensor data generation is poised for further expansion and innovation.

The Uatc LLC invention works as follows

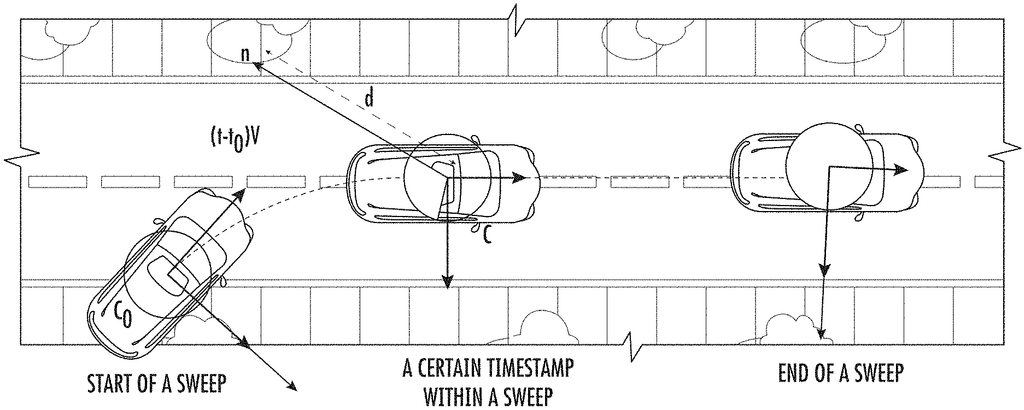

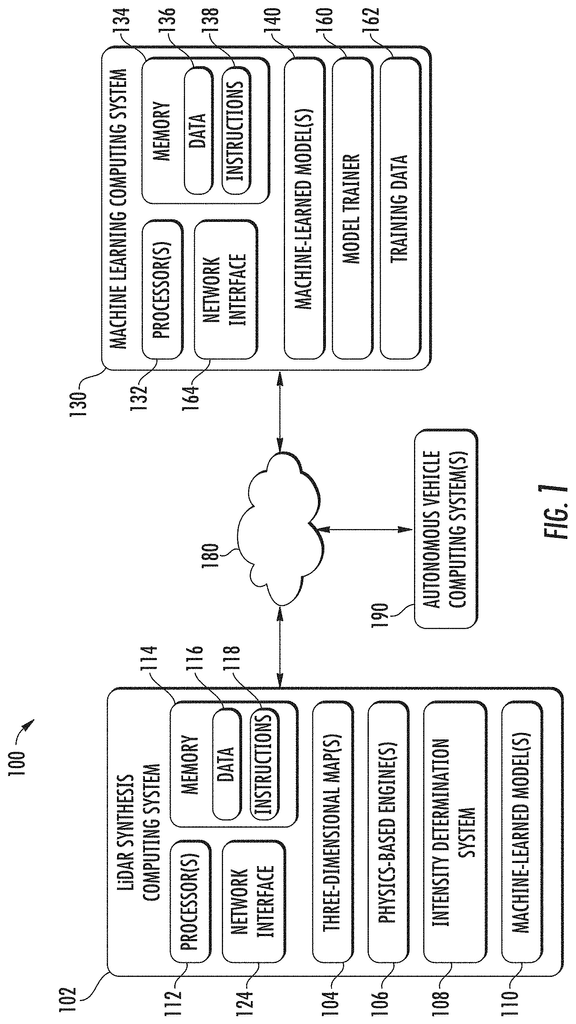

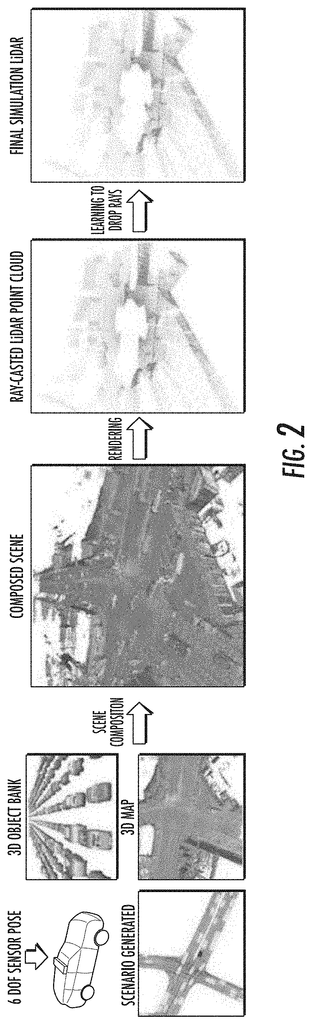

The present disclosure provides methods and systems that combine machine learning with physics-based systems to produce synthetic LiDAR data that accurately mimics real-world LiDAR systems. In particular, aspects of the disclosure combine machine-learned models, such as deep neural networks, with physics-based rendering to simulate the geometry and intensity of LiDAR sensors. A physics-based ray casting approach can be applied to a three-dimensional environment map to create an initial point cloud that mimics LiDAR data. A machine-learned model can then predict dropout probabilities for one or more points in the initial three-dimensional point cloud, yielding an adjusted three-dimensional point cloud that simulates LiDAR data more accurately.
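The final step, turning predicted dropout probabilities into an adjusted point cloud, can be sketched in a few lines. This is a minimal illustration, assuming the model outputs one dropout probability per point and that points are removed by independent random (Bernoulli) sampling; the text does not say whether points are sampled or thresholded, and the function name `apply_dropout` is hypothetical.

```python
import random
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]


def apply_dropout(
    points: Sequence[Point],
    drop_probs: Sequence[float],
    seed: Optional[int] = None,
) -> List[Point]:
    """Drop each point independently with its predicted dropout probability.

    drop_probs[i] is assumed to be the model's estimate that point i would be
    missing from a real LiDAR sweep.
    """
    if len(points) != len(drop_probs):
        raise ValueError("expected exactly one dropout probability per point")
    rng = random.Random(seed)  # seeded so a synthetic sweep is reproducible
    kept: List[Point] = []
    for point, p in zip(points, drop_probs):
        # Keep the point with probability 1 - p (one Bernoulli draw per point).
        if rng.random() >= p:
            kept.append(point)
    return kept


initial = [(5.0, 0.0, 0.0), (40.0, 1.0, 0.2), (6.0, -2.0, 0.1)]
probs = [0.0, 1.0, 0.0]  # stand-in for the machine-learned model's output
print(apply_dropout(initial, probs, seed=0))  # [(5.0, 0.0, 0.0), (6.0, -2.0, 0.1)]
```

Sampling rather than thresholding keeps some low-confidence points, so repeated simulations of the same scene differ slightly, much as real sweeps do; a fixed threshold would instead give one deterministic cloud.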

Background for Systems and Methods for Generating Synthetic Sensor Data via Machine Learning

Various sensors collect data that different systems, such as autonomous vehicles, can use to analyze their surrounding environment.

Light detection and ranging (LiDAR) sensors are one example. LiDAR measures the distance to objects by illuminating them with pulsed laser light and measuring the reflected pulses with a sensor. Differences in laser return times and wavelengths can then be used to create three-dimensional representations of the environment, such as three-dimensional point clouds. Radio detection and ranging (RADAR) sensors are another example of such sensors.
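The range measurement described above is a time-of-flight calculation: a pulse travels to the target and back, so the distance is half the round-trip path at the speed of light. The sketch below shows that step and the conversion of one return into a 3D point. The sensor-frame convention (x forward, y left, z up) and the function names are assumptions for illustration.

```python
import math
from typing import Tuple

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_time_of_flight(round_trip_s: float) -> float:
    """Distance to the target from a pulse's measured round-trip time."""
    # The pulse travels out and back, so the one-way distance is half the path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def beam_to_point(range_m: float, azimuth_rad: float, elevation_rad: float) -> Tuple[float, float, float]:
    """Convert one return (range plus beam angles) to x, y, z in the sensor frame.

    Assumed convention: x forward, y left, z up; azimuth measured from x toward y.
    """
    horizontal = range_m * math.cos(elevation_rad)
    return (
        horizontal * math.cos(azimuth_rad),
        horizontal * math.sin(azimuth_rad),
        range_m * math.sin(elevation_rad),
    )


r = range_from_time_of_flight(66.7e-9)  # a return after about 66.7 ns is roughly 10 m away
print(round(r, 2), beam_to_point(r, 0.0, 0.0))
```

Repeating this for every laser and firing angle in a sweep yields the three-dimensional point cloud the text refers to.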

Autonomous vehicles are one example application of LiDAR. An autonomous vehicle equipped with a LiDAR system can create a three-dimensional representation of its surroundings (e.g., the road surface, buildings, and pedestrians). The autonomous vehicle can attempt to understand its surroundings by applying various processing techniques to the LiDAR data collected by the LiDAR system. Once it has a good understanding of its surroundings, the autonomous vehicle can use various control techniques to navigate through that environment.

Aspects and benefits of embodiments of this disclosure will be set forth in part in the following description.

One example aspect of the disclosure is a computer-implemented method for generating synthetic light detection and ranging (LiDAR) data. A computing system that includes one or more computing devices obtains a three-dimensional map of an environment. The computing system determines a trajectory that describes a series of locations of a virtual object relative to the environment over time. The computing system performs ray casting on the three-dimensional map according to the trajectory to generate an initial three-dimensional point cloud that comprises a plurality of points. The computing system processes the initial three-dimensional point cloud with a machine-learned model to predict a respective dropout probability for one or more of the points. The computing system then generates an adjusted three-dimensional point cloud from the initial three-dimensional point cloud based at least in part on the dropout probabilities predicted by the machine-learned model.
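The ray casting step can be sketched in plain Python, assuming the three-dimensional map is stored as a triangle mesh and using the standard Möller-Trumbore ray-triangle intersection test. The mesh representation and the intersection algorithm are our choices; the text does not name either. For a trajectory, `cast_rays` would be called once per sensor position, and beam directions are assumed to already be expressed in the map frame. Sensor orientation, real beam patterns, and acceleration structures (such as bounding volume hierarchies) are omitted, and all function names are hypothetical.

```python
import math
from typing import List, Optional, Sequence, Tuple

Vec = Tuple[float, float, float]
Triangle = Tuple[Vec, Vec, Vec]


def _sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def ray_triangle(origin: Vec, direction: Vec, tri: Triangle, eps: float = 1e-9) -> Optional[float]:
    """Moller-Trumbore test: distance along the ray to the hit point, or None."""
    v0, v1, v2 = tri
    e1, e2 = _sub(v1, v0), _sub(v2, v0)
    pvec = _cross(direction, e2)
    det = _dot(e1, pvec)
    if abs(det) < eps:  # ray is parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    tvec = _sub(origin, v0)
    u = _dot(tvec, pvec) * inv_det  # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    qvec = _cross(tvec, e1)
    v = _dot(direction, qvec) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = _dot(e2, qvec) * inv_det
    return t if t > eps else None  # ignore hits behind the sensor


def cast_rays(origin: Vec, directions: Sequence[Vec], mesh: Sequence[Triangle],
              max_range: float = 100.0) -> List[Vec]:
    """Cast one ray per beam from a sensor position; keep the nearest hit per ray."""
    points: List[Vec] = []
    for d in directions:
        norm = math.sqrt(_dot(d, d))
        d = (d[0] / norm, d[1] / norm, d[2] / norm)  # unit length, so t is a distance
        hits = [t for t in (ray_triangle(origin, d, tri) for tri in mesh) if t is not None]
        if hits and min(hits) <= max_range:
            t = min(hits)
            points.append((origin[0] + t * d[0], origin[1] + t * d[1], origin[2] + t * d[2]))
    return points


# Toy map: a flat 100 x 100 ground plane made of two triangles.
ground = [((-50.0, -50.0, 0.0), (50.0, -50.0, 0.0), (50.0, 50.0, 0.0)),
          ((-50.0, -50.0, 0.0), (50.0, 50.0, 0.0), (-50.0, 50.0, 0.0))]
print(cast_rays((0.0, 0.0, 2.0), [(1.0, 0.0, -1.0), (1.0, 0.0, 0.0)], ground))
```

In this toy scene the downward-angled beam hits the ground about 2 units ahead of the sensor, while the horizontal beam never meets the plane and produces no point. The resulting cloud is exactly the kind of clean, idealized output that the machine-learned dropout step is meant to correct.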

Another example aspect of the disclosure is a computer-implemented method for generating synthetic radio detection and ranging (RADAR) data. A computing system that includes one or more computing devices obtains a three-dimensional map of an environment. The computing system determines a trajectory that describes the location of a virtual object relative to the environment over time. The computing system performs a data synthesis technique on the three-dimensional map according to the trajectory to generate synthetic RADAR data that comprises an initial three-dimensional point cloud of a plurality of points. The computing system processes the initial three-dimensional point cloud with a machine-learned model to predict a respective dropout probability for one or more of the points. The computing system then generates an adjusted three-dimensional point cloud from the initial three-dimensional point cloud based at least in part on the dropout probabilities predicted by the machine-learned model.
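Both the LiDAR and RADAR aspects rely on a trajectory that describes where the virtual sensor is over time. One simple way to represent it, assumed here for illustration, is a list of timestamps with matching positions, queried by linear interpolation whenever a simulated sweep is taken. Orientation is ignored, and the function name `position_at` is hypothetical.

```python
import bisect
from typing import Sequence, Tuple

Vec = Tuple[float, float, float]


def position_at(times: Sequence[float], positions: Sequence[Vec], t: float) -> Vec:
    """Linearly interpolate the virtual sensor's position at time t.

    `times` must be strictly increasing and aligned with `positions`.
    Queries outside the trajectory are clamped to its first or last position.
    """
    if not times or len(times) != len(positions):
        raise ValueError("need one position per timestamp")
    if t <= times[0]:
        return positions[0]
    if t >= times[-1]:
        return positions[-1]
    i = bisect.bisect_right(times, t)  # times[i - 1] <= t < times[i]
    t0, t1 = times[i - 1], times[i]
    alpha = (t - t0) / (t1 - t0)
    p0, p1 = positions[i - 1], positions[i]
    return (
        p0[0] + alpha * (p1[0] - p0[0]),
        p0[1] + alpha * (p1[1] - p0[1]),
        p0[2] + alpha * (p1[2] - p0[2]),
    )


# A virtual vehicle moves 10 m along x, then 10 m along y; sample the sensor at 10 Hz.
times = [0.0, 1.0, 2.0]
positions = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (10.0, 10.0, 0.0)]
sweep_poses = [position_at(times, positions, k / 10.0) for k in range(21)]
```

Each entry of `sweep_poses` would serve as the origin for one simulated sweep (for LiDAR, one round of ray casting against the map).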

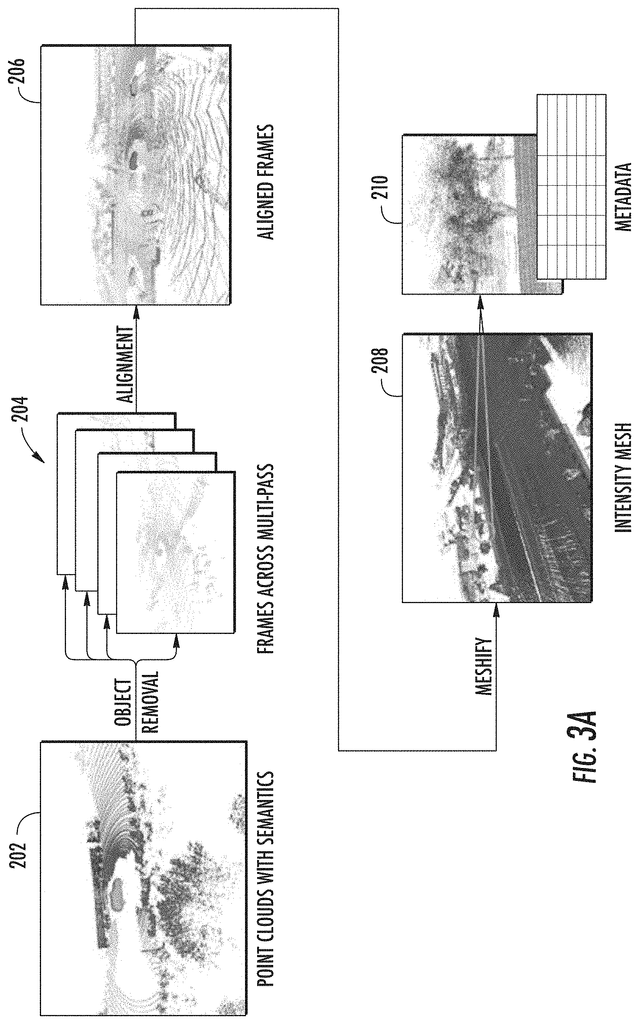

In another aspect, the operations include: obtaining a ground truth three-dimensional point cloud collected by a physical LiDAR system as the physical LiDAR system traveled along a trajectory through an environment; generating a ground truth dropout mask for the ground truth three-dimensional point cloud; obtaining a three-dimensional map of the environment; performing ray casting on the three-dimensional map according to the trajectory to generate an initial three-dimensional point cloud that comprises a plurality of points; and processing the initial three-dimensional point cloud using the machine-learned model to generate a dropout probability map for the plurality of points.
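These operations pair a ground truth dropout mask from a real sweep with the model's dropout probability map. The sketch below shows one way such a mask could be built and compared with predictions. It assumes that simulated and real points can be matched through a shared beam identifier (a hypothetical laser-index and azimuth-bin pair) and uses binary cross-entropy as the comparison; neither the matching scheme nor the loss is stated in the text above, and the function names are hypothetical.

```python
import math
from typing import List, Sequence, Set, Tuple

BeamId = Tuple[int, int]  # hypothetical indexing: (laser index, azimuth bin)


def ground_truth_dropout_mask(sim_beams: Sequence[BeamId], real_beams: Set[BeamId]) -> List[int]:
    """Label each simulated point 1 if the real sweep had no return on that beam, else 0."""
    return [0 if beam in real_beams else 1 for beam in sim_beams]


def binary_cross_entropy(pred_probs: Sequence[float], mask: Sequence[int], eps: float = 1e-7) -> float:
    """Mean binary cross-entropy between predicted dropout probabilities and the mask."""
    if not mask or len(pred_probs) != len(mask):
        raise ValueError("need one prediction per mask entry")
    total = 0.0
    for p, y in zip(pred_probs, mask):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(mask)


sim = [(0, 0), (0, 1), (1, 0), (1, 1)]  # beams that hit the map during ray casting
real = {(0, 0), (1, 0)}                 # beams with a return in the real sweep
mask = ground_truth_dropout_mask(sim, real)
loss = binary_cross_entropy([0.1, 0.8, 0.2, 0.6], mask)
```

A lower loss means the predicted dropout probabilities agree more closely with where the physical sensor actually lost returns, which is the signal a training loop would minimize.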

In a corresponding RADAR aspect, the operations include: obtaining a ground truth three-dimensional point cloud collected by a physical RADAR system as the physical RADAR system traveled along a trajectory through an environment; generating a ground truth dropout mask for the ground truth three-dimensional point cloud; obtaining a three-dimensional map of the environment; performing a data synthesis technique on the three-dimensional map according to the trajectory to generate synthetic RADAR data that comprises an initial three-dimensional point cloud of a plurality of points; and processing the initial three-dimensional point cloud using the machine-learned model.

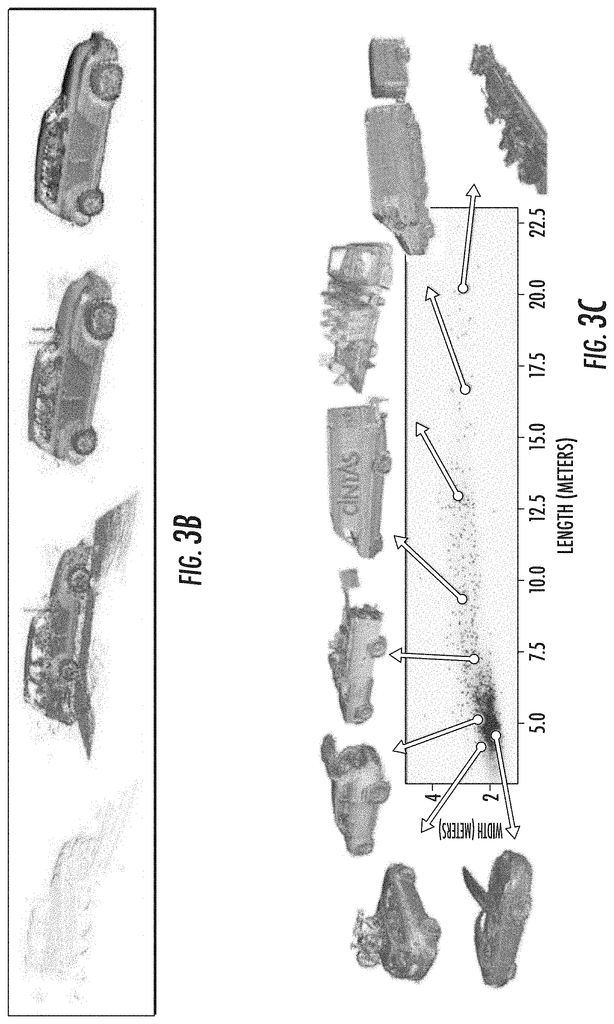

Another example aspect of the present disclosure is directed to one or more non-transitory computer-readable media that collectively store instructions that, when executed by a computing system comprising one or more computing devices, cause the computing system to generate three-dimensional representations of objects by performing operations. The operations include obtaining, by the computing system, one or more sets of real-world LiDAR data physically collected by one or more LiDAR systems in a real-world environment, the one or more sets of real-world LiDAR data respectively comprising one or more three-dimensional point clouds. The operations include defining, by the computing system, a three-dimensional bounding box for an object included in the real-world environment. The operations include identifying, by the computing system, points from the one or more three-dimensional point clouds that are included within the three-dimensional bounding box to generate a set of accumulated points. The operations include generating, by the computing system, a three-dimensional model of the object based at least in part on the set of accumulated points.
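Identifying the points that fall inside a bounding box and accumulating them across sweeps can be sketched as follows. The sketch assumes each box is parameterized by a center, a size (length, width, height), and a yaw angle about the vertical axis, and that a box is given for every sweep so that a moving object's points can be aligned in the object's own frame; these are assumptions, and the function names are hypothetical. Building the final three-dimensional model from the accumulated points (for example, by meshing) is not shown.

```python
import math
from typing import Iterable, List, Sequence, Tuple

Vec = Tuple[float, float, float]
Box = Tuple[Vec, Vec, float]  # (center, (length, width, height), yaw about z)


def points_in_box(points: Iterable[Vec], box: Box) -> List[Vec]:
    """Return the points inside an oriented 3D box, expressed in the box's own frame."""
    (cx, cy, cz), (length, width, height), yaw = box
    c, s = math.cos(yaw), math.sin(yaw)
    inside: List[Vec] = []
    for x, y, z in points:
        dx, dy, dz = x - cx, y - cy, z - cz
        # Rotate the offset by -yaw so the box axes line up with x and y.
        lx = c * dx + s * dy
        ly = -s * dx + c * dy
        if abs(lx) <= length / 2 and abs(ly) <= width / 2 and abs(dz) <= height / 2:
            inside.append((lx, ly, dz))
    return inside


def accumulate_object_points(sweeps: Sequence[Sequence[Vec]], boxes: Sequence[Box]) -> List[Vec]:
    """Merge the object's points from several sweeps into one object-centric set."""
    accumulated: List[Vec] = []
    for points, box in zip(sweeps, boxes):
        accumulated.extend(points_in_box(points, box))
    return accumulated


# The same object seen in two sweeps; it has moved and turned between them.
sweep1 = [(1.0, 0.0, 0.0), (3.0, 0.0, 0.0), (0.0, 0.5, 0.2)]
box1: Box = ((0.0, 0.0, 0.0), (4.0, 2.0, 2.0), 0.0)
sweep2 = [(10.0, 1.5, 0.0), (12.5, 0.0, 0.0)]
box2: Box = ((10.0, 0.0, 0.0), (4.0, 2.0, 2.0), math.pi / 2)
object_points = accumulate_object_points([sweep1, sweep2], [box1, box2])
```

Storing points in the box frame is what lets several partial views of a moving object line up; accumulating in world coordinates would smear the object along its path.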

Another example aspect of the present disclosure is directed to one or more non-transitory computer-readable media that collectively store instructions that, when executed by a computing system comprising one or more computing devices, cause the computing system to generate three-dimensional representations of objects by performing operations. The operations include obtaining one or more sets of real-world RADAR data physically collected by one or more RADAR systems in a real-world environment, each set comprising one or more three-dimensional point clouds. The operations include defining a three-dimensional bounding box for an object included in the real-world environment. The operations include identifying points from the one or more three-dimensional point clouds that are included within the three-dimensional bounding box to generate a set of accumulated points. The operations include generating a three-dimensional model of the object based at least in part on the set of accumulated points.

The autonomous vehicle technology described in this document can improve the safety and comfort of passengers, the safety of the vehicle's surroundings, and the experience of riders and operators, as well as provide the other improvements described herein. It may also improve an autonomous vehicle's ability to provide services to others and to support members of a community, such as people with limited mobility or those not served by alternative transportation options. In addition, the autonomous vehicles disclosed herein may reduce traffic congestion and provide alternative forms of transportation that may have environmental benefits.

Other aspects of the present disclosure are directed to various systems, apparatuses, non-transitory computer-readable media, user interfaces, and electronic devices.

These and other features, aspects, and benefits of various embodiments of this disclosure will be better understood with reference to the following description. The accompanying drawings, which are incorporated in and form a part of this specification, illustrate example embodiments of the present disclosure and, together with the description, serve to explain the related principles.

Generally, this disclosure is directed to systems and methods that combine physics-based systems with machine learning to produce synthetic LiDAR data that accurately mimics real-world LiDAR sensors. In particular, aspects of the disclosure combine machine-learned models, such as deep neural networks, with physics-based rendering to simulate the geometry and intensity of LiDAR sensors. A physics-based ray casting approach can be applied to a three-dimensional map of an environment to create an initial point cloud that mimics LiDAR data. A machine-learned model can then predict dropout probabilities for one or more points in the initial three-dimensional point cloud, yielding an adjusted three-dimensional point cloud that simulates LiDAR data more realistically. The simulated LiDAR data can be used as input to test autonomous vehicle control systems. The systems and methods of the present disclosure improve the quality of synthesized LiDAR data compared with purely physics-based rendering. This improved quality demonstrates the potential of the LiDAR simulation approach to generate realistic sensor data that can improve the safety of autonomous vehicles.

LiDAR sensors have become the sensor of choice for many robotics applications because they produce semi-dense 3D point clouds, from which 3D estimation is much easier and more accurate than from camera images. Deep learning has been applied to 3D point cloud data for tasks such as 3D object recognition, 3D semantic segmentation, and online mapping.

To develop a robust robotic system, such as a self-driving car, it is necessary to test the system under as many scenarios and conditions as possible. However, some corner cases, such as uncooperative animals, and rare events, such as traffic accidents, are difficult to test in the real world. It is therefore necessary to develop high-fidelity, reliable simulation systems that can be used to test the robot's reaction in these circumstances.

However, most simulation systems focus on simulating behavior and physics rather than sensory input. This isolates the robot's perception system from the simulated environment, even though the performance of the perception system is critical in these safety-critical scenarios. Modern perception systems are based on deep learning, and their performance improves with more labeled data. Even with crowd-sourcing, however, obtaining accurate 3D labels is expensive.

A much more cost-effective alternative is to use simulation to create new views of the real world (e.g., in the form of simulated sensor data, such as simulated LiDAR). Simulation makes it possible to generate a wide range of examples of rare events and safety-critical situations, which are essential for building reliable self-driving cars.

Many existing approaches to LiDAR simulation for autonomous driving focus primarily on handcrafted 3D primitives, such as buildings, cars, and trees. The scene is then ray cast with a graphics engine to create virtual LiDAR data. Although this simulated LiDAR accurately represents the handcrafted virtual environment, it does not reflect the statistics or characteristics of real-world LiDAR. Virtual LiDAR is cleaner and has sharper occlusions, whereas real LiDAR contains both spurious and missing points. Many factors contribute to this lack of realism, including unrealistic meshes and virtual worlds as well as simplified physics assumptions.

In particular, LiDAR generated by physics-based rendering has many artifacts. These artifacts occur because meshes generated from real-world scans are not geometrically perfect. Such meshes can contain holes as well as errors in the positions and normals of objects, owing to sensor noise, localization errors, segmentation errors, and so on.

Moreover, geometry is only one part of the equation. LiDAR point cloud intensity returns are used in many applications, such as lane detection and construction detection, because the reflectivity of certain materials is highly informative. Intensity returns are difficult to simulate, however, because they depend on many factors, including incident angle, material reflectance, laser bias, and atmospheric transmittance.
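To make the listed factors concrete, here is a deliberately simplified physics model of a single return's intensity. It assumes a diffuse (Lambertian) surface, inverse-square falloff with range, and Beer-Lambert attenuation over the out-and-back path. The `laser_gain` term is a hypothetical stand-in for per-laser bias. This is an illustration of why intensity is hard to simulate, not the intensity model of the disclosure; real returns deviate from such idealized formulas.

```python
import math


def simulated_intensity(
    range_m: float,
    incidence_rad: float,
    reflectance: float,
    laser_gain: float = 1.0,
    attenuation_per_m: float = 0.0,
) -> float:
    """Toy physics-based LiDAR intensity for a single return (arbitrary units).

    - reflectance: diffuse (Lambertian) reflectance of the material, 0..1
    - incidence_rad: angle between the beam and the surface normal
    - laser_gain: hypothetical per-laser scale factor standing in for laser bias
    - attenuation_per_m: atmospheric extinction coefficient (Beer-Lambert law)
    """
    if range_m <= 0.0:
        raise ValueError("range must be positive")
    cos_term = max(math.cos(incidence_rad), 0.0)  # grazing beams return little light
    transmittance = math.exp(-2.0 * attenuation_per_m * range_m)  # out-and-back path
    return laser_gain * reflectance * cos_term * transmittance / range_m ** 2


# Same material and range, three incident angles: intensity falls as the beam grazes.
for degrees in (0, 60, 85):
    print(degrees, simulated_intensity(10.0, math.radians(degrees), 0.5))
```

Every argument here (material reflectance, geometry-dependent incident angle, per-laser gain, weather-dependent attenuation) must be known or estimated for each point, which illustrates why purely physics-based intensity simulation is difficult.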
