Invented by Stefan Johannes Josef HOLZER, Yuheng Ren, Abhishek Kar, Alexander Jay Bruen Trevor, Krunal Ketan Chande, Martin Josef Nikolaus Saelzle, Radu Bogdan Rusu, Fyusion Inc

Real-time mobile device capture refers to the ability to capture and process images and video in real-time using a mobile device. This technology has been around for a while, but recent advancements in mobile hardware and software have made it more accessible and efficient. This has led to an increase in the use of real-time mobile device capture for various applications, including AR/VR art-styled content generation.

AR/VR art-styled content generation involves the use of augmented reality (AR) and virtual reality (VR) technologies to create immersive and interactive art experiences. This can include anything from 3D sculptures and paintings to interactive installations and performances. The ability to capture and generate this type of content in real-time using a mobile device has opened up new possibilities for artists and creators.

One of the main drivers of this market is the increasing demand for immersive experiences among consumers. AR/VR art-styled content offers a unique and engaging way for consumers to interact with art and entertainment. This has led to a growing interest in this type of content from both consumers and businesses.

Another driver of this market is the increasing availability of AR/VR hardware and software. As these technologies become more accessible and affordable, more people are able to create and experience AR/VR art-styled content. This has led to a growing community of artists and creators who are pushing the boundaries of what is possible with these technologies.

Overall, the market for real-time mobile device capture and generation of AR/VR art-styled content is poised for significant growth in the coming years. As technology continues to advance and consumer demand for immersive experiences increases, we can expect to see more innovative and exciting AR/VR art-styled content being created and shared.

The Fyusion Inc invention works as follows

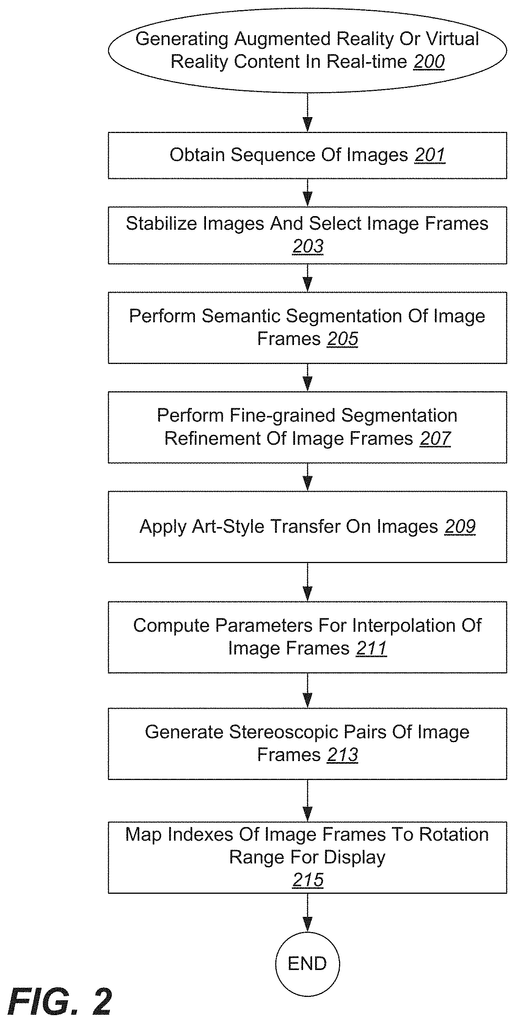

Various embodiments describe systems and processes for generating AR/VR content. In one aspect, a method for generating a 3D projection of an object in a virtual reality or augmented reality environment comprises obtaining a sequence of images using a single-lens camera. Each image contains at least a portion of the object, along with other subject matter. A trained segmenting neural network segments the object from the sequence of images, producing a sequence of segmented object images, to which an art-style transfer is applied using a trained transfer neural network. Interpolation parameters for the object are computed on the fly, and stereoscopic pairs are generated for points along the camera translation from the refined sequence of segmented object images. These pairs are used to display the object as a 3D projection in the virtual reality or augmented reality environment. Segmented image indices are mapped to a rotation range for display in that environment.

Background for Real-time mobile device capture, and generation of AR/VR art-styled content

Modern computing platforms and technologies are shifting to mobile and wearable devices, which include camera sensors as native acquisition streams. This has made it more obvious that people want to preserve digital moments in a different format than traditional flat (2D) images and videos. Digital media formats are often restricted to passive viewing. A 2D flat image, for example, can only be viewed from one side and cannot be zoomed in or out. Traditional digital media formats such as 2D flat images are not well suited to reproducing memories or events with high fidelity.

Producing combined images, such as a panorama or a three-dimensional (3D) model or image, requires combining data from multiple images, which can involve interpolation or extrapolation. Most existing methods of interpolation and extrapolation require significant amounts of data beyond what is available in the images themselves. This additional data must describe the scene structure densely, for example as a dense depth map (in which a depth value is stored for every pixel) or an optical flow map (which stores a motion vector for every pixel between the images). Other options include computer generation of polygons, texture mapping over a three-dimensional mesh, and/or 3D models. These methods also require significant processing time and resources, which limits processing speed as well as transfer rates when the data is sent over a network. It is therefore desirable to have improved methods for extrapolating and presenting 3D image data.

Various mechanisms and processes are provided for generating synthetic stereo pairs of images from monocular sequences of images. In one aspect, which may include at least some of the subject matter of any of the preceding or following examples and aspects, a method generates a 3D projection of an object in a virtual reality (or augmented reality) environment. The method involves obtaining a sequence of images with a single-lens camera. Each image in the sequence contains at least a portion of overlapping subject matter, which includes the object.

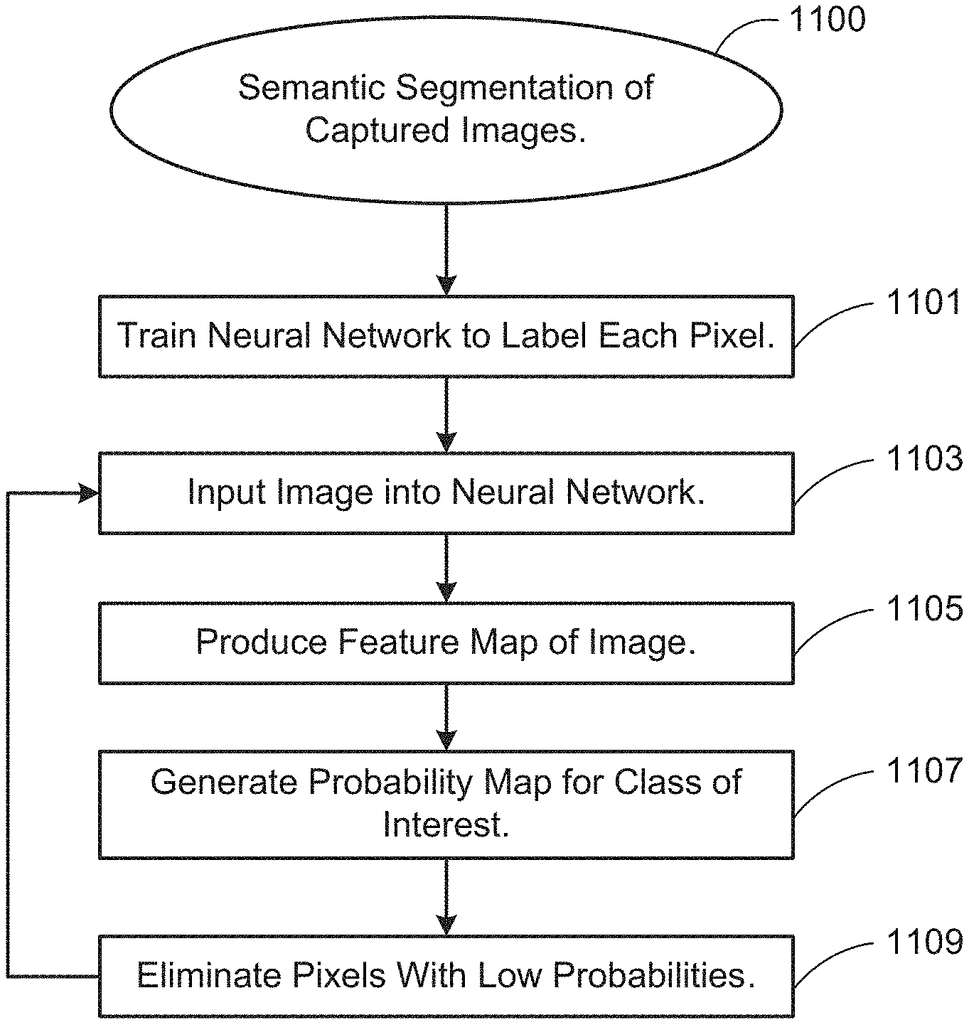

The method also involves segmenting the object from the sequence of images using a trained segmenting neural network to create a sequence of segmented object images. Fine-grained segmentation may be used to refine the sequence of segmented object images. A temporal conditional random field may be used for this refinement. The method also involves applying an art-style transfer to the sequence of segmented object images using a trained transfer neural network. The transfer neural network includes convolution layers, residual layers, and deconvolution layers.
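To make the convolution, residual, and deconvolution structure concrete, the sketch below traces one spatial dimension of an image through such a stack. The layer count, kernel sizes, strides, and padding are assumptions chosen for illustration (the source does not give them); only the standard output-size formulas for convolution and transposed convolution are relied on.

```python
def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial output size of a standard convolution layer."""
    return (size + 2 * padding - kernel) // stride + 1


def deconv_out(size: int, kernel: int, stride: int, padding: int,
               output_padding: int = 0) -> int:
    """Spatial output size of a transposed convolution (deconvolution) layer."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding


def trace_transfer_network(size: int, num_residual: int = 5) -> list:
    """Follow one spatial dimension through a hypothetical style-transfer stack.

    All layer hyperparameters here are illustrative assumptions.
    """
    trace = [("input", size)]
    # First convolution keeps the resolution (9x9 kernel, stride 1, padding 4).
    size = conv_out(size, kernel=9, stride=1, padding=4)
    trace.append(("conv1", size))
    # Two stride-2 convolutions each halve the resolution.
    for name in ("conv2", "conv3"):
        size = conv_out(size, kernel=3, stride=2, padding=1)
        trace.append((name, size))
    # Residual blocks leave the resolution unchanged (3x3, stride 1, padding 1).
    for i in range(num_residual):
        size = conv_out(size, kernel=3, stride=1, padding=1)
        trace.append((f"res{i + 1}", size))
    # Two deconvolutions undo the two stride-2 downsampling steps.
    for name in ("deconv1", "deconv2"):
        size = deconv_out(size, kernel=3, stride=2, padding=1, output_padding=1)
        trace.append((name, size))
    # Final convolution maps features back to an image at full resolution.
    size = conv_out(size, kernel=9, stride=1, padding=4)
    trace.append(("final_conv", size))
    return trace


if __name__ == "__main__":
    for layer, width in trace_transfer_network(256):
        print(f"{layer:>10}: {width}")
```

With these assumed settings the stylized output has the same resolution as the segmented input frame, which is what allows the stylized frames to be substituted for the originals in later steps.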

The method also includes computing interpolation parameters on the fly. These parameters can be used to create interpolated images at any point along the camera translation in real time. The method further involves generating stereoscopic pairs from the refined sequence of segmented object images for display in a 3D environment. Stereoscopic pairs may be generated for one or more points along the camera translation, and a stereoscopic pair may include an interpolated virtual frame. A selected frame may be modified by rotating its image so that the selected frames correspond to views angled toward the object. A projection of the object is created by combining the sequence of segmented object images. This projection shows a 3D view of the object without the need for polygon generation. Segmented image indices are then mapped to a rotation range for display in virtual reality or augmented reality. Mapping the segmented image indices can include mapping physical viewing locations to a frame index.
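The index mapping described above can be sketched as follows. This is a minimal illustration, not the patented implementation: the function names, the rotation range of -45 to 45 degrees, and the angular offset between the two eyes are all assumptions made for the example.

```python
def angle_to_frame_index(angle_deg: float, num_frames: int,
                         min_deg: float = -45.0, max_deg: float = 45.0) -> int:
    """Map a physical viewing angle onto the index of a segmented frame.

    The rotation range [min_deg, max_deg] is an assumed example value.
    """
    if num_frames < 1:
        raise ValueError("need at least one frame")
    # Clamp so that viewpoints outside the captured arc show the nearest end frame.
    clamped = max(min_deg, min(max_deg, angle_deg))
    fraction = (clamped - min_deg) / (max_deg - min_deg)
    return round(fraction * (num_frames - 1))


def stereo_pair_indices(angle_deg: float, num_frames: int,
                        eye_offset_deg: float = 4.0,
                        min_deg: float = -45.0, max_deg: float = 45.0) -> tuple:
    """Pick a (left, right) frame pair straddling the viewing angle.

    eye_offset_deg is a hypothetical angular separation between the two eyes.
    """
    half = eye_offset_deg / 2.0
    left = angle_to_frame_index(angle_deg - half, num_frames, min_deg, max_deg)
    right = angle_to_frame_index(angle_deg + half, num_frames, min_deg, max_deg)
    return left, right


if __name__ == "__main__":
    # 91 frames spread over a 90-degree arc: one frame per degree.
    print(angle_to_frame_index(0.0, 91))
    print(stereo_pair_indices(0.0, 91))
```

Because each eye simply looks up a different frame of the same segmented sequence, no polygon mesh is needed to produce the impression of depth; where the requested angle falls between captured frames, an interpolated virtual frame could be substituted for the rounded index.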

Other embodiments of this disclosure include the corresponding devices, systems and computer programs that are configured to perform the described actions. A non-transitory computer-readable medium may include one or more programs that can be executed by a computer system. In some embodiments, one or more of the programs includes instructions for performing actions according to described methods and systems. Each of these other implementations can optionally include one or several of the following features.

In another aspect, which may include at least a part of the subject matter of any of the preceding or following examples and aspects, a system for generating a three-dimensional (3D) projection of an object in a virtual reality (or augmented reality) environment includes a single-lens camera that captures a sequence of images along a camera translation. Each image in the sequence contains at least a portion of overlapping subject matter, which includes the object. The system also includes a display module, a processor, and memory that stores one or more programs for execution by the processor. One or more of these programs include instructions for performing the actions of the described methods and systems.

These and other embodiments will be described in detail below, with reference to the Figures.

We will now refer to specific examples of disclosure, including the best methods contemplated and used by the inventors in carrying out the disclosure. The accompanying drawings show examples of specific embodiments. Although the present disclosure is presented in conjunction with specific embodiments, it should be understood that it does not limit the disclosure to those embodiments. It is, however, intended to include all alternatives, modifications, or equivalents that may be included in the scope and spirit of the disclosure, as described by the appended claims.

The following description sets out specific details to provide a thorough understanding of the disclosure. Some embodiments of this disclosure can be implemented without some or all of these details. In other instances, well-known process operations are not described in detail, to avoid obscuring the present disclosure.

As described in a related U.S. patent application, a surround view, according to various embodiments, allows a user to adjust the viewpoint of visual information on a screen.

U.S. patent application Ser. No. 14/800,638, filed by Holzer et al. on Jul. 15, 2015 and titled ARTIFICIALLY RENDERING IMAGES, describes systems and methods for rendering artificial intermediate images through view interpolation of one or more existing images, in order to create missing frames for an improved viewing experience. According to various embodiments, artificial images can be interpolated between selected keyframes or captured image frames, and/or used in conjunction with one or more frames of a stereo pair. This interpolation can be used in an infinite smoothing technique that generates any number of intermediate frames for a smooth transition between frames.

As described in a related U.S. patent application, systems and methods of image stabilization can also be used to create stereo pairs of image frames, which are presented to the user to give depth perception.

Overview

According to various embodiments, a surround view is a multi-view interactive media representation. A surround view can contain content for virtual reality (VR) and/or augmented reality (AR), and may be presented to the user with a viewing device such as a virtual reality headset. For example, a structured concave sequence of images may be captured live around an object of interest. This surround view can then be presented as a hologram model when viewed with a viewing device. The term "AR/VR" is used when referring to both virtual reality and augmented reality.

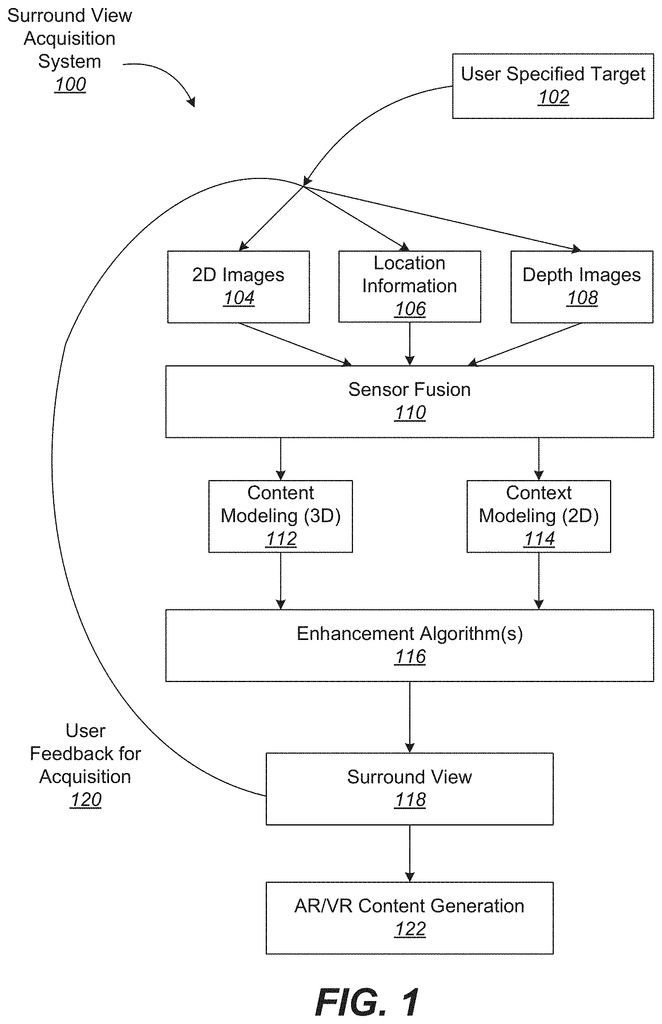

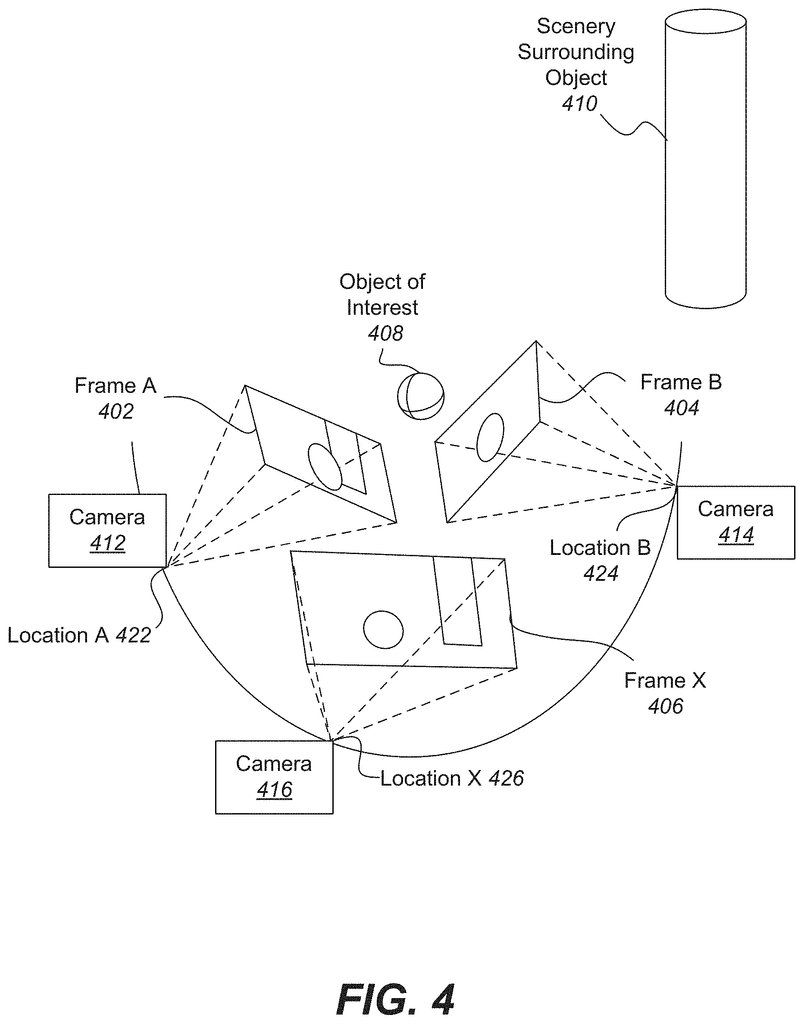

The data used to create a surround view can come from many sources. A surround view can be generated from data that includes two-dimensional (2D) images. These 2D images can be captured by a camera moving along a camera translation, which may or may not be uniform. They can be captured at constant intervals of time or of distance along the camera translation. The 2D images may include color image data streams, such as multiple image sequences or video data, or multiple images in any number of formats, depending on what the application requires. A surround view can also be generated from location data, such as GPS, WiFi, IMU (inertial measurement unit) systems, accelerometers, and magnetometers. Depth images are another data source that can be used to create a surround view.

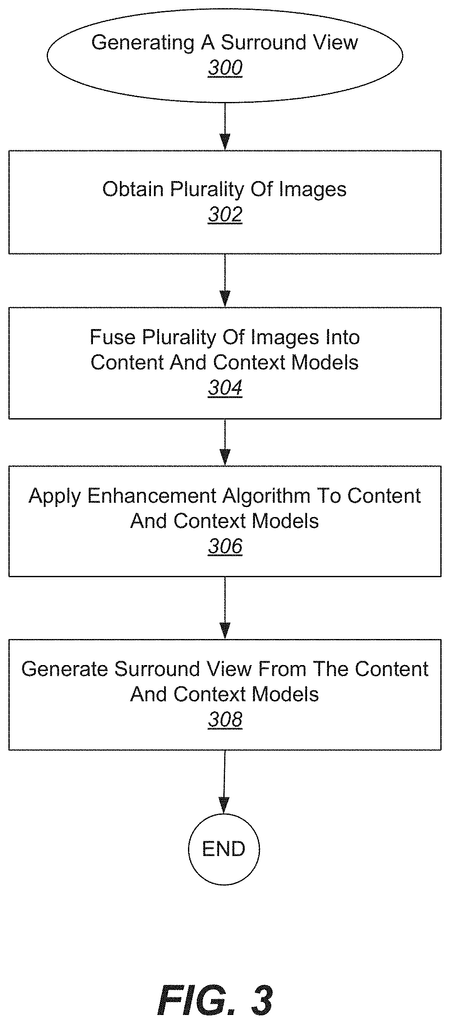

In the current example embodiment, the data can then be fused together. A surround view can be generated from a combination of 2D images and location data. In other embodiments, depth images and location data can be used together. Depending on the application, different combinations of image data can be used with location information. In the current example, the fused data is used to model content and context. The context can be defined as the scenery surrounding the object of interest. The content may be presented as a three-dimensional model depicting the object of interest, although in some embodiments the content can be presented as a two-dimensional image. In some embodiments, the context can be represented as a two-dimensional model of the scenery surrounding the object. Although in many examples the context provides two-dimensional views of the scenery around the object of interest, some embodiments allow the context to include three-dimensional elements. Such a surround view can be generated using systems and methods described in a related U.S. patent application.

In the current example embodiment, one or more enhancement algorithms can be applied. In particular embodiments, various algorithms can be employed during the capture of surround view data. These algorithms can be used to enhance the user experience: for instance, automatic frame selection, image stabilization, and object segmentation. The enhancement algorithms can be applied to image data after acquisition, or to surround view data while the image data is being captured. Automatic frame selection can reduce storage requirements by saving only keyframes chosen from all the captured images. It also allows for a more uniform spatial distribution of viewpoints. Image stabilization can be used to stabilize keyframes within a surround view, producing improvements such as smoother transitions and enhanced focus.
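One way to picture automatic frame selection is as picking the captured frames whose viewing angles lie closest to evenly spaced targets. The sketch below assumes a per-frame yaw angle is available (for example from IMU data); the function name and this selection rule are illustrative assumptions, not the method the source specifies.

```python
def select_keyframes(yaw_deg: list, num_keyframes: int) -> list:
    """Return indices of frames whose yaw is closest to evenly spaced targets.

    yaw_deg holds one (assumed IMU-derived) yaw angle per captured frame.
    """
    if not yaw_deg or num_keyframes < 1:
        return []
    lo, hi = min(yaw_deg), max(yaw_deg)
    if num_keyframes == 1 or hi == lo:
        return [0]
    step = (hi - lo) / (num_keyframes - 1)
    selected = []
    for k in range(num_keyframes):
        target = lo + k * step
        # Nearest captured frame to this target angle (first one wins on ties).
        best = min(range(len(yaw_deg)), key=lambda i: abs(yaw_deg[i] - target))
        if best not in selected:
            selected.append(best)
    return sorted(selected)


if __name__ == "__main__":
    # A non-uniform sweep: the camera lingered near 0, 10, and 30 degrees.
    yaw = [0.0, 1.0, 2.0, 10.0, 11.0, 12.0, 20.0, 29.0, 30.0]
    print(select_keyframes(yaw, 4))
```

Only the selected frames would need to be stored, and because the targets are evenly spaced in angle, the kept viewpoints are spread more uniformly than the raw capture.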

View interpolation can also be used to enhance the viewing experience. Interpolation is particularly useful for avoiding sudden "jumps" between stabilized frames: synthetic intermediate views can be created on the fly. View interpolation can be applied to foreground regions only, such as the object of interest. It can be informed by IMU data and content-weighted keypoint tracking, as well as by denser pixel-to-pixel matches. The process may be simplified if depth information is available. In some embodiments, view interpolation is applied during surround view capture. In other embodiments, it is applied during surround view generation. These and other enhancement algorithms are described in related U.S. patent applications.
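As a rough illustration of creating synthetic intermediate views between two stabilized frames, the sketch below uses a plain linear cross-fade. This is a deliberate simplification: it ignores the keypoint tracking, IMU data, and pixel-to-pixel matching that the text says inform the interpolation, and the function names and the nested-list image representation are assumptions for the example.

```python
def blend_frames(a: list, b: list, alpha: float) -> list:
    """Linearly blend two equally sized grayscale frames (rows of intensities).

    alpha = 0 returns frame a, alpha = 1 returns frame b.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    return [
        [(1.0 - alpha) * pa + alpha * pb for pa, pb in zip(row_a, row_b)]
        for row_a, row_b in zip(a, b)
    ]


def intermediate_frames(a: list, b: list, count: int) -> list:
    """Create `count` evenly spaced synthetic frames strictly between a and b."""
    return [blend_frames(a, b, k / (count + 1)) for k in range(1, count + 1)]


if __name__ == "__main__":
    frame_a = [[0.0, 0.0]]
    frame_b = [[8.0, 4.0]]
    for frame in intermediate_frames(frame_a, frame_b, 3):
        print(frame)
```

Because `count` is a free parameter, any number of in-between frames can be produced for a given pair, which is the property that makes the transition arbitrarily smooth.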

The surround view data may be used to generate content for AR and/or VR viewing. According to various embodiments, additional image processing can generate a stereoscopic three-dimensional view of an object of interest, to be presented to a user through a viewing device such as a virtual reality headset. The subject matter in the images can be separated into context (background) and content (foreground) by semantic segmentation with neural networks. Fine-grained segmentation refinement with a temporal conditional random field may also be used. This separation can be used to remove background imagery so that only the imagery corresponding to the object of interest is displayed, using systems and methods described in a related U.S. patent application. To create stereoscopic image pairs, image stabilization may be achieved by determining focal length and image rotation, as described in a related U.S. patent application.
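Once a segmentation mask is available, removing the context from a frame amounts to keeping only the pixels marked as content. The sketch below assumes a binary mask (1 for the object of interest, 0 for background) has already been produced by some segmentation step; the function name, the nested-list image representation, and the black fill color are illustrative assumptions.

```python
def remove_background(image: list, mask: list, fill=(0, 0, 0)) -> list:
    """Keep pixels where mask is 1 (content); replace the rest (context) with fill.

    image is a list of rows of RGB tuples, mask a list of rows of 0/1 values.
    """
    if len(image) != len(mask) or any(len(r) != len(m) for r, m in zip(image, mask)):
        raise ValueError("image and mask must have the same dimensions")
    return [
        [pixel if keep else fill for pixel, keep in zip(row, mask_row)]
        for row, mask_row in zip(image, mask)
    ]


if __name__ == "__main__":
    image = [
        [(10, 20, 30), (40, 50, 60)],
        [(70, 80, 90), (100, 110, 120)],
    ]
    mask = [
        [1, 0],
        [0, 1],
    ]
    for row in remove_background(image, mask):
        print(row)
```

Applying the same function to every frame in the sequence yields the sequence of segmented object images that later steps stylize and pair into stereoscopic views.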

Art-style transfer can also be applied to the image frames, changing the images in real time. In various embodiments, a trained neural network is used to interpolate native image data and convert it into a specific style. The style could be associated with a specific artist or theme. View interpolation can also be used to smooth the transition between image frames without limit, by creating any number of intermediate artificial frames. In various embodiments, the view interpolation parameters can be predetermined so that images render in real time during runtime viewing.
