Invented by James M. Powderly, Savannah Niles, Haney Awad, William Wheeler, Nari Choi, Timothy Michael Stutts, Josh Anon, Jeffrey Scott Sommers, Magic Leap Inc

Keyboards designed for MR/VR/AR environments are different from traditional keyboards as they need to provide a seamless and intuitive interaction with the virtual world. These keyboards often incorporate motion tracking sensors or haptic feedback mechanisms to enhance the user’s experience. For example, some MR/VR/AR keyboards can detect hand movements and gestures, allowing users to type or interact with virtual objects without the need for physical keys.

Similarly, displays for MR/VR/AR are designed to provide an immersive visual experience. These displays often feature high resolutions, wide field-of-view, and low latency to minimize motion sickness and enhance the sense of presence. Some displays also incorporate eye-tracking technology to enable more natural interactions by following the user’s gaze and adjusting the virtual content accordingly.

The market for MR/VR/AR keyboards and displays is driven by several factors. Firstly, the increasing popularity of MR/VR/AR applications in various industries such as gaming, entertainment, education, and healthcare is fueling the demand for specialized input and output devices. As more companies and individuals invest in MR/VR/AR technologies, the need for efficient and user-friendly keyboards and displays becomes crucial.

Secondly, advancements in technology are enabling the development of more sophisticated MR/VR/AR keyboards and displays. With the progress in motion tracking, haptic feedback, eye-tracking, and display technologies, manufacturers can now create more immersive and interactive devices that enhance the overall MR/VR/AR experience.

Furthermore, the growing consumer interest in MR/VR/AR devices is also contributing to the market growth. Consumers are increasingly looking for innovative ways to engage with digital content, and MR/VR/AR technologies offer a unique and immersive experience. As a result, the demand for compatible keyboards and displays is rising to complement these devices.

In terms of market trends, there is a growing focus on wireless and compact keyboards for MR/VR/AR environments. Manufacturers are developing keyboards that can connect seamlessly with MR/VR/AR headsets or controllers, eliminating the need for additional wires and enhancing mobility. Additionally, compact keyboards are gaining popularity as they offer a more portable and convenient solution for users on the go.

In conclusion, the market for keyboards and displays for MR/VR/AR is witnessing significant growth as these technologies become more prevalent in various industries. The demand for specialized input and output devices tailored for MR/VR/AR environments is increasing, driven by the rising adoption of MR/VR/AR devices, technological advancements, and growing consumer interest. As the MR/VR/AR market continues to expand, we can expect further innovations in keyboards and displays to enhance the immersive experience and meet the evolving needs of users.

The Magic Leap Inc invention works as follows

The disclosure includes user interfaces for virtual, augmented, and mixed-reality display systems. These user interfaces can be virtual keyboards or physical keyboards. Techniques are described to display, configure, and/or interact with the user interfaces.

Background for Keyboards and displays for mixed, virtual, and augmented reality

Field

The present disclosure relates primarily to virtual reality systems and augmented-reality imaging and visualisation systems. It also focuses on keyboards that can be used with virtual reality systems and augmented-reality imaging and visualisation systems.

Description of Related Art

Modern computing and display technologies have facilitated the development of systems for so-called “virtual reality,” “augmented reality,” or “mixed reality” experiences, in which digitally reproduced images are presented to a user in such a way that they can be perceived as real. A virtual reality, or “VR,” scenario involves the presentation of digital image information without transparency to any other real-world visual input. An augmented reality, or “AR,” scenario typically involves the presentation of digital image information as an enhancement to the user’s view of the real world around them. A mixed reality, or “MR,” scenario is a kind of AR scenario that relates to merging the real and virtual worlds to create new environments where real and virtual objects co-exist and interact in real time.

The systems and methods described herein address various challenges related to VR, AR, and MR technology.

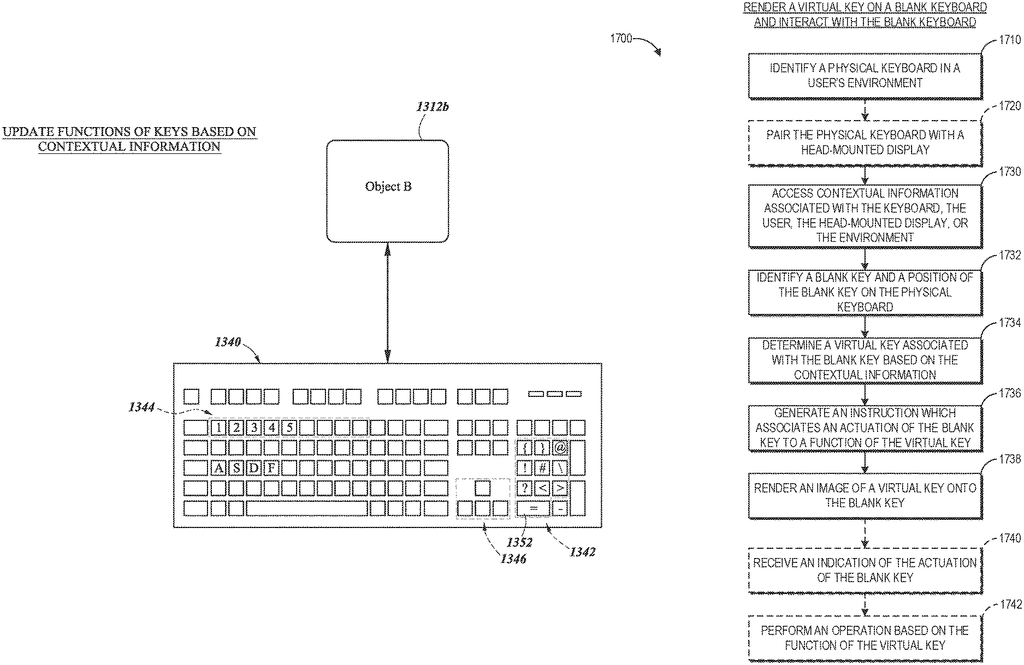

The system can be configured dynamically to perform functions on at least part of the keyboard, using the specification.

The system may include: a display configured to present virtual reality, augmented reality, or mixed reality content to a user; a physical keyboard comprising one or more keys; one or more sensors configured to produce an output indicative of the location of one or more of the user’s hands or fingers relative to the physical keyboard; one or more hardware processors; and one or more computer storage media storing instructions which, when executed, cause the system to perform operations such as: determining the location of the hands or fingers relative to the physical keyboard using the output from the one

The hardware processor is configured by the instructions to: receive from the sensor a signal indicative of a user interaction; determine the type of user interaction from the signal; and send a haptic signal to the input.
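The receive, classify, and respond sequence described above can be illustrated with a minimal Python sketch. Everything specific in it is an assumption made for illustration: the source does not name the interaction types, the contents of the sensor signal, or the haptic parameters, so `Interaction`, `SensorSignal`, `HapticSignal`, and the two thresholds are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Interaction(Enum):
    """Hypothetical interaction types; the source does not enumerate them."""
    TAP = "tap"
    PRESS_AND_HOLD = "press_and_hold"
    SWIPE = "swipe"


@dataclass(frozen=True)
class SensorSignal:
    """Assumed sensor reading for one contact with the input device."""
    duration_s: float  # how long the finger stayed in contact
    travel_mm: float   # how far the finger moved while in contact


@dataclass(frozen=True)
class HapticSignal:
    """Assumed haptic parameters; the field names are illustrative only."""
    pattern: str
    intensity: float  # 0.0 (off) to 1.0 (strongest)


# Illustrative thresholds, not values taken from the patent.
SWIPE_MIN_TRAVEL_MM = 10.0
HOLD_MIN_DURATION_S = 0.5

HAPTIC_BY_INTERACTION = {
    Interaction.TAP: HapticSignal("click", 0.3),
    Interaction.PRESS_AND_HOLD: HapticSignal("buzz", 0.6),
    Interaction.SWIPE: HapticSignal("ramp", 0.4),
}


def classify_interaction(signal: SensorSignal) -> Interaction:
    """Determine the type of user interaction from the sensor signal."""
    if signal.travel_mm >= SWIPE_MIN_TRAVEL_MM:
        return Interaction.SWIPE
    if signal.duration_s >= HOLD_MIN_DURATION_S:
        return Interaction.PRESS_AND_HOLD
    return Interaction.TAP


def haptic_signal_for(signal: SensorSignal) -> HapticSignal:
    """Pick the haptic signal to send back for this interaction."""
    return HAPTIC_BY_INTERACTION[classify_interaction(signal)]
```

A lookup table keeps the mapping from interaction type to haptic response separate from the classification rules, so either could be changed without touching the other.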

The system includes: an outward-facing imaging system that can image the user’s physical environment; a display device configured to present a virtual display to the user; and a hardware processor programmed to: receive a first image of the environment; determine the location of a keyboard using the image; determine the location of the virtual display based on the keyboard’s location; determine the size of the virtual display based on a size preference and the location of the virtual display; and instruct the display device to render the virtual display with the determined size.
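The placement and sizing steps can be sketched in Python under some loudly stated assumptions. The keyboard position is taken as already detected (the image analysis itself is not shown), positions are expressed in a head-centred frame, and the size preference is modelled as a preferred angular width. None of these choices comes from the source; `Vec3`, `locate_virtual_display`, and `size_virtual_display` are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    """Position in metres in an assumed head-centred frame (head at the origin)."""
    x: float
    y: float
    z: float


def locate_virtual_display(keyboard: Vec3, offset: Vec3) -> Vec3:
    """Place the virtual display at a fixed offset from the detected keyboard."""
    return Vec3(keyboard.x + offset.x, keyboard.y + offset.y, keyboard.z + offset.z)


def size_virtual_display(display: Vec3, preferred_width_deg: float,
                         aspect_ratio: float = 16 / 9) -> tuple[float, float]:
    """Return (width, height) in metres for a display at the given location.

    The size preference is assumed to be an angular width, so the physical
    width grows with the distance between the user's head and the display.
    """
    if not 0.0 < preferred_width_deg < 180.0:
        raise ValueError("preferred_width_deg must be between 0 and 180")
    distance = math.sqrt(display.x ** 2 + display.y ** 2 + display.z ** 2)
    width = 2.0 * distance * math.tan(math.radians(preferred_width_deg) / 2.0)
    return width, width / aspect_ratio
```

Expressing the preference as an angle is one way to make the size depend on both the preference and the display location, as the summary describes: the same preference yields a physically larger display when it is placed farther away.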

In some cases, a system includes: a virtual, augmented, or mixed reality display capable of displaying data at multiple depths; and a hardware processor configured to: display at least a portion of the image data associated with a first application at a first depth; and display at least a portion of the image data associated with a second application at a second depth, where the first and second applications are related to one another and the second depth is selected to be a fixed distance from the first depth.
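The fixed-distance relationship between the two application depths can be shown with a short Python sketch. The class names, the example applications, and the offset value are assumptions for illustration; the source only states that the second depth is kept at a fixed distance from the first.

```python
from dataclasses import dataclass


@dataclass
class AppWindow:
    """Hypothetical record of where an application's imagery is displayed."""
    name: str
    depth_m: float  # distance from the viewer, in metres


class LinkedWindows:
    """Keeps a second application's depth at a fixed offset from the first."""

    def __init__(self, first: AppWindow, second: AppWindow, fixed_offset_m: float):
        self.first = first
        self.second = second
        self.fixed_offset_m = fixed_offset_m
        self._sync()

    def _sync(self) -> None:
        # The second depth is always derived from the first depth.
        self.second.depth_m = self.first.depth_m + self.fixed_offset_m

    def move_first(self, new_depth_m: float) -> None:
        """Move the first application; the second follows at the fixed offset."""
        if new_depth_m <= 0:
            raise ValueError("depth must be positive")
        self.first.depth_m = new_depth_m
        self._sync()
```

For example, linking a hypothetical editor window to a virtual keyboard with an offset of -0.25 m keeps the keyboard a quarter of a metre closer to the viewer wherever the editor is moved.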

A wearable VR/AR/MR system can be configured to present 2D or 3D virtual images to a user. The images may be still images, frames of a video, a video, or combinations of these. The wearable system can include a wearable device that presents a VR, AR, or MR environment for user interaction. The wearable VR/AR/MR device can be a head-mounted device (HMD).

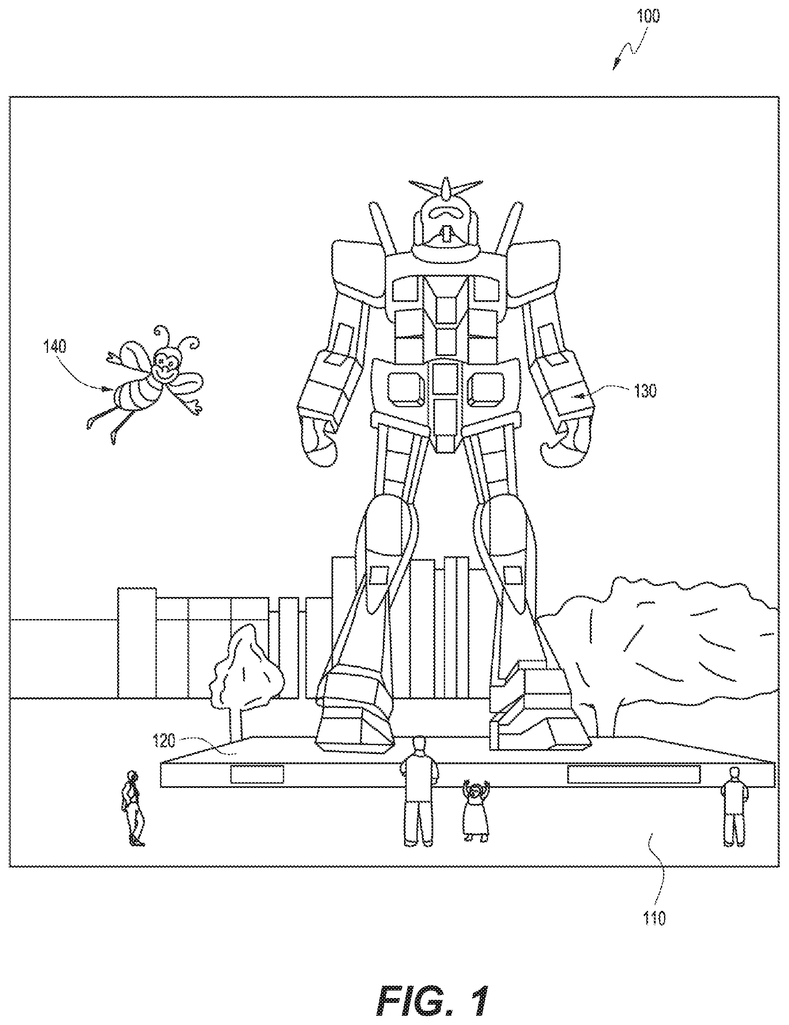

FIG. 1 shows a mixed reality scenario in which the user sees both virtual objects and a physical object. The scene 100 depicted in FIG. 1 includes a park-like real-world setting 110 with people, trees, and buildings in the background, along with a platform 120. The user of the AR/MR technology also perceives a virtual robot standing on the real-world platform 120, as well as a flying cartoon-like avatar character that seems to personify a bumblebee.

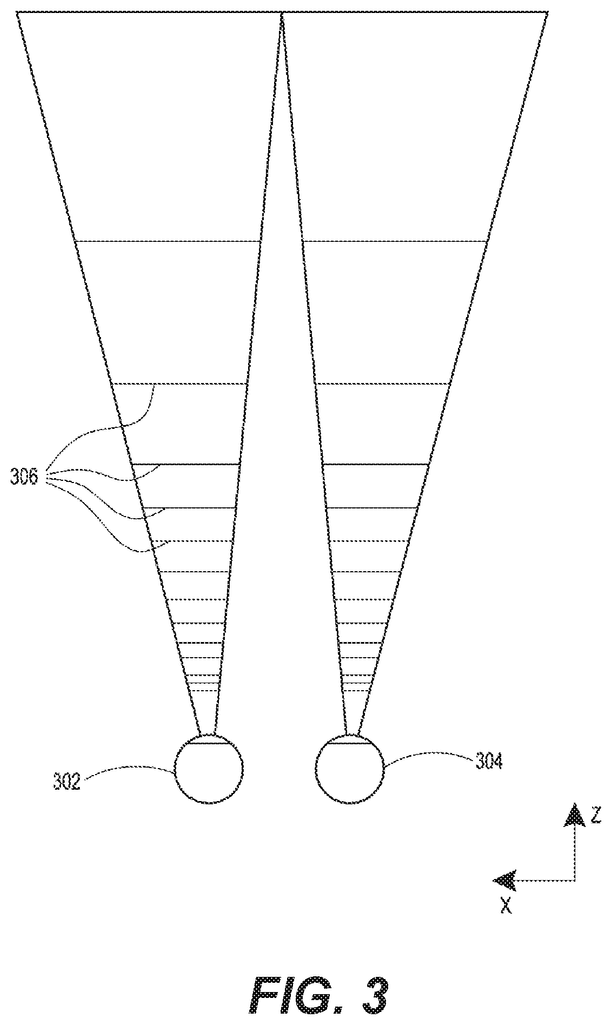

To simulate the illusion of depth convincingly, each display point may need to generate an accommodative response that corresponds to its virtual depth. If the accommodative response to a display point does not match the virtual depth of that point, as determined by the binocular depth cues of stereopsis and convergence, the human eye can experience an accommodation conflict. This may result in unstable images, eye strain, and headaches.

VR, AR, and MR experiences can be provided by display systems whose displays present images corresponding to a number of depth planes to a viewer. The images may differ for each depth plane (e.g., provide slightly different presentations of a scene or object) and may be focused differently by the viewer’s eyes. This helps provide depth cues to the user, based either on the accommodation the eye needs to bring image elements located on different depth planes into focus, or on observing that features on other depth planes are out of focus. As discussed elsewhere in this document, such depth cues provide credible perceptions of depth.
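As a rough illustration of how content might be assigned to one of a small number of depth planes, the sketch below picks the plane closest to a virtual object's distance. It is an assumption-laden example, not the patent's method: the set of planes in `DEPTH_PLANES_DIOPTERS` is invented, and comparing distances in diopters (the reciprocal of distance in metres, the unit in which accommodation is usually expressed) is a design choice made here.

```python
import math

# Hypothetical depth planes, in diopters (1 / metres). 0.0 is optical infinity.
DEPTH_PLANES_DIOPTERS = (0.0, 0.5, 1.0, 2.0, 3.0)


def to_diopters(distance_m: float) -> float:
    """Convert a viewing distance in metres to diopters."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return 1.0 / distance_m  # math.inf maps to 0.0 diopters


def nearest_depth_plane(distance_m: float,
                        planes=DEPTH_PLANES_DIOPTERS) -> float:
    """Return the depth plane (in diopters) closest to the object's distance."""
    target = to_diopters(distance_m)
    return min(planes, key=lambda plane: abs(plane - target))
```

With these assumed planes, an object 2 m away lands on the 0.5 diopter plane, and an object at optical infinity (`math.inf`) lands on the 0.0 diopter plane.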

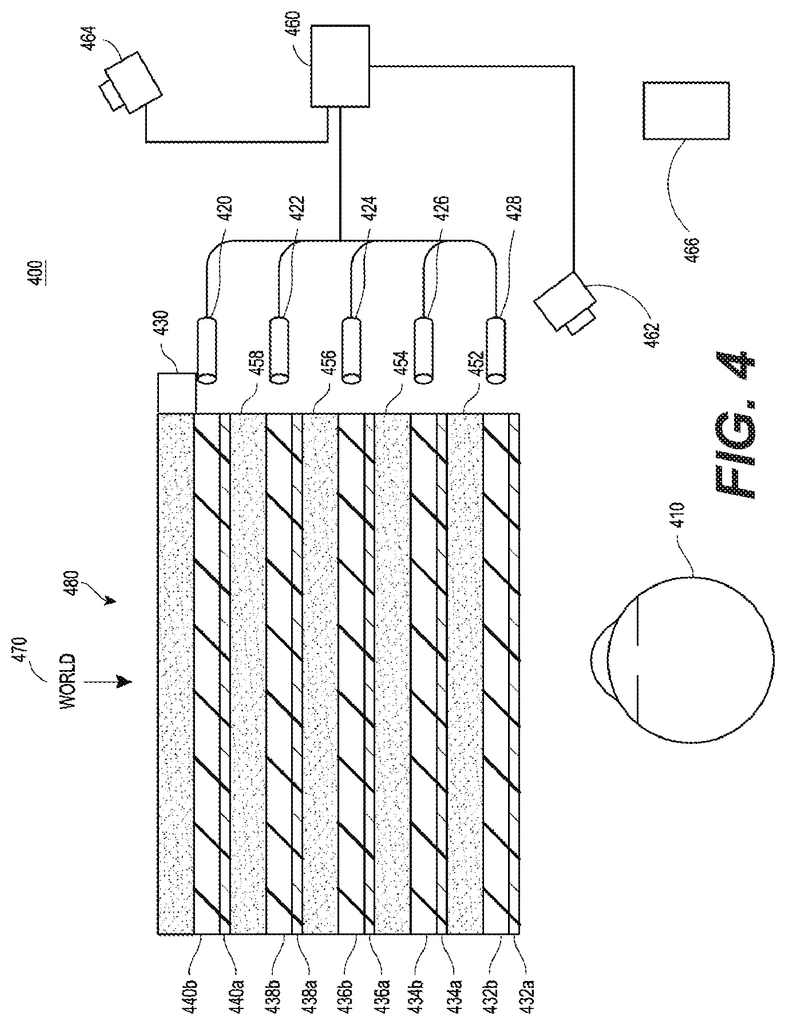

FIG. 2 shows an example of a wearable VR/AR/MR system 200. The wearable system 200 comprises a display 220, as well as various mechanical and electronic modules that support the display 220. The frame 230 can be worn by the user, wearer, or viewer 210. The display 220 may be positioned in front of the eyes of the user 210 and may present AR/VR/MR content to the user. The display 220 may be a head-mounted display (HMD) worn by the user. In some embodiments, a speaker is attached to the frame 230 and positioned adjacent to the user’s ear (another speaker, not shown, can be positioned adjacent to the user’s other ear to provide stereo/shapeable sound control).

The wearable system 200 can include an outward-facing imaging system 464 (shown in another figure of the patent). The wearable system 200 can also include an inward-facing imaging system 462 (likewise shown in another figure), which can track the movements of one or both eyes. The inward-facing imaging system 462 can be attached to the frame 230 and can be in electrical communication with the processing modules 260, 270. These modules may use the image information acquired by the inward-facing imaging system to determine, for example, the eye movements or line of sight, as well as the pupil diameters or orientations of the eyes.

The wearable system 200 can, for example, use the outward-facing imaging system 464 or the inward-facing imaging system 462 to capture images of the user’s pose or gestures. The images may be still images, frames of a video, a video, or combinations of these.

The display 220 may be coupled to a local processing and data module 260 by means of a wired or wireless connection 250. This module can be fixed to the frame 230, fixed to a helmet, hat, or other headgear worn by the user, embedded in headphones, or otherwise removably attached to the user (e.g., in a backpack-style or belt-coupling configuration).

The local processing and data module 260 can include a hardware processor as well as digital memory, such as non-volatile memory (e.g., flash memory), both of which may be used to assist in the processing, caching, and storage of data. The data may be captured by sensors, such as image capture devices (e.g., cameras in the inward-facing or outward-facing imaging system), microphones, inertial measurement units (IMUs), accelerometers, compasses, global positioning system (GPS) units, radio devices, or gyroscopes, or it may be acquired or processed using the remote processing module 270 or the remote data repository 280, possibly for transmission to the display 220. Communication links 262 and 264 may operatively couple the local processing and data module 260 to the remote processing module 270 and the remote data repository 280. The remote processing module 270 and the remote data repository 280 may also be operatively coupled to each other.

In some embodiments, the remote processing module 270 can include one or more processors configured to process and analyze data and/or images. In some embodiments, the remote data repository 280 may be a digital data storage facility that is accessible via the internet or another networked resource configuration. In some embodiments, all data is stored and all computations are performed in the local processing and data module 260, allowing fully autonomous use from a remote module.

The human visual system is complex, and providing a realistic sense of depth can be challenging. Viewers may perceive an object as three-dimensional due to a combination of vergence and accommodation. Vergence movements of the two eyes (i.e., rolling movements of the pupils toward or away from one another to converge the lines of sight of the eyes and fixate on an object) are closely related to focusing (or “accommodation”) of the lenses of the eyes. Under normal conditions, changing the focus of the lenses of the eyes, or accommodating the eyes, to change focus from one object to another object at a different distance will automatically cause a matching change in vergence to the same distance, under a relationship known as the “accommodation-vergence reflex.” Likewise, under normal conditions, a change in vergence will trigger a matching change in accommodation. Display systems that provide a better match between accommodation and vergence can create more realistic and comfortable three-dimensional images.
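The relationship between vergence and accommodation can be made concrete with a small Python sketch based on standard geometry rather than anything stated in the patent. It assumes symmetric fixation straight ahead, an interpupillary distance (IPD) defaulting to 63 mm as a typical adult value, and accommodation demand expressed in diopters. All function names are hypothetical.

```python
import math


def vergence_angle_deg(distance_m: float, ipd_m: float = 0.063) -> float:
    """Angle between the two lines of sight when fixating at distance_m.

    Assumes the fixation point lies straight ahead, midway between the eyes.
    """
    if distance_m <= 0 or ipd_m <= 0:
        raise ValueError("distance and IPD must be positive")
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))


def accommodation_diopters(distance_m: float) -> float:
    """Focusing demand, in diopters, for an object at distance_m."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return 1.0 / distance_m


def mismatch_diopters(vergence_distance_m: float, focal_distance_m: float) -> float:
    """Gap between where the eyes converge and where the display is focused."""
    return abs(accommodation_diopters(vergence_distance_m)
               - accommodation_diopters(focal_distance_m))
```

In this sketch, a virtual object rendered to appear 0.5 m away on a display focused at 2 m gives a mismatch of 1.5 diopters, while a display focused at the same distance as the object gives zero.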
