Invented by Akula; Arjun Reddy, Pruthi; Garima

Understanding colors in images isn’t just about seeing red, blue, or green. For computers, it’s much more complex, especially when it comes to telling the difference between very close shades. A new patent application brings an exciting approach to helping machines learn about colors with impressive accuracy. In this article, we’ll cover what’s happening in the world of color-aware AI, why current systems often fall short, and how this new invention can change the game.

Background and Market Context

Color is a powerful part of how we see the world. It shapes what we feel, helps brands stand out, and makes digital content appealing. In business, color is a big deal—think about the signature red of Coca-Cola or the bright blue of Facebook. Brands spend millions making sure their colors are always just right because color means identity and trust.

As more images and videos fill the internet, we need smart systems that can understand, search, and create digital content based on what people want. If you type “green shoes” into a shopping site, you expect to see green shoes, not blue or teal ones. If you’re a designer or marketer, you want to make sure your product images match your brand’s exact color specifications. And for creators, being able to generate or search for images with specific colors opens up new creative possibilities.

Today, smart computer models, especially those that link images and text, are everywhere. They help us search better, shop smarter, and create faster. These models work by connecting pictures with words, so if you ask for a “yellow flower,” you get a photo of a yellow flower. But there’s a catch: when it comes to exact colors, things often get fuzzy. If you want a specific green or a brand’s unique blue, the results may not be accurate. The difference between “sky blue” and “baby blue” might be lost on the machine, even if it’s obvious to a human.

This gap is more than just an annoyance; it’s a real problem for many industries. Fashion brands want to make sure their catalog photos match their actual products. Designers need to see how a color will look on a product before making it. Marketing teams want to check that every ad uses the right brand colors. As more work moves online, the need for precise color understanding in AI has never been more important.

But why do current systems struggle with color? Most data used to train AI models only labels colors in a general way. Words like “red,” “blue,” or “green” are common, but rarely do we see exact terms like RGB values (“#FF5733”) or unique brand colors. This makes it tough for computers to tell apart subtle shades. The lack of detailed, high-quality image-text pairs that describe precise colors makes training even harder. As a result, color confusion remains a weak spot in many AI models today.

Enter this new patent application, which aims to solve the color problem at its core. By teaching computers to truly understand and match specific colors—down to very fine differences—this technology could have a huge impact on how we search, create, and manage digital content.

Scientific Rationale and Prior Art

To understand what’s new about this invention, it helps to know how current systems work and where they fall short. At the heart of many modern image and text systems is something called an “embedding model.” In simple terms, these models learn to turn both images and words into numbers (called embeddings) in such a way that similar images and similar words end up close together in this number space. This trick makes it possible for the computer to match a text prompt like “red apple” to an image of a red apple.

One well-known approach in this space is CLIP (Contrastive Language-Image Pretraining), which was developed to connect images and text. CLIP is trained on lots of image-text pairs from the internet, learning to match images to descriptions and vice versa. This allows for all sorts of cool tasks, like searching for images by text, generating images from descriptions, and more. But as powerful as CLIP and similar models are, they still have weak spots when it comes to exact color matching—especially for unusual shades or brand-specific colors.

The main reason for this is data. Most training data uses broad color names. If you want a “red apple,” the system can find it. But if you want a “Pantone 185C apple,” things get messy. There simply isn’t enough data with that level of detail in color descriptions. This leads to several problems:

First, the model might mix up similar colors. For example, “blue” and “sky blue” could be treated almost the same, even if they look very different.

Second, if you try to fix this by training the model on a small set of very specific examples, you risk something called “overfitting.” That means the model gets too focused on the few examples it has seen and doesn’t generalize well to new ones. In the worst case, the model could even collapse, failing to learn anything useful at all.

In the world of computer vision, other attempts to improve color understanding have included using larger datasets, adding more color labels, or tweaking the training process. Some researchers have tried to use segmentation models, which help identify parts of an image (like the apple, not the background), and then change only the color of those parts. Others have explored color transfer techniques, where the color from one image is applied to another.

Still, all these approaches hit the same wall: data. Without enough detailed, high-quality pairs of images and exact color descriptions, it’s hard for AI to learn fine color distinctions. Most systems are good at broad categories (“red car”), but struggle with subtle or rare colors (“turquoise blue car” or “brand-specific yellow”).

This is where the new patent’s approach stands out. It introduces a way to generate new, detailed image-text pairs on demand, covering any color—even those not found in standard lists. This not only gives the model more to learn from, but also helps to avoid overfitting by creating a large, varied training set. The invention also brings in new training tricks to make sure the model focuses on color differences and doesn’t lose track of the overall meaning of the image or text.

By combining smart data generation, careful color editing, and special training steps, this invention moves beyond what previous systems have done. It sets out to make AI models that can truly “see” and “read” colors the way people do—even when the colors are rare or brand-specific.

Invention Description and Key Innovations

This new patent application presents a complete system for teaching computers to understand and match colors with a level of detail never seen before. Let’s break down how it works and what makes it special.

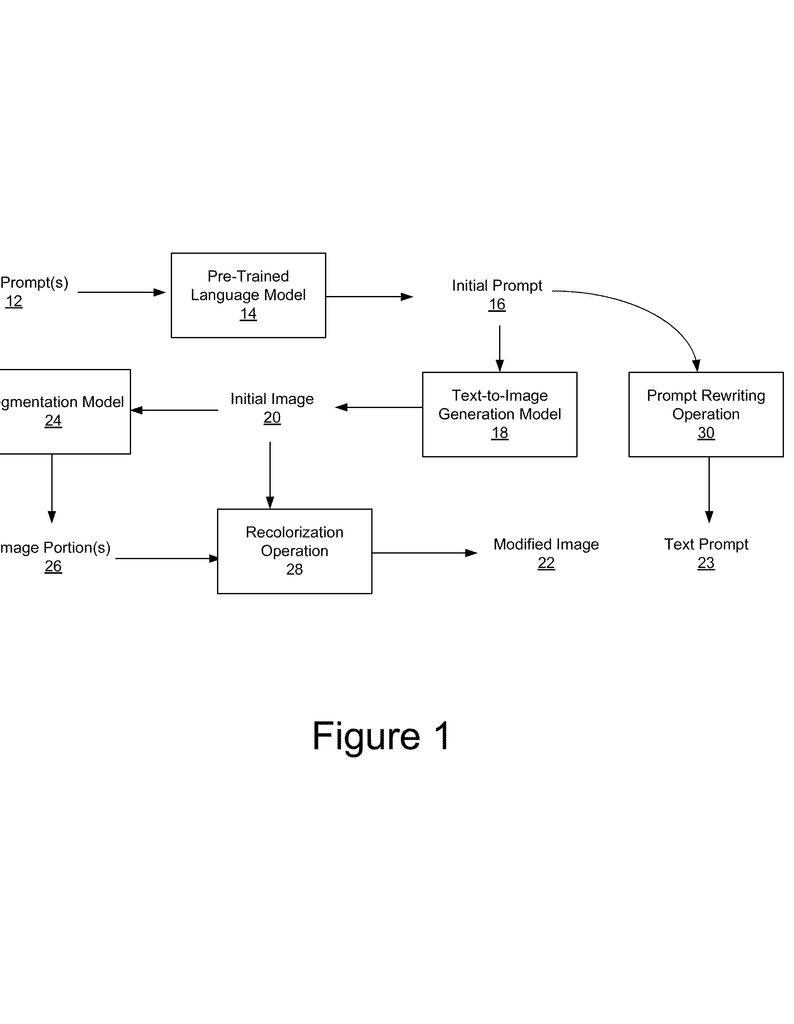

The core idea is to create new training data that connects images and text in a way that highlights the exact color of an object. Here’s how the process unfolds, step by step:

1. Start with an initial image and prompt: The system begins by getting an image of an object in a specific color. This image could be generated using a text prompt like “a red apple.” This prompt might be created by a smart language model, and the image itself might be generated by a text-to-image model that can turn words into pictures.

2. Change the color in the image: Next, the system uses a segmentation model to find the object in the image. For our example, it would locate the apple and not the background. Then, it carefully changes the color of the apple pixels to a different color—say, green or a unique brand shade—using a recolorization technique. This ensures only the object changes color and the rest of the image stays the same.

3. Update the text prompt: At the same time, the original text prompt is edited to match the new color. So, “a red apple” becomes “a green apple” or “a turquoise apple.” If the new color isn’t a common name, the system can even use a special token or code to represent it, making it possible to handle any RGB color, even rare or brand-specific shades.

4. Create lots of these pairs: By repeating this process with many objects and many colors, the system builds a large set of image-text pairs where each pair is tightly linked to a specific color. This gives the AI model much more detailed examples to learn from, covering the full range of possible shades.

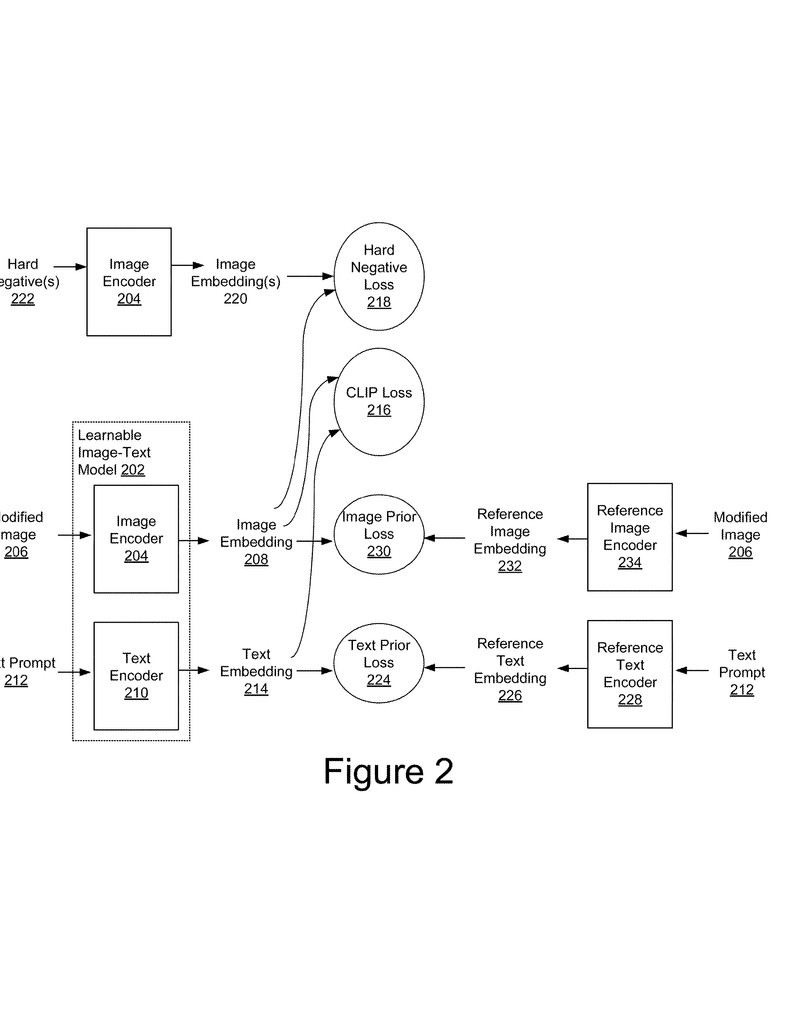

5. Train the model with smart losses: The model is trained using these pairs. It uses a special loss function—a way to measure how well it’s learning—that combines several elements. First, it uses a contrastive loss (like CLIP) to bring matching image and text embeddings closer together, and push mismatched ones apart.

But the invention goes further. It introduces “hard negatives,” which are images that look almost like the target image but have slightly different colors. By making the model tell apart, for example, a “turquoise apple” from a “teal apple,” it learns to notice even small color differences. This is crucial for applications where exact shades matter.

The system also uses “prior losses” to make sure the model doesn’t lose its grip on the overall meaning of images and text. These losses compare the current model’s outputs to a reference version (from before fine-tuning), helping to avoid overfitting and keeping the model’s general knowledge intact.

6. Flexible and broad color support: The model isn’t limited to standard colors like those in HTML lists. With the use of unique tokens, it can learn about any RGB color, whether it’s a standard shade, a rare hue, or a brand-specific color. This opens the door to a whole new level of color precision in AI.

7. Wide range of uses: Once trained, the model can do much more than just match images to color descriptions. It can:

- Find images based on specific color prompts

- Describe the exact color found in an image

- Generate new images in requested colors

- Help brands check that their colors are used correctly

- Support creative tools where users pick any color they like

The patent also describes various ways the system can be set up, including working on a user’s device, on a central server, or in a hybrid way. It could be used in apps, websites, or design tools, making it very flexible for businesses and creators.

What makes this invention special?

It’s not just about changing colors in images. The real magic is in how the invention weaves together smart data generation, careful editing, and advanced training techniques to build a model that truly “gets” color. By focusing on color at every step—image creation, text editing, and model training—it creates a system that can recognize, match, and generate even the most subtle color variations.

Unlike past systems, which were stuck with the colors found in their training data, this invention lets you teach the model about any color you want. And by using hard negatives and prior losses, it avoids the common pitfalls of overfitting and losing general knowledge. The result is a model that’s both flexible and robust, able to handle real-world challenges where color matters most.

Conclusion

Color is more than just a detail—it’s a critical part of how we see, feel, and connect with the world. For AI, truly understanding color means unlocking new possibilities in design, branding, search, and creativity. The patent application we explored introduces a powerful new way to teach computers about color, moving beyond the limits of today’s systems. By creating custom image-text pairs, editing images and prompts with precision, and using smart training techniques, this invention paves the way for AI models that can match, describe, and create images with exact colors—even those never seen before.

For companies, designers, and creators, this means better tools for managing brand identity, more accurate searches, and new creative options. For the world of AI, it’s a big step toward making machines that see color as clearly as we do. As this technology develops, expect to see smarter, more color-aware systems everywhere—from shopping and advertising to art and design.

Click here https://ppubs.uspto.gov/pubwebapp/ and search 20250218076.