Invented by Jake Matthew Daly, Ian Charles Colbert, Andrei Rudolfovich Yershov, Ryan Mitchell, Robert A. Gottlieb, and Norman Rubin (Advanced Micro Devices, Inc.)

Compilers are the silent engines powering our software, turning code into something computers can run. But what if compilers could use artificial intelligence to make programs even faster and more efficient? This patent application describes how AI can guide a compiler’s instruction scheduler, changing how code is arranged and run. Let’s go step by step to understand why this matters, what has come before, and what this invention brings to the table.

Background and Market Context

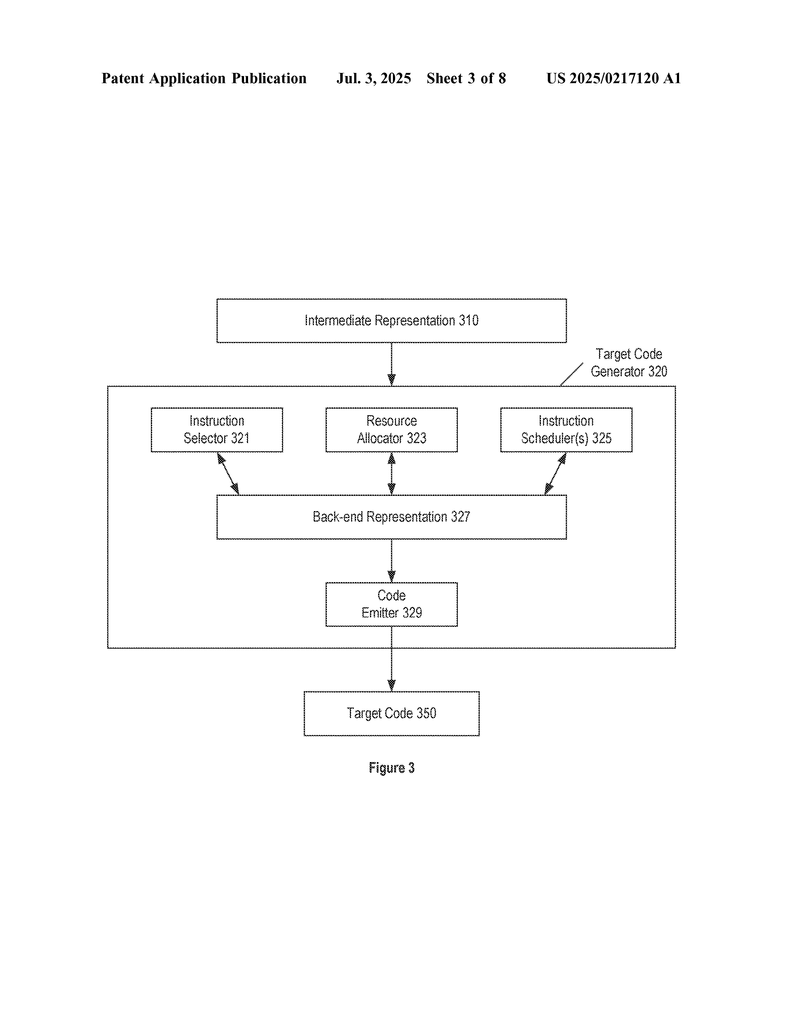

When you write a computer program, you use simple words and clear steps. But computers don’t understand these words directly. A compiler turns your program into a special set of instructions that the computer’s brain—its processor—can follow. This process is called compilation.

The compiler’s job does not stop at just translating; it tries to make your program better. It can make the program run faster, use less memory, or save power. One big part of this is called instruction scheduling. Imagine you have a list of chores to do, and you want to do them in the best order so you finish quickly and don’t waste time waiting. That is what instruction scheduling does for a program.

Why is this important? Modern computers have many types of processors. There are CPUs (the main brain), GPUs (which are great for graphics), and many others like FPGAs, TPUs, and even chips made just for AI. Each one works in its own way. Making sure the instructions are in the best order for each chip can mean the difference between a fast game and one that lags, or a phone battery that lasts all day versus one that dies by lunch.

But as processors get more complex, the job of the compiler also gets harder. For example, a graphics processor (GPU) can run many things at once, but it also has strict rules about how instructions should be ordered. If the compiler doesn’t get it right, the program will be slow, or even worse, it may not work at all.

Big tech companies and chip makers spend a lot of money making sure their compilers are smart. They want every app, game, or system to run as fast as possible, because users notice even small delays. If AI can help compilers do a better job, that means faster phones, games, websites, and more. This is why the market for smarter compiler technology is growing fast.

In summary, the market is hungry for better, faster, and smarter compilers. AI-based instruction scheduling is a new and powerful tool to meet this demand. This invention aims to make compilers not just translators, but intelligent helpers that learn and adapt to every type of processor.

Scientific Rationale and Prior Art

To understand why this new approach matters, let’s first look at how compilers have handled instruction scheduling before.

Traditional compilers use a set of rules called heuristics. These rules might say, “Do this kind of instruction first,” or “Don’t do two big tasks at the same time.” The compiler checks the instructions and tries different orders, hoping to find the best one. This is a bit like guessing which order will be fastest for your chores, based on past experience.

One common way is called evaluation-driven scheduling. Here’s how it works: the compiler tries many different ways to order the instructions (sometimes dozens or more for each small piece of code, called a basic block). It scores each order on things like how many cycles the processor will need, or how much waiting (called pipeline stalls) will happen, and keeps the order with the best score. The problem is that trying all these combinations takes a lot of time and computer power, especially for large programs.
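To make evaluation-driven scheduling concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `Instr` type, the three heuristics, the latencies, and the toy cost model (a single-issue, in-order pipeline) are not taken from the patent application or from any real compiler.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Instr:
    name: str
    latency: int        # cycles until the result is available (made-up values)
    deps: tuple = ()    # names of instructions whose results this one needs

def list_schedule(block, priority):
    """Greedy list scheduling: keep emitting the ready instruction with the highest priority."""
    done, order, remaining = set(), [], list(block)
    while remaining:
        ready = [i for i in remaining if all(d in done for d in i.deps)]
        pick = max(ready, key=priority)     # ties go to the earliest instruction
        order.append(pick)
        done.add(pick.name)
        remaining.remove(pick)
    return order

def estimate_cycles(order):
    """Toy cost model: one instruction issues per cycle and stalls until its inputs are ready."""
    ready_at, cycle = {}, 0
    for ins in order:
        start = max([cycle] + [ready_at[d] for d in ins.deps])
        ready_at[ins.name] = start + ins.latency
        cycle = start + 1
    return max(ready_at.values())

# Hypothetical heuristics; a real compiler would have many more.
HEURISTICS = {
    "source_order": lambda ins: 0,
    "long_latency_first": lambda ins: ins.latency,
    "short_latency_first": lambda ins: -ins.latency,
}

def evaluation_driven(block):
    """Run every heuristic, score every resulting order, keep the cheapest."""
    candidates = {name: list_schedule(block, h) for name, h in HEURISTICS.items()}
    best = min(candidates, key=lambda name: estimate_cycles(candidates[name]))
    return best, candidates[best]

BLOCK = [
    Instr("add1", 1),
    Instr("use1", 1, ("add1",)),
    Instr("load", 4),
    Instr("use2", 1, ("load",)),
]

best, order = evaluation_driven(BLOCK)
print(best, [i.name for i in order], estimate_cycles(order))
```

On this tiny block the long-latency-first heuristic wins because it starts the slow load early and hides its latency behind the two adds. The cost of the approach is also visible: every heuristic runs and every result is scored, even the ones that never had a chance.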

Some compilers use model-driven scheduling, where an AI tries to directly create the best order. This can work, but it has its own problems. The AI needs to know what a “best” order looks like, and finding that is very hard for large pieces of code. Also, training an AI to do this can take ages, because there are so many ways to arrange instructions.

Other approaches blend the two, using AI to help choose which rules (heuristics) to use, but still relying on the compiler’s own logic to do most of the work. However, even these can be slow, because they often don’t reduce the number of orders the compiler has to check by much.

As processors keep getting more complicated, these old ways just can’t keep up. There are too many possible ways to schedule instructions, and the time to try them all grows quickly. This is especially true for devices like GPUs and AI chips, which run hundreds or thousands of instructions at the same time.

What’s missing? A way for the compiler to predict which instruction orders are likely to be best, before it spends time checking them all. That’s where this patent’s approach comes in. Instead of always trying every possible rule, the compiler uses an AI model to guess which rules are likely to work best for each basic block. This means less guessing, less wasted time, and faster, smarter code.

The science behind this is simple yet powerful. AI models, especially those based on neural networks and transformers (the same kind of technology behind modern language models), are very good at spotting patterns. If given enough examples, they can learn to pick out which rules will work best for different types of code. By training the model on past data, the compiler gets better over time, adapting to new processors and new types of programs.
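One way such training data could be built is sketched below. This is an assumption, not something the article or the application spells out: for each past basic block, run every procedure offline, record the estimated cost of each, and mark the procedures that came within a tolerance of the best. The `make_labels` name, the tolerance parameter, and the cost numbers are all hypothetical.

```python
def make_labels(costs, tolerance=0.0):
    """Turn per-procedure costs for one block into a multi-hot training label.

    costs[i] is the estimated cost (for example, cycles) of procedure i.
    A procedure is labeled 1 if it is within `tolerance` of the best cost.
    """
    best = min(costs)
    return [1 if c <= best * (1 + tolerance) else 0 for c in costs]

# Made-up costs for two blocks, three procedures each.
print(make_labels([7, 5, 7]))                # only the second procedure is best
print(make_labels([10, 10.4, 12], 0.05))     # the first two are within 5% of best
```

A model trained on pairs of block features and labels like these would learn to score procedures, and the compiler could then keep the top K scores for each new block.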

Existing art has tried using AI in compilers, but usually in a “driving” role—letting the AI decide everything. This can be slow and hard to train. The new idea here is to use AI as a “guide” instead. The AI helps the compiler choose, but the compiler still does the heavy lifting. This keeps things fast and practical.

Invention Description and Key Innovations

This invention is all about using artificial intelligence to help compilers make better decisions when arranging instructions. Let’s break down how it works and what makes it special.

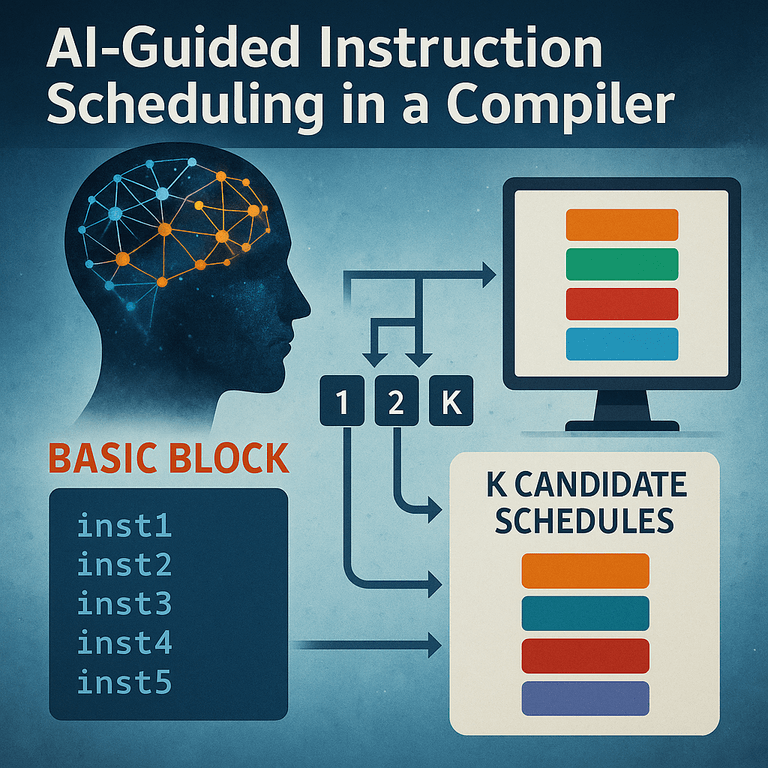

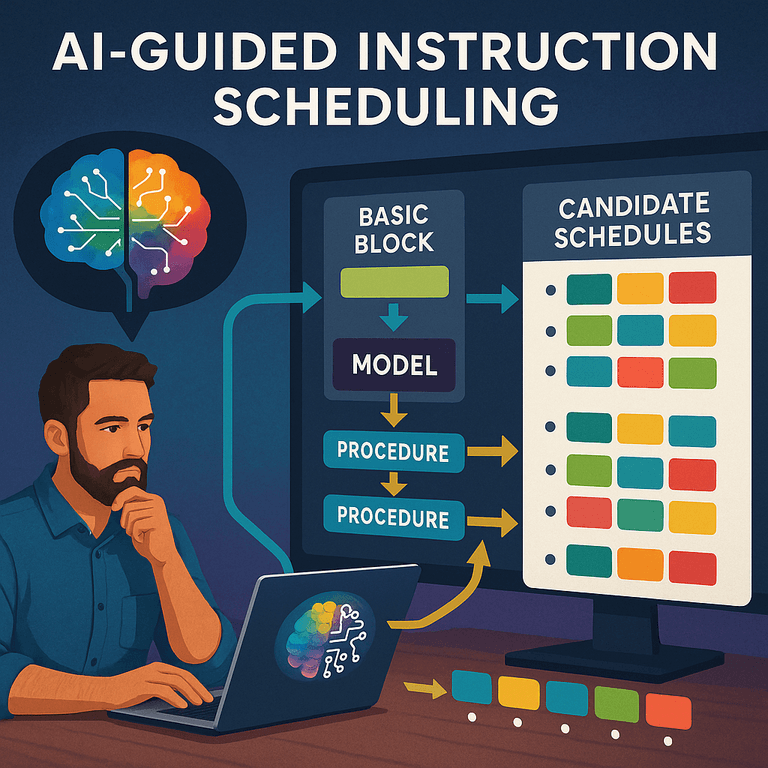

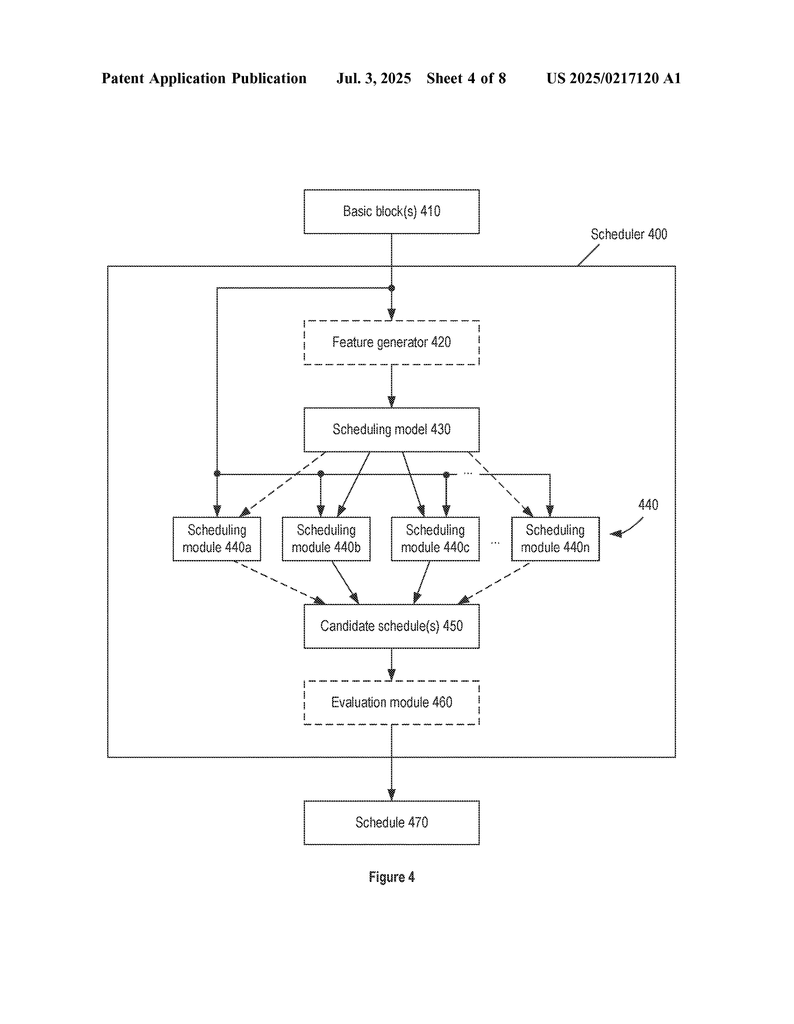

The heart of the invention is a process that, for each basic block of code, uses an AI model to pick a few of the best instruction scheduling procedures from a bigger set. Instead of trying every possible way to order the instructions, the compiler only tries the most promising ones. Here’s how it works, step by step:

1. Dual Representations: For every basic block (a straight-line run of instructions with no branches into or out of the middle, so they always execute together), the compiler creates two versions. One is high-level (not tied to any specific processor), and the other is low-level (matched to the target processor). The high-level version is easier for the AI to understand, while the low-level version is what finally gets run.

2. Feature Extraction: The compiler takes the high-level version and turns it into a set of features—simple numbers or tokens that describe what’s inside. This might include the number of instructions, the types of instructions, and more. This keeps the AI model fast and small.

3. AI-Based Selection: These features are fed into an AI model (often a neural network with pooling layers to keep it small and efficient). The AI looks at these features and predicts which of the available scheduling procedures are most likely to create the best result for this block. If there are N possible procedures, the AI picks the top K (where 1 ≤ K < N).

4. Focused Scheduling: Now, instead of running all N procedures, the compiler only runs the K selected ones on the low-level version of the block. Each one produces a candidate schedule (an ordering of instructions).

5. Evaluation: The compiler checks which of these K candidate schedules is best using its normal evaluation tools (like estimating the number of cycles or stalls), and keeps that one.

6. Code Generation: Finally, the compiler uses the best candidate schedule to produce the portion of the target code that will actually run on the processor.
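The six steps above can be sketched end to end in a few dozen lines of Python. Treat this as a toy under stated assumptions, not the patented implementation: the `Instr` type, the three procedures, the features, and the cost model are invented for illustration, and the "model" is a hand-picked weight table standing in for a trained neural network.

```python
from collections import Counter
from typing import NamedTuple

class Instr(NamedTuple):
    name: str
    opcode: str
    latency: int        # cycles until the result is available (made-up values)
    deps: tuple = ()    # names of instructions this one depends on

# Step 1: two views of the same basic block.
HIGH = ["add", "add", "load", "mul"]    # target-independent: opcodes only
LOW = [                                 # target-specific: latencies and dependencies
    Instr("a", "add", 1),
    Instr("b", "add", 1, ("a",)),
    Instr("c", "load", 4),
    Instr("d", "mul", 1, ("c",)),
]

# Step 2: turn the high-level view into a few numbers.
def extract_features(high):
    counts = Counter(high)
    n = len(high)
    return [n, counts["load"] / n, counts["mul"] / n]

def list_schedule(low, priority):
    """Greedy list scheduler: emit the ready instruction with the highest priority."""
    done, order, remaining = set(), [], list(low)
    while remaining:
        ready = [i for i in remaining if all(d in done for d in i.deps)]
        pick = max(ready, key=priority)     # ties go to the earliest instruction
        order.append(pick)
        done.add(pick.name)
        remaining.remove(pick)
    return order

# The N = 3 available scheduling procedures (hypothetical heuristics).
PROCEDURES = [
    lambda low: list_schedule(low, lambda i: 0),            # 0: keep source order
    lambda low: list_schedule(low, lambda i: i.latency),    # 1: long latency first
    lambda low: list_schedule(low, lambda i: -i.latency),   # 2: short latency first
]

# Step 3: stand-in for the trained model, one hand-picked weight row per procedure.
WEIGHTS = [[0.0, 0.0, 0.0], [0.0, 2.0, 1.0], [0.0, -2.0, -1.0]]

def select_top_k(features, k):
    scores = [sum(w * f for w, f in zip(row, features)) for row in WEIGHTS]
    ranked = sorted(range(len(scores)), key=lambda p: scores[p], reverse=True)
    return ranked[:k]

# Step 5: toy cost model (single-issue, in-order, stalls on unready inputs).
def estimate_cycles(order):
    ready_at, cycle = {}, 0
    for ins in order:
        start = max([cycle] + [ready_at[d] for d in ins.deps])
        ready_at[ins.name] = start + ins.latency
        cycle = start + 1
    return max(ready_at.values())

def schedule_block(high, low, k=2):
    if not 1 <= k < len(PROCEDURES):
        raise ValueError("k must satisfy 1 <= K < N")
    chosen = select_top_k(extract_features(high), k)    # step 3: pick K of N
    candidates = [PROCEDURES[p](low) for p in chosen]   # step 4: run only those K
    best = min(candidates, key=estimate_cycles)         # step 5: keep the cheapest
    return chosen, [i.name for i in best]               # step 6 would emit code from this order

chosen, order = schedule_block(HIGH, LOW)
print(chosen, order)    # [1, 0] ['c', 'a', 'b', 'd']
```

The point to notice is that procedure 2 is never run: the stand-in model ranked it last for this load-heavy block, so the compiler skipped both scheduling and evaluating it. The evaluation step still acts as a safety net, because the final choice among the K candidates is made by the compiler’s own cost estimate, not by the model.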

What’s innovative?

First, this method saves a lot of time and computer power. By using the AI model to narrow down choices, the compiler skips many unnecessary steps. For large blocks, the savings are huge. The inventors report up to 25% faster scheduling for real-world shader programs, and sometimes more than 50% for individual blocks.
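To see where the savings come from, here is a back-of-the-envelope cost model. It is an illustration, not something from the application: it assumes every scheduling procedure takes about the same time $t_s$ to run and $t_e$ to evaluate, and that one model prediction takes $t_m$. All of these symbols are introduced here for the sketch.

$$
T_{\text{all}} = N\,(t_s + t_e), \qquad T_{\text{guided}} = t_m + K\,(t_s + t_e)
$$

$$
\text{fraction saved} = 1 - \frac{T_{\text{guided}}}{T_{\text{all}}} = 1 - \frac{K}{N} - \frac{t_m}{N\,(t_s + t_e)}
$$

With illustrative values $N = 8$ and $K = 2$, the $K/N$ term alone caps the saving at 75%, before the model’s own cost is subtracted. The last term also shows why tiny blocks gain little: when $t_s + t_e$ is small, the fixed cost $t_m$ of asking the model eats most of the benefit.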

Second, the use of AI is flexible. If a new processor comes out, the AI model can be retrained with new data. If a new kind of workload (like a game or a big data task) becomes common, the model can be fine-tuned. This means the same compiler can quickly adapt to new hardware, saving time and money for both chip makers and software developers.

Third, the invention allows for “hybrid” scheduling. For very small blocks, traditional evaluation-based scheduling is still fastest. The invention includes a way to automatically pick which scheduler to use for each block, based on things like the number of instructions or the kind of work being done. This means the compiler always uses the best tool for the job.
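A hybrid dispatcher of this kind could be as simple as the sketch below. The cutoff of 16 instructions and the scheduler names are hypothetical; the article only says the choice depends on things like block size and workload, not where the line sits.

```python
# Hypothetical cutoff, chosen only for illustration.
SMALL_BLOCK_THRESHOLD = 16

def pick_scheduler(num_instructions, threshold=SMALL_BLOCK_THRESHOLD):
    """Return which scheduler to use for a block of the given size."""
    if num_instructions < threshold:
        return "evaluation_driven"   # try every procedure; cheap for tiny blocks
    return "ai_guided"               # let the model narrow N procedures down to K

# Made-up block sizes for one function.
blocks = {"entry": 6, "loop_body": 120, "exit": 3}
plan = {name: pick_scheduler(size) for name, size in blocks.items()}
print(plan)
```

In practice the threshold would be tuned by measuring where the model’s inference cost stops paying for itself, and it could take more than block size into account.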

Fourth, the patent describes several technical tricks to keep the AI model fast and light, such as using pooling layers and feature normalization. This makes it practical to use even in large projects or on computers with limited resources.
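Here is a small sketch of why pooling and normalization help. It assumes mean pooling and z-score normalization, which are common choices but may not be the exact ones the application uses; the two-dimensional embeddings and the statistics are made up.

```python
# Hypothetical 2-d embeddings for three opcode tokens.
EMBED = {"add": [1.0, 0.0], "mul": [0.0, 1.0], "load": [0.5, 0.5]}

def mean_pool(tokens):
    """Average the token embeddings so any block length maps to one fixed-size vector."""
    vecs = [EMBED[t] for t in tokens]
    return [sum(col) / len(vecs) for col in zip(*vecs)]

def normalize(x, mean, std):
    """Z-score each feature using statistics gathered from training data."""
    return [(v - m) / s for v, m, s in zip(x, mean, std)]

short_block = ["add", "mul"]
long_block = ["add", "mul", "load"] * 100

# Both blocks collapse to a vector of length 2, so the layers after the
# pooling step stay the same size no matter how long the block is.
print(mean_pool(short_block), len(mean_pool(long_block)))
print(normalize(mean_pool(short_block), mean=[0.5, 0.25], std=[0.5, 0.25]))
```

Because the pooled vector has a fixed length, a 300-instruction block costs the downstream layers no more than a 2-instruction block, which is one reason a model built this way can stay small.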

Finally, this approach can work with many types of processors. Whether the target is a CPU, GPU, TPU, FPGA, or something else, the same basic method applies. The only difference is how the AI model is trained and which features matter most.

The invention also covers how the system can be implemented in real-world compilers, with clear details on how to connect the AI model, manage the code representations, and organize the scheduling steps. It even includes provisions for storing instructions on computer-readable media, making it easy to deploy in commercial products.

Why Does This Matter for the Industry?

Every millisecond counts in today’s world. Faster compilers mean faster software, happier users, and less wasted energy. With AI-guided scheduling, companies can get more out of every chip, whether it’s in a phone, a gaming console, or a massive data center.

For developers, this means less time spent tuning code by hand. For chip makers, it means their hardware can shine, running software at top speed. For end users, it means apps that open quickly, games that don’t lag, and devices that last longer on a charge.

Conclusion

This patent application lays out a simple but powerful idea: leverage artificial intelligence as a guide—not a replacement—for compiler instruction scheduling. By predicting which scheduling procedures to use for each block of code, the compiler can skip unnecessary work, adapt to new processors, and deliver better performance for everyone.

The approach is flexible, efficient, and ready for the real world. It balances the power of human-designed heuristics with the adaptability of modern AI, opening the door for smarter, faster, more responsive software on every device. As compilers become more intelligent, the line between software and hardware will blur even further, bringing us closer to a future where every program runs at its very best, no matter where it’s run.

If you work with compilers, chip design, or high-performance software, this is an innovation to watch. The next leap in performance may not come from faster chips, but from smarter software that knows how to make the most of every instruction.

To read the full application, go to https://ppubs.uspto.gov/pubwebapp/ and search for 20250217120.